Updated March 2023: using K3s 1.26 and MetalLB 0.13.9

By default, K3s uses the Traefik ingress controller and the Klipper service load balancer to expose services. Both can be replaced, here with a MetalLB load balancer and an NGINX ingress controller.

But a single NGINX ingress controller is sometimes not sufficient. For example, the primary ingress may serve all public traffic to your customers, while a secondary ingress serves administrative services only from a private internal network.

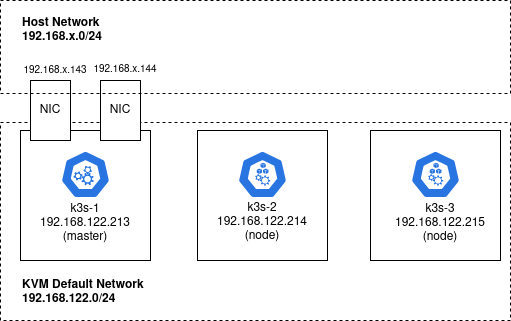

In this article, we will use two MetalLB IP addresses on the primary K3s host and then expose different deployments through the primary versus the secondary NGINX ingress controller to mimic public end-user versus private administrative access.

And if you would rather run all the steps in this article using an Ansible playbook (instead of manually), see the final section “Ansible playbook”.

Prerequisites

Disabling the default loadbalancer and ingress

This article builds off my previous article where we built a K3s cluster using Ansible. Using that playbook, the k3s systemd service file on k3s-1 “/etc/systemd/system/k3s.service” disables both the Klipper servicelb and Traefik ingress.

ExecStart=/usr/local/bin/k3s server --disable servicelb --disable traefik
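If you want to confirm the flags are in place before continuing, a grep against the unit file is enough. The following is a minimal sketch, assuming the single-line ExecStart form shown above; `has_disable_flag` is a hypothetical helper name, and a sample unit file is written to a temporary location so the sketch runs without a K3s host.

```shell
# Hypothetical helper: succeeds when the unit file passes '--disable <component>' to k3s.
# Assumes the single-line ExecStart form shown above.
has_disable_flag() {
  unit_file=$1
  component=$2
  grep -Eq -- "--disable[ =]${component}( |\$)" "$unit_file"
}

# A sample unit file stands in for /etc/systemd/system/k3s.service
# so the sketch runs without a K3s host.
sample_unit=$(mktemp)
printf '%s\n' 'ExecStart=/usr/local/bin/k3s server --disable servicelb --disable traefik' > "$sample_unit"

for component in servicelb traefik; do
  if has_disable_flag "$sample_unit" "$component"; then
    echo "${component}: disabled"
  else
    echo "${component}: still enabled"
  fi
done
```

On a real host you would pass the path of the actual service file instead of the sample.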

Multiple NIC to support MetalLB endpoints

If you used Terraform from the previous article to create the k3s-1 host, then you already have two additional network interfaces on the master k3s-1 host (ens4=192.168.2.143 and ens5=192.168.2.144).

A K3s cluster is not required. You can run the steps in this article on a single K3s node. But you MUST have two additional network interfaces and IP addresses on the same network as your host (e.g. 192.168.2.0/24) for the MetalLB endpoints.

Log in to K3s master

All the steps in this article will be performed while logged in to the K3s master. If you are using the K3s cluster we created in the previous article, then you can log in using:

ssh -i tf-libvirt/id_rsa ubuntu@192.168.122.213

Configure MetalLB to use additional NIC

Per the MetalLB installation by manifest section, install into the metallb-system namespace.

# download manifest

wget https://raw.githubusercontent.com/metallb/metallb/v0.13.9/config/manifests/metallb-native.yaml

# updating validatingwebhookconfigurations so it does not fail under k3s

sed -i 's/failurePolicy: Fail/failurePolicy: Ignore/' metallb-native.yaml

# apply manifest

kubectl apply -f metallb-native.yaml
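Because the sed rewrites the manifest in place, it can be worth confirming that no webhook is still set to Fail before applying. The sketch below runs the same substitution against a small sample fragment that stands in for metallb-native.yaml. The fragment is an assumption for illustration, not the real manifest, although the webhook names are ones MetalLB 0.13.9 registers.

```shell
# Sample fragment standing in for metallb-native.yaml (illustrative, not the real manifest)
manifest=$(mktemp)
cat > "$manifest" <<'EOF'
webhooks:
- name: ipaddresspoolvalidationwebhook.metallb.io
  failurePolicy: Fail
- name: l2advertisementvalidationwebhook.metallb.io
  failurePolicy: Fail
EOF

# same substitution as used in this article
sed -i 's/failurePolicy: Fail/failurePolicy: Ignore/' "$manifest"

# grep -c exits non-zero when the count is 0, so guard it with '|| true'
remaining=$(grep -c 'failurePolicy: Fail' "$manifest" || true)
ignored=$(grep -c 'failurePolicy: Ignore' "$manifest" || true)
echo "Fail=${remaining} Ignore=${ignored}"
```

Running the two grep counts against the downloaded manifest gives the same kind of check: the Fail count should be 0 before you run kubectl apply.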

Then create a MetalLB IP address pool to configure the IP addresses MetalLB should allocate.

# show ip address, including ens4 and ens5 which are used for MetalLB endpoints

ip a

# get MetalLB IPAddressPool template

wget https://raw.githubusercontent.com/fabianlee/k3s-cluster-kvm/main/roles/k3s-metallb/templates/metallb-ipaddresspool.yml.j2 -O metallb-ipaddresspool.yml

# change addresses to MetalLB endpoints

sed -i 's/{{metal_lb_primary}}-{{metal_lb_secondary}}/192.168.2.143-192.168.2.144/' metallb-ipaddresspool.yml

# apply

kubectl apply -f metallb-ipaddresspool.yml
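The template itself is not reproduced in this article. As a rough sketch of what an equivalent manifest could look like with the MetalLB 0.13 CRDs, the following writes an IPAddressPool plus an L2Advertisement to a temporary file. The object names `k3s-pool` and `k3s-l2` are hypothetical, and pairing the pool with a layer 2 advertisement is an assumption about the setup rather than a copy of the downloaded template.

```shell
pool_dir=$(mktemp -d)
pool_file="${pool_dir}/metallb-ipaddresspool-example.yml"

cat > "$pool_file" <<'EOF'
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: k3s-pool              # hypothetical name
  namespace: metallb-system
spec:
  addresses:
  - 192.168.2.143-192.168.2.144
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: k3s-l2                # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
  - k3s-pool
EOF

echo "wrote ${pool_file}; apply with: kubectl apply -f ${pool_file}"
```

The address range matches the two MetalLB endpoints substituted by the sed command above.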

Then validate the created objects.

# show created objects

$ kubectl get all -n metallb-system

NAME                              READY   STATUS    RESTARTS   AGE
pod/controller-68bf958bf9-rzk79   1/1     Running   0          78s
pod/speaker-dclw5                 1/1     Running   0          78s
pod/speaker-dd5dr                 1/1     Running   0          78s
pod/speaker-brp8k                 1/1     Running   0          78s

NAME                      TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
service/webhook-service   ClusterIP   10.43.47.62   <none>        443/TCP   79s

NAME                     DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
daemonset.apps/speaker   3         3         3       3            3           kubernetes.io/os=linux   79s

NAME                         READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/controller   1/1     1            1           79s

NAME                                    DESIRED   CURRENT   READY   AGE
replicaset.apps/controller-68bf958bf9   1         1         1       79s

$ kubectl get validatingwebhookconfiguration --field-selector metadata.name=metallb-webhook-configuration

NAME                            WEBHOOKS   AGE
metallb-webhook-configuration   7          15m

Enable primary NGINX ingress controller

Per the bare-metal NGINX ingress documentation, we will apply the manifest.

# downloads manifest

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.7.0/deploy/static/provider/cloud/deploy.yaml -O nginx-deploy.yaml

# replace 'NodePort' with 'LoadBalancer'

sed -i 's/type: NodePort/type: LoadBalancer/' nginx-deploy.yaml

# replace 'Deployment' with 'DaemonSet'

sed -i 's/kind: Deployment/kind: DaemonSet/' nginx-deploy.yaml

# create NGINX objects

$ kubectl apply -f nginx-deploy.yaml

namespace/ingress-nginx created
serviceaccount/ingress-nginx created
serviceaccount/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
configmap/ingress-nginx-controller created
service/ingress-nginx-controller created
service/ingress-nginx-controller-admission created
daemonset.apps/ingress-nginx-controller created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
ingressclass.networking.k8s.io/nginx created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

$ kubectl get Validatingwebhookconfigurations ingress-nginx-admission

NAME                      WEBHOOKS   AGE
ingress-nginx-admission   1          2m

# and finally disable the nginx validating webhook for k3s

$ kubectl get Validatingwebhookconfigurations ingress-nginx-admission -o=yaml | yq '.webhooks[].failurePolicy = "Ignore"' | kubectl apply -f -

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission configured

And now you can verify object creation by looking at the objects in the ‘ingress-nginx’ namespace.

$ kubectl get all -n ingress-nginx

NAME                                       READY   STATUS      RESTARTS   AGE
pod/ingress-nginx-admission-create-rt7kt   0/1     Completed   0          90s
pod/ingress-nginx-admission-patch-mfrkj    0/1     Completed   0          90s
pod/ingress-nginx-controller-clrn4         1/1     Running     0          90s
pod/ingress-nginx-controller-qfqmx         1/1     Running     0          90s
pod/ingress-nginx-controller-s6z8g         1/1     Running     0          90s

NAME                                         TYPE           CLUSTER-IP      EXTERNAL-IP     PORT(S)                      AGE
service/ingress-nginx-controller             LoadBalancer   10.43.157.182   192.168.2.143   80:32178/TCP,443:31841/TCP   90s
service/ingress-nginx-controller-admission   ClusterIP      10.43.195.217   <none>          443/TCP                      90s

NAME                                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
daemonset.apps/ingress-nginx-controller   3         3         3       3            3           kubernetes.io/os=linux   90s

NAME                                       COMPLETIONS   DURATION   AGE
job.batch/ingress-nginx-admission-create   1/1           14s        90s
job.batch/ingress-nginx-admission-patch    1/1           17s        90s

Notice:

- service/ingress-nginx-controller now has an external IP corresponding to our first MetalLB endpoint

- daemonset/ingress-nginx-controller does not use an explicit ‘--ingress-class=xxxx’ in its args, which means it defaults to the ingress class ‘nginx’. So its ingress (when created) will need to use the annotation ‘kubernetes.io/ingress.class: nginx’

Enable Secondary Ingress

To create a secondary ingress, I have put a DaemonSet definition on GitHub, which you can apply as shown below.

# apply DaemonSet that creates secondary ingress

wget https://raw.githubusercontent.com/fabianlee/k3s-cluster-kvm/main/roles/add_secondary_nginx_ingress/templates/nginx-ingress-secondary-controller-v1.7.0.yaml.j2 -O nginx-ingress-secondary-controller.yaml

# set namespace

sed -i 's/{{nginx_ns}}/ingress-nginx/' nginx-ingress-secondary-controller.yaml

$ kubectl apply -f nginx-ingress-secondary-controller.yaml

daemonset.apps/nginx-ingress-secondary-controller created

Then create the secondary ingress service.

$ wget https://raw.githubusercontent.com/fabianlee/k3s-cluster-kvm/main/roles/add_secondary_nginx_ingress/templates/nginx-ingress-secondary-service.yaml.j2 -O nginx-ingress-secondary-service.yaml

# set namespace and second MetalLB IP

$ sed -i 's/{{nginx_ns}}/ingress-nginx/' nginx-ingress-secondary-service.yaml

$ sed -i 's/loadBalancerIP: .*/loadBalancerIP: 192.168.2.144/' nginx-ingress-secondary-service.yaml

$ kubectl apply -f nginx-ingress-secondary-service.yaml

service/ingress-secondary created

Now you can see the secondary NGINX Daemonset and Services.

# see primary and secondary nginx controller

$ kubectl get ds -n ingress-nginx

NAME                                 DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
ingress-nginx-controller             3         3         3       3            3           kubernetes.io/os=linux   13m
nginx-ingress-secondary-controller   3         3         3       3            3           <none>                   4m15s

$ kubectl get services -n ingress-nginx

NAME                                 TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)                      AGE
ingress-nginx-controller-admission   ClusterIP      10.43.174.60   <none>          443/TCP                      13m
ingress-nginx-controller             LoadBalancer   10.43.27.211   192.168.2.143   80:32652/TCP,443:31400/TCP   13m
ingress-secondary                    LoadBalancer   10.43.77.104   192.168.2.144   80:30113/TCP,443:30588/TCP   106s

Notice:

- service/ingress-nginx-controller still has the external IP corresponding to our first MetalLB endpoint

- service/ingress-secondary now has an external IP corresponding to our second MetalLB endpoint

- daemonset/nginx-ingress-secondary-controller uses an explicit ‘--ingress-class=secondary’ in its args. So its ingress (when created) will need to use the annotation ‘kubernetes.io/ingress.class: secondary’
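To make the annotation concrete, here is a minimal sketch of an Ingress that would be picked up by the secondary controller only. It is not the manifest used later in this article. The object name `example-on-secondary` is hypothetical, the host, path, service name and port are borrowed from the test services deployed further down, and TLS is left out to keep the example short.

```shell
ingress_dir=$(mktemp -d)
ingress_file="${ingress_dir}/ingress-secondary-example.yaml"

cat > "$ingress_file" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-on-secondary            # hypothetical name
  namespace: default
  annotations:
    # must match the controller's --ingress-class value
    kubernetes.io/ingress.class: secondary
spec:
  rules:
  - host: k3s-secondary.local
    http:
      paths:
      - path: /myhello2/
        pathType: Prefix
        backend:
          service:
            name: golang-hello-world-web-service2
            port:
              number: 8080
EOF

echo "wrote ${ingress_file}"
```

Changing the annotation value to nginx would hand the same rule to the primary controller instead.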

Deploy test Services

To facilitate testing, we will deploy two independent Service and Deployment pairs.

- Service=golang-hello-world-web-service, Deployment=golang-hello-world-web

- Service=golang-hello-world-web-service2, Deployment=golang-hello-world-web2

These both use the same image fabianlee/docker-golang-hello-world-web:1.0.0, however they are completely independent deployments and pods.

# get definition of first service/deployment

wget https://raw.githubusercontent.com/fabianlee/k3s-cluster-kvm/main/roles/golang-hello-world-web/templates/golang-hello-world-web.yaml.j2

# apply first one

kubectl apply -f golang-hello-world-web.yaml.j2

# get definition of second service/deployment

wget https://raw.githubusercontent.com/fabianlee/k3s-cluster-kvm/main/roles/golang-hello-world-web/templates/golang-hello-world-web2.yaml.j2

# apply second one

kubectl apply -f golang-hello-world-web2.yaml.j2

# show both deployments and then pods

kubectl get deployments

kubectl get pods

These apps are now available at their internal pod IP address.

# check ClusterIP and port of first and second service

kubectl get services

# internal ip of primary pod

primaryPodIP=$(kubectl get pods -l app=golang-hello-world-web -o=jsonpath="{.items[0].status.podIPs[0].ip}")

# internal IP of secondary pod

secondaryPodIP=$(kubectl get pods -l app=golang-hello-world-web2 -o=jsonpath="{.items[0].status.podIPs[0].ip}")

# check pod using internal IP

curl http://${primaryPodIP}:8080/myhello/

# check pod using internal IP

curl http://${secondaryPodIP}:8080/myhello2/

With internal pod IP proven out, move up to the IP at the Service level.

# IP of primary service

primaryServiceIP=$(kubectl get service/golang-hello-world-web-service -o=jsonpath="{.spec.clusterIP}")

# IP of secondary service

secondaryServiceIP=$(kubectl get service/golang-hello-world-web-service2 -o=jsonpath="{.spec.clusterIP}")

# check primary service

curl http://${primaryServiceIP}:8080/myhello/

# check secondary service

curl http://${secondaryServiceIP}:8080/myhello2/

These validations proved out the pod and service independent of the NGINX ingress controller. Notice that all of them used insecure HTTP on port 8080, because TLS is layered on at the Ingress, which we configure in the following steps.

Create TLS key and certificate

Before we expose these services via Ingress, we must create the TLS keys and certificates that will be used when serving traffic.

- Primary ingress will use TLS with CN=k3s.local

- Secondary ingress will use TLS with CN=k3s-secondary.local

The best way to do this is with either a commercial certificate or your own custom CA and SAN certificates. But this article is striving for simplicity, so we will generate self-signed certificates using a small script I wrote.

# download and change script to executable

wget https://raw.githubusercontent.com/fabianlee/k3s-cluster-kvm/main/roles/cert-with-ca/files/k3s-self-signed.sh

chmod +x k3s-self-signed.sh

# run openssl commands that generate our key + certs in /tmp

./k3s-self-signed.sh

# change permissions so they can be read by normal user

sudo chmod go+r /tmp/*.{key,crt}

# show key and certs created

ls -l /tmp/k3s*

# create primary tls secret for 'k3s.local'

kubectl create -n ingress-nginx secret tls tls-credential --key=/tmp/k3s.local.key --cert=/tmp/k3s.local.crt

# create secondary tls secret for 'k3s-secondary.local'

kubectl create -n ingress-nginx secret tls tls-secondary-credential --key=/tmp/k3s-secondary.local.key --cert=/tmp/k3s-secondary.local.crt

# shows both tls secrets

kubectl get secrets --namespace ingress-nginx
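If you would rather not download the script, the same kind of key and certificate pair can be produced directly with openssl. This is a minimal sketch and not the contents of k3s-self-signed.sh. It assumes OpenSSL 1.1.1 or later (for the `-addext` option), writes into a temporary directory instead of /tmp, and the 2048-bit key size and 365-day validity are arbitrary choices for illustration.

```shell
certdir=$(mktemp -d)

for cn in k3s.local k3s-secondary.local; do
  # self-signed certificate with the CN also listed as a SAN entry
  openssl req -x509 -nodes -newkey rsa:2048 -days 365 \
    -subj "/CN=${cn}" \
    -addext "subjectAltName=DNS:${cn}" \
    -keyout "${certdir}/${cn}.key" \
    -out "${certdir}/${cn}.crt" 2>/dev/null

  # print the subject to confirm which certificate was written
  openssl x509 -in "${certdir}/${cn}.crt" -noout -subject
done

ls -l "$certdir"
```

The resulting .key and .crt files can be passed to the `kubectl create secret tls` commands above in place of the /tmp paths.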

Create NGINX Ingress objects

Finally, to make these services available to the outside world, we need to expose them via the NGINX Ingress and MetalLB addresses.

# create primary ingress

$ wget https://raw.githubusercontent.com/fabianlee/k3s-cluster-kvm/main/roles/nginx-ingress/files/golang-hello-world-web-on-nginx.yaml

$ kubectl apply -f golang-hello-world-web-on-nginx.yaml

ingress.networking.k8s.io/golang-hello-world-web-service created

# create secondary ingress

$ wget https://raw.githubusercontent.com/fabianlee/k3s-cluster-kvm/main/roles/nginx-ingress/files/golang-hello-world-web-on-nginx2.yaml

$ kubectl apply -f golang-hello-world-web-on-nginx2.yaml

ingress.networking.k8s.io/golang-hello-world-web-service2 created

# show primary and secondary Ingress objects
# these have annotations 'kubernetes.io/ingress.class: xxx'
# corresponding to the '--ingress-class' of the controller

$ kubectl get ingress --namespace default

NAME                              CLASS    HOSTS                 ADDRESS   PORTS     AGE
golang-hello-world-web-service    <none>   k3s.local                       80, 443   37s
golang-hello-world-web-service2   <none>   k3s-secondary.local             80, 443   33s

# shows primary and secondary ingress objects tied to MetalLB IP

$ kubectl get services --namespace ingress-nginx

NAME                                 TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)                      AGE
ingress-nginx-controller-admission   ClusterIP      10.43.174.60   <none>          443/TCP                      41m
ingress-nginx-controller             LoadBalancer   10.43.27.211   192.168.2.143   80:32652/TCP,443:31400/TCP   41m
ingress-secondary                    LoadBalancer   10.43.77.104   192.168.2.144   80:30113/TCP,443:30588/TCP   29m

Validate URL endpoints

The Ingress requires that the proper FQDN be sent in the Host header, so it is not sufficient to do a GET against the MetalLB IP addresses. You have two options:

- add the ‘k3s.local’ and ‘k3s-secondary.local’ entries to your local /etc/hosts file

- OR use the curl ‘--resolve’ flag to specify the FQDN to IP mapping which will send the host header correctly
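For the first option, the entries can be added idempotently so that re-running the step does not duplicate lines. This is a minimal sketch: `add_host_entry` is a hypothetical helper, the IP addresses are the two MetalLB endpoints from the service listing above, and by default it writes to a temporary file rather than touching the real /etc/hosts.

```shell
# Writes to a temp file by default; run as root with HOSTS_FILE=/etc/hosts to apply for real.
hosts_file=${HOSTS_FILE:-$(mktemp)}

# Hypothetical helper: append "<ip> <name>" unless the name is already present.
add_host_entry() {
  ip=$1
  name=$2
  if ! grep -q "[[:space:]]${name}\$" "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
}

add_host_entry 192.168.2.143 k3s.local
add_host_entry 192.168.2.144 k3s-secondary.local
add_host_entry 192.168.2.143 k3s.local   # repeated call is a no-op

cat "$hosts_file"
```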

Here is an example of pulling from the primary and secondary Ingress using entries in the /etc/hosts file.

# validate you have entries for 192.168.2.143 and .144

grep k3s /etc/hosts

# check primary ingress

$ curl -k https://k3s.local/myhello/

Hello, World
request 2 GET /myhello/
Host: k3s.local

# check secondary ingress

$ curl -k https://k3s-secondary.local/myhello2/

Hello, World
request 2 GET /myhello2/
Host: k3s-secondary.local
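The second option, curl with `--resolve`, avoids editing /etc/hosts at all. The sketch below is a hedged illustration: the `run` wrapper is a hypothetical helper that only prints each command unless RUN=1 is set, so it can be read and tried without a live cluster, and the IP addresses are the MetalLB endpoints from the service listing above.

```shell
# Print each command by default; set RUN=1 to execute against a live cluster.
run() {
  if [ "${RUN:-0}" = "1" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

primary_ip=192.168.2.143
secondary_ip=192.168.2.144

# --resolve takes HOST:PORT:ADDRESS, so the URL (and therefore the Host header) keeps the FQDN
run curl -k --resolve "k3s.local:443:${primary_ip}" https://k3s.local/myhello/
run curl -k --resolve "k3s-secondary.local:443:${secondary_ip}" https://k3s-secondary.local/myhello2/
```

Swapping the two IP addresses is a quick way to see the isolation: each controller only serves Ingress objects of its own class, so the request should no longer reach the hello-world application.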

Conclusion

Using this concept of multiple ingress, you can isolate traffic to different source networks, customers, and services.

Ansible playbook

If you would rather run all the steps from this article using Ansible, first set up the K3s cluster using my article here. Then run:

ansible-playbook playbook_metallb_nginx.yml

Then validate:

./test-nginx-endpoints.sh

REFERENCES

MetalLB installation by manifest

MetalLB changed from ConfigMap to CRD-based configuration at v0.13; this article uses the CRD-based 0.13.9

nginx ingress install with manifests

nginx ingress with multiple ingress controllers

kubernetes.github.io, nginx ingress considerations with bare metal and MetalLB

my ansible-role for creating custom ca and its cert cert-with-ca

github k3s-cluster-kvm, installing K3s on cluster of KVM guest hosts

github metallb, issue providing troubleshooting steps for webhook validation, checking caBundle

NOTES

show all the hooks available on validatingwebhook service

$ kubectl get Validatingwebhookconfigurations metallb-webhook-configuration -o=json | jq '.webhooks[].name'

addresspoolvalidationwebhook.metallb.io
ipaddresspoolvalidationwebhook.metallb.io
l2advertisementvalidationwebhook.metallb.io