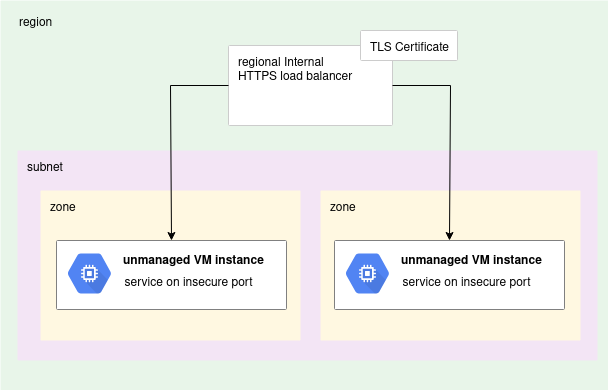

If you have unmanaged GCP VM instances running services on insecure ports (e.g. Apache HTTP on port 80), one way to secure the internal communication coming from other internal pods/apps is to create an internal GCP HTTPS load balancer.

Conceptually, we want to expose a secure front to otherwise insecure services.

While the preferred method would be to secure the client communication natively (easy enough using our Apache example), this is not always an option when dealing with unmanaged VM instances that contain legacy solutions.

This security stance follows the same model one might use on-premises, where either a commercial load balancer or NGINX/HAProxy serves as a secure front to an insecure backend pool of VMs.

Solution Overview

Our ultimate goal is to take two VM instances that only speak on insecure ports (Apache on port 80) and expose their services through a GCP internal HTTPS load balancer using HTTPS on port 443.

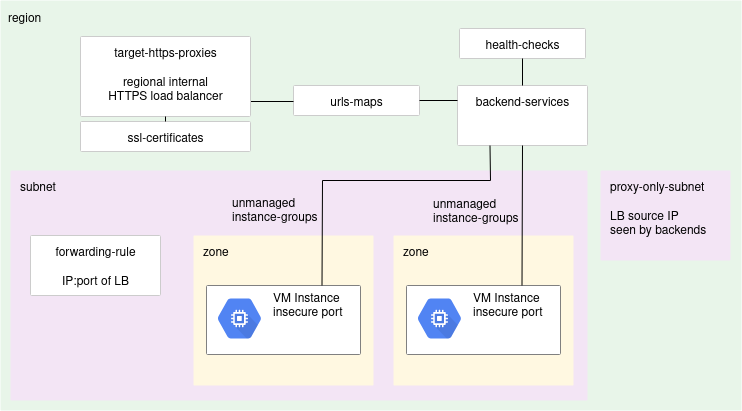

This can be accomplished by creating a backend service with an unmanaged instance group for the VMs in each zone, as shown in the diagram.

We then create and wire together the health-checks, backend-services, url-maps, target-https-proxies, and forwarding rule objects as shown in the diagram.

The rest of this article details the creation of these objects.

GCP network prerequisites

There are a couple of GCP network prerequisites for an internal HTTPS load balancer:

- Firewall rule to allow health checks

- Proxy-only subnet created in network

Firewall rule for health checks

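As noted in the Google documentation, health check probes originate from two Google source IP ranges: 35.191.0.0/16 and 130.211.0.0/22. The firewall rule below allows both ranges to reach any VM carrying the “allow-health-checks” tag.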

network_name=mynetwork

gcloud compute firewall-rules create fw-allow-health-checks \
  --network=${network_name} \
  --action=ALLOW \
  --direction=INGRESS \
  --source-ranges=35.191.0.0/16,130.211.0.0/22 \
  --target-tags=allow-health-checks \
  --rules=tcp

The VMs in this article “apache1-10-0-90-0” and “apache2-10-0-90-0” are both tagged with “allow-health-checks” to participate in this firewall rule.

gcloud compute instances add-tags apache1-10-0-90-0 --tags=allow-health-checks --zone=us-east1-b

gcloud compute instances add-tags apache2-10-0-90-0 --tags=allow-health-checks --zone=us-east1-c

Here is an example of creating the network firewall rule with Terraform, and another of tagging VM instances with Terraform by passing the tags in as variables.
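When looking at Apache access logs on the backend VMs, it can be handy to tell whether a source address belongs to the two health check ranges listed above. The following is a minimal sketch in plain bash; the helper names `ip_to_int`, `ip_in_cidr`, and `is_health_check_source` are hypothetical and not part of gcloud, and the sample addresses are made up for illustration.

```shell
#!/usr/bin/env bash

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  local IFS=.
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 if the address in $1 falls inside the CIDR block in $2.
ip_in_cidr() {
  local ip_int net_int prefix mask
  ip_int=$(ip_to_int "$1")
  net_int=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip_int & mask) == (net_int & mask) ))
}

# Return 0 if the address is inside either health check source range.
is_health_check_source() {
  local cidr
  for cidr in 35.191.0.0/16 130.211.0.0/22; do
    ip_in_cidr "$1" "$cidr" && return 0
  done
  return 1
}

# Illustrative addresses only.
for ip in 35.191.10.20 130.211.3.255 10.0.90.5; do
  if is_health_check_source "$ip"; then
    echo "$ip: inside a health check range"
  else
    echo "$ip: outside the health check ranges"
  fi
done
```

The same check can be reused to sanity-test a custom `--source-ranges` value before applying a firewall rule.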

Proxy-only subnet

Also required for a regional internal HTTPS load balancer is a subnet whose purpose is marked either with “INTERNAL_HTTPS_LOAD_BALANCER” or “REGIONAL_MANAGED_PROXY”.

This proxy-only subnet must have a prefix length of /26 or shorter, meaning it provides at least 64 addresses. The example command below creates it in “mynetwork”, which is the same network where the VMs are placed.

proxy_only_subnet_name=https-lb-only-subnet

region=us-east1

network_name=mynetwork

cidr_range="10.0.70.0/26"

gcloud compute networks subnets create $proxy_only_subnet_name \
  --purpose=INTERNAL_HTTPS_LOAD_BALANCER \
  --role=ACTIVE \
  --region=$region \
  --network=$network_name \
  --range=$cidr_range

I have an example of doing this with a Terraform module.
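The /26 minimum corresponds to 64 addresses. As a quick sanity check before creating the subnet, the arithmetic can be sketched in bash as follows; the function names `cidr_address_count` and `is_valid_proxy_only_range` are hypothetical helpers introduced only for this illustration.

```shell
#!/usr/bin/env bash

# Number of addresses in an IPv4 CIDR block: 2^(32 - prefix length).
cidr_address_count() {
  local prefix=${1#*/}
  echo $(( 1 << (32 - prefix) ))
}

# Succeed when the block provides at least 64 addresses (/26 or shorter).
is_valid_proxy_only_range() {
  (( $(cidr_address_count "$1") >= 64 ))
}

cidr_range="10.0.70.0/26"
echo "$cidr_range provides $(cidr_address_count "$cidr_range") addresses"
if is_valid_proxy_only_range "$cidr_range"; then
  echo "large enough for a proxy-only subnet"
else
  echo "too small for a proxy-only subnet"
fi
```

A /27, by comparison, yields only 32 addresses and would fail the check.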

Unmanaged VM instances running insecure service

For this article, we will have two unmanaged VM instances running Apache on port 80. These VMs are named:

- apache1-10-0-90-0 running in us-east1-a

- apache2-10-0-90-0 running in us-east1-b

Create unmanaged instance groups for the VMs in each zone, with a named port on 80.

project_id=$(gcloud config get project)

region=us-east1

instance_group_prefix=lb-ig

# puts object into region

location_flag="--region=us-east1"

ig_name=${instance_group_prefix}-a

gcloud compute instance-groups unmanaged create $ig_name --zone=${region}-a

gcloud compute instance-groups unmanaged set-named-ports $ig_name --named-ports=http:80 --zone=${region}-a

gcloud compute instance-groups unmanaged add-instances $ig_name --instances=apache1-10-0-90-0 --zone=${region}-a

ig_name=${instance_group_prefix}-b

gcloud compute instance-groups unmanaged create $ig_name --zone=${region}-b

gcloud compute instance-groups unmanaged set-named-ports $ig_name --named-ports=http:80 --zone=${region}-b

gcloud compute instance-groups unmanaged add-instances $ig_name --instances=apache2-10-0-90-0 --zone=${region}-b

Health check

Create a health check for the backend-service.

healthcheck_name=intlb-health

gcloud compute health-checks create http $healthcheck_name --host='' --request-path=/index.html --port=80 --enable-logging $location_flag

Backend Service

Create a backend service.

backend_name=intlb-backend

gcloud compute backend-services create $backend_name --health-checks=$healthcheck_name --health-checks-region=$region --port-name=http --protocol=HTTP --load-balancing-scheme=INTERNAL_MANAGED --enable-logging --logging-sample-rate=1 $location_flag

Then add its two unmanaged instance group members.

gcloud compute backend-services add-backend $backend_name --instance-group=${instance_group_prefix}-a --instance-group-zone=${region}-a $location_flag

gcloud compute backend-services add-backend $backend_name --instance-group=${instance_group_prefix}-b --instance-group-zone=${region}-b $location_flag
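Once both instance groups are attached, it is worth confirming that the health check actually passes before building the rest of the load balancer. The sketch below wraps `gcloud compute backend-services get-health` in a small function and counts the backends reporting HEALTHY. The function names are hypothetical, and the `healthState: HEALTHY` match assumes the default YAML output of `get-health`.

```shell
#!/usr/bin/env bash

# Print the health of every backend in a regional backend service.
# Arguments: backend service name, region.
show_backend_health() {
  gcloud compute backend-services get-health "$1" --region="$2"
}

# Count the backends reporting HEALTHY in get-health output read from stdin.
# Assumes the default YAML output, which contains "healthState: HEALTHY" lines.
count_healthy() {
  grep -c 'healthState: HEALTHY'
}

# Example, to be run where gcloud is installed and authenticated:
#   show_backend_health "$backend_name" "$region" | count_healthy
```

With the two Apache VMs from this article, a count of 2 would indicate both backends are passing. A lower count usually points back to the firewall rule, the network tags, or the request path used by the health check.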

SSL Certificate

Create the TLS certificate that will be used for secure communication. We will use a self-signed cert, but you are free to use a commercial one.

domain=httpslb.fabianlee.org

openssl req -x509 -nodes -days 3650 -newkey rsa:2048 \
  -keyout /tmp/$domain.key -out /tmp/$domain.crt \
  -subj "/C=US/ST=CA/L=SFO/O=myorg/CN=$domain"

Load the certificate into GCP.

gcloud compute ssl-certificates create lbcert1 --certificate=/tmp/$domain.crt --private-key=/tmp/$domain.key --project=$project_id $location_flag
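Before or after uploading, it can help to confirm that the certificate file carries the expected subject and validity period. This is a small sketch using `openssl x509`; the function name `show_cert_summary` is a hypothetical helper, and the path in the example comment assumes the certificate was written under /tmp as in the command above.

```shell
#!/usr/bin/env bash

# Print the subject and the notBefore/notAfter dates of a PEM certificate.
# Argument: path to the certificate file.
show_cert_summary() {
  openssl x509 -in "$1" -noout -subject -dates
}

# Example:
#   show_cert_summary /tmp/httpslb.fabianlee.org.crt
#
# The uploaded copy can be inspected on the GCP side with:
#   gcloud compute ssl-certificates describe lbcert1 --region=us-east1
```

The subject line should contain the same CN that clients will use as the hostname when they connect to the load balancer.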

URL Map

The URL map directs traffic based on the request. The name of the URL map object is what the cloud console shows as the load balancer name.

lb_name=intlb-lb1

gcloud compute url-maps create $lb_name --default-service=$backend_name $location_flag

Target HTTPS Proxies

This is the actual proxy-based load balancer entity.

target_https_proxy_name=intlb-target-https-proxy

gcloud compute target-https-proxies create $target_https_proxy_name --url-map-region=$region --url-map $lb_name --ssl-certificates-region=$region --ssl-certificates=lbcert1 $location_flag

Forwarding Rule

Finally, you must create the forwarding rule that exposes an IP address and port for the load balancer. A forwarding rule can only expose a single port.

fwd_rule_name=intlb-frontend

# subnet_name must already be set to the regular subnet that supplies the load balancer's internal IP (not the proxy-only subnet)

gcloud compute forwarding-rules create $fwd_rule_name --subnet-region=$region --load-balancing-scheme=INTERNAL_MANAGED --subnet=$subnet_name --network=$network_name --ports=443 --target-https-proxy=$target_https_proxy_name --target-https-proxy-region=$region --service-label=int $location_flag

The service-label creates an internal DNS entry for the load balancer in the following format:

echo "DNS: int.${fwd_rule_name}.il7.${region}.lb.${project_id}.internal"
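To check the finished load balancer end to end, one option is to look up the forwarding rule's IP and request a page over HTTPS from a VM in the same network and region. The sketch below is written under those assumptions. The function names are hypothetical, `my-project` is a placeholder project ID, and the `curl` call trusts the self-signed certificate file directly while using `--resolve` to map the certificate's hostname to the load balancer IP.

```shell
#!/usr/bin/env bash

# Build the internal DNS name produced by the service label.
# Arguments: service label, forwarding rule name, region, project ID.
lb_dns_name() {
  printf '%s.%s.il7.%s.lb.%s.internal\n' "$1" "$2" "$3" "$4"
}

# Look up the internal IP assigned to a regional forwarding rule.
# Arguments: forwarding rule name, region.
lb_ip() {
  gcloud compute forwarding-rules describe "$1" --region="$2" --format='value(IPAddress)'
}

# Request /index.html over HTTPS through the load balancer.
# Arguments: certificate hostname, load balancer IP, certificate file.
check_lb() {
  curl --silent --show-error --cacert "$3" --resolve "$1:443:$2" "https://$1/index.html"
}

# Placeholder project ID, for illustration only.
lb_dns_name int intlb-frontend us-east1 my-project

# Example, to be run from a VM inside the same network and region:
#   ip=$(lb_ip "$fwd_rule_name" "$region")
#   check_lb "$domain" "$ip" "/tmp/$domain.crt"
```

Using `--resolve` keeps certificate hostname verification intact even though the certificate's CN does not match the generated `.internal` DNS name.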

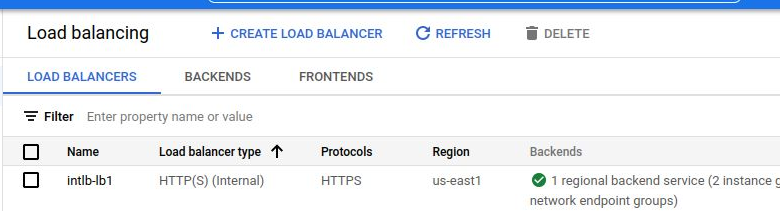

Cloud Console UI

Here is the view from the cloud console.

Script

If you want a full example, see my create-https-lb.sh on GitHub.

REFERENCES

google ref, L7 Internal HTTPS LB overview with diagrams

google ref, lb healthcheck source ranges needed for firewall rules

stackoverflow, creating https lb with diagram

google, google loadbalancing health checks

google, creating LB health checks

google, health check concepts described source ranges needed in firewall rules

chainerweb.com, loadbalancer and instancegroups for GCP lb with terraform

google, writing cloud functions

google, serverless NEG for cloud functions

google ref, gcloud unmanaged instance groups

google ref, gcloud backend-services

nbtechsolutions, gcp https lb with gcloud

xfthhxk, GCP external load balancer with gcloud for App Engine

realkinetic blog, GCP external loadbalancer with managed VM instances and gcloud

google ref, proxy-only subnet diagram

stackoverflow, good answer describing GCP LB constructs