Updated Aug 2023: using the latest K3s 1.27, MetalLB 0.13.9, and NGINX v1.8.1

K3s is a lightweight Kubernetes distribution from Rancher. It is fully compliant, yet compact enough to run on development boxes and edge devices.

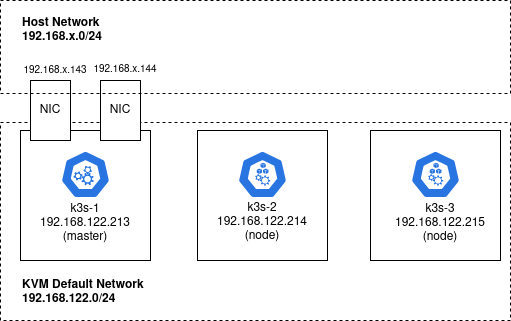

In this article, I will show you how to deploy a three-node K3s cluster on Ubuntu nodes that are created using Terraform and a local KVM libvirt provider. Ansible is then used to install the cluster.

Creating node VMs

We will deploy this K3s cluster on three independent guests running Ubuntu.

These Ubuntu VMs could actually be created using any hypervisor or hyperscaler, but for this article we will use Terraform and the local KVM libvirt provider to create guests named k3s-1, k3s-2, and k3s-3.

Prerequisites

- KVM/libvirt

- Terraform and the libvirt provider

- Ansible

- A ‘br0’ host bridge and a KVM ‘host-bridge’ network for the additional NIC on k3s-1

Create K3s guest OS on KVM

Use the scripts from my GitHub project to create the three Ubuntu guest VMs.

```bash
# required packages
sudo apt install make git curl -y

# github project with terraform to create guest OS
git clone https://github.com/fabianlee/k3s-cluster-kvm.git
cd k3s-cluster-kvm

# set first 3 octets of your host br0 network
# this is where MetalLB endpoints .143, .144 will be created
sed -i 's/metal_lb_prefix: .*/metal_lb_prefix: 192.168.1/' group_vars/all

# install OS packages and download galaxy collections
ansible-playbook install_dependencies.yml

# create ssh keypair for guest VM login as 'ubuntu'
cd tf-libvirt
ssh-keygen -t rsa -b 4096 -f id_rsa -C tf-libvirt -N "" -q
cd ..

# ensure that KVM default storage pool has group permissions
pool_dir=$(virsh pool-dumpxml default | grep -Po '<path>\K[^<]+')
sudo chgrp -R libvirt "$pool_dir"
sudo chmod g+rws "$pool_dir"

# check permissions of dir and files
sudo ls -ld "$pool_dir"
sudo ls -l "$pool_dir"

# invoke terraform apply
ansible-playbook playbook_terraform_kvm.yml
```
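To preview what the `sed` substitution does before touching the real `group_vars/all`, the sketch below runs the same expression against a scratch file and then derives the two MetalLB endpoint addresses from the prefix. The scratch file contents and both helper names are hypothetical; only the `metal_lb_prefix` key and the .143/.144 octets come from this article. It assumes GNU `sed -i`, as found on Ubuntu.

```bash
# Hypothetical helper: same substitution as above, applied to any file.
# $1 = file to edit, $2 = first three octets of the br0 network
set_metal_lb_prefix() {
  sed -i "s/metal_lb_prefix: .*/metal_lb_prefix: $2/" "$1"
}

# Hypothetical helper: print the two MetalLB endpoints (.143 and .144)
# for the prefix currently set in the given file.
metal_lb_endpoints() {
  local prefix
  prefix=$(sed -n 's/^metal_lb_prefix: //p' "$1")
  printf '%s.143\n%s.144\n' "$prefix" "$prefix"
}

# scratch copy with made-up contents, NOT the real group_vars/all
scratch=$(mktemp)
printf '%s\n' '# hypothetical sample' 'metal_lb_prefix: 10.0.0' > "$scratch"

set_metal_lb_prefix "$scratch" 192.168.1
metal_lb_endpoints "$scratch"
# prints:
# 192.168.1.143
# 192.168.1.144
```

If the printed addresses are not free on your br0 network, adjust the prefix before running the remaining steps.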

The KVM guests can be listed using virsh. I have embedded the IP address in the libvirt domain name to make the address obvious.

```
# should show three running K3s VMs
$ export LIBVIRT_DEFAULT_URI=qemu:///system
$ virsh list
 Id   Name                    State
--------------------------------------------
 ...
 10   k3s-1-192.168.122.213   running
 11   k3s-2-192.168.122.214   running
 12   k3s-3-192.168.122.215   running
```
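Because the IP address is embedded in each domain name, it can be recovered with plain text processing, for example to build the host list for the ssh checks. This sketch reads `virsh list` style output on stdin and assumes every domain follows the `<hostname>-<ip>` naming shown above; `domain_ips` is a hypothetical helper name, and the sample rows mirror the listing.

```bash
# Print "hostname ip" for every running k3s-* domain in `virsh list` output.
# Assumes domain names follow the <hostname>-<ip> pattern used in this article.
domain_ips() {
  awk '$3 == "running" && $2 ~ /^k3s-[0-9]+-/ {
    host = $2
    sub(/-[0-9.]+$/, "", host)          # strip the trailing -<ip> part
    ip = substr($2, length(host) + 2)   # whatever follows "<host>-"
    print host, ip
  }'
}

domain_ips <<'EOF'
 Id   Name                    State
--------------------------------------------
 10   k3s-1-192.168.122.213   running
 11   k3s-2-192.168.122.214   running
 12   k3s-3-192.168.122.215   running
EOF
# prints:
# k3s-1 192.168.122.213
# k3s-2 192.168.122.214
# k3s-3 192.168.122.215
```

On the KVM host, the same helper can be fed directly with `virsh list | domain_ips`.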

cloud-init has configured the ‘ubuntu’ user to accept the generated ssh key for login, so we can validate login to each host using the commands below.

```bash
# accept key as known_hosts
for octet in $(seq 213 215); do ssh-keyscan -H 192.168.122.$octet >> ~/.ssh/known_hosts; done

# test ssh into remote host
for octet in $(seq 213 215); do ssh -i tf-libvirt/id_rsa ubuntu@192.168.122.$octet "hostname -f; uptime"; done
```

K3s cluster installation overview

As discussed in detail in my other article, where the K3s cluster is deployed manually, K3s is first installed on the primary guest VM, which generates a node token.

Then K3s is installed on the additional worker nodes, passing the master IP address and node token as parameters so that they join the Kubernetes cluster.

The first guest ‘k3s-1’ will serve as the master, with k3s-2 and k3s-3 joining the Kubernetes cluster.
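For reference, the manual steps that the Ansible roles automate look roughly like this. The `get.k3s.io` install script, the `K3S_URL` and `K3S_TOKEN` environment variables, API port 6443, and the token path `/var/lib/rancher/k3s/server/node-token` follow the standard K3s quick-start conventions. The `k3s_join_cmd` helper is hypothetical and only prints the worker command rather than running it.

```bash
# On the master (k3s-1), K3s is installed with:
#   curl -sfL https://get.k3s.io | sh -
# and the join token is written to /var/lib/rancher/k3s/server/node-token

# Hypothetical helper: print (not run) the command a worker uses to join.
# $1 = master IP address, $2 = node token read from the master
k3s_join_cmd() {
  local master_ip=$1 token=$2
  printf 'curl -sfL https://get.k3s.io | K3S_URL=https://%s:6443 K3S_TOKEN=%s sh -\n' \
    "$master_ip" "$token"
}

# master address from this article, placeholder token
k3s_join_cmd 192.168.122.213 "<node-token>"
```

On a real cluster, the token would be read from the master first, for example with `ssh -i tf-libvirt/id_rsa ubuntu@192.168.122.213 "sudo cat /var/lib/rancher/k3s/server/node-token"`.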

Ansible configuration

In order for Ansible to do its work, we need to inform it of the inventory of guest VMs available and their group variables. Then we use an Ansible playbook to run the specific tasks and roles.

Ansible inventory

The available hosts as well as their group memberships are defined in ‘ansible_inventory.ini’.

```
$ cat ansible_inventory.ini
...
# K3s 'master'
[master]
k3s-1

# K3s hosts participating as worker nodes
[node]
k3s-2
k3s-3

# all nodes in K3s cluster
[k3s_cluster:children]
master
node
...
```
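The standard way to see how the groups resolve is `ansible-inventory -i ansible_inventory.ini --graph`. For a quick dependency-free check, the awk sketch below lists the entries under a single section of an INI inventory. It is a simplification: it does not expand `:children` groups or host ranges, and `inventory_group` is a hypothetical helper name.

```bash
# Print the entries listed under [<group>] in an INI inventory.
# Simplified: no :children expansion, no host ranges, host vars are dropped.
inventory_group() {
  local group=$1 file=$2
  awk -v want="[$group]" '
    /^[ \t]*$/    { next }                   # skip blank lines
    /^[ \t]*[#;]/ { next }                   # skip comments
    /^\[/ { in_group = ($1 == want); next }  # track current section header
    in_group { print $1 }                    # first field is the host name
  ' "$file"
}

# scratch copy of the inventory shown above
inv=$(mktemp)
cat > "$inv" <<'EOF'
# K3s 'master'
[master]
k3s-1

# K3s hosts participating as worker nodes
[node]
k3s-2
k3s-3

# all nodes in K3s cluster
[k3s_cluster:children]
master
node
EOF

inventory_group node "$inv"
# prints:
# k3s-2
# k3s-3
```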

Ansible group variables

The group variables are found in the ‘group_vars’ directory.

```bash
# list of group variables
$ ls group_vars
all  master

# show variables applying to every host
# notice that 'servicelb' and 'traefik' are disabled
# which allows us to pick MetalLB and custom ingress later
cat group_vars/all

# variables applying to just master
cat group_vars/master
```
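K3s skips its bundled components through the server flag `--disable <name>`, for example `--disable traefik --disable servicelb`. The sketch below assumes that `group_vars/all` passes these flags as text, for instance through a variable like `extra_server_args` (the name used by the upstream k3s-ansible role, but verify it against your copy). It confirms that both flags are present before deploying. `k3s_components_disabled` and the sample file are hypothetical.

```bash
# Hypothetical pre-flight check: succeed only if every named K3s component
# appears as "--disable <name>" or "--disable=<name>" in the given file.
k3s_components_disabled() {
  local file=$1 component
  shift
  for component in "$@"; do
    if ! grep -Eq -- "--disable[= ]${component}([^[:alnum:]-]|\$)" "$file"; then
      echo "not disabled: $component" >&2
      return 1
    fi
  done
}

# scratch file with an assumed variable layout, NOT the real group_vars/all
sample=$(mktemp)
printf '%s\n' 'extra_server_args: "--disable servicelb --disable traefik"' > "$sample"

k3s_components_disabled "$sample" servicelb traefik && echo "servicelb and traefik are disabled"
```

Against the real file, this would be `k3s_components_disabled group_vars/all servicelb traefik`.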

External Ansible playbook for K3s cluster

The playbook that deploys the K3s cluster executes a set of roles against the groups defined in the Ansible inventory. The primary roles come from k3s-ansible, a project maintained directly by the k3s-io team.

```
$ cat playbook_k3s.yml

- hosts: master
  become: yes
  roles:
    - role: k3s/master

- hosts: node
  become: yes
  roles:
    - role: k3s/node

...
```

We surround this with prerequisite and post-configuration steps that apply an additional NIC to the master, generate certificates, and finally install ‘k9s’, a terminal UI for Kubernetes management.

Install K3s cluster using Ansible

We can now have Ansible install the K3s cluster on our three guest VMs by invoking the playbook.

```bash
# check permissions on default data dir,
# AND establish sudo now, before next set of commands (to avoid prompt)
sudo ls -l "$pool_dir"

# run prerequisites, cluster deployment, post configuration
ansible-playbook playbook_k3s_prereq.yml
ansible-playbook playbook_k3s.yml
ansible-playbook playbook_k3s_post.yml
```
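The three playbooks must run in order, and if one fails there is no point running the next. A small wrapper that stops at the first failing playbook might look like the following. `run_k3s_playbooks` and the `PLAYBOOK_CMD` override are hypothetical; the playbook filenames are the ones used above.

```bash
# Hypothetical wrapper: run the prerequisite, deployment, and post-configuration
# playbooks in order, stopping at the first failure. Extra arguments (e.g. -v)
# are passed through to every run. PLAYBOOK_CMD is a hypothetical override that
# defaults to ansible-playbook; setting it to "echo" gives a dry run.
run_k3s_playbooks() {
  local cmd=${PLAYBOOK_CMD:-ansible-playbook} playbook
  for playbook in playbook_k3s_prereq.yml playbook_k3s.yml playbook_k3s_post.yml; do
    echo "==> $playbook"
    if ! "$cmd" "$playbook" "$@"; then
      echo "failed: $playbook" >&2
      return 1
    fi
  done
}
```

Typical use is `run_k3s_playbooks`, or `run_k3s_playbooks -v` for more output. `PLAYBOOK_CMD=echo run_k3s_playbooks` prints the sequence without running anything.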

Validate Kubernetes deployment to cluster

As a quick test of the Kubernetes cluster, create a test deployment of nginx, then check the pod status from the master node.

```
# deploy from k3s-1, deploys to entire cluster
$ ssh -i tf-libvirt/id_rsa ubuntu@192.168.122.213 "sudo kubectl create deployment nginx --image=nginx"
deployment.apps/nginx created

# show pod deployment
$ ssh -i tf-libvirt/id_rsa ubuntu@192.168.122.213 "sudo kubectl get pods -l app=nginx"
NAME                     READY   STATUS    RESTARTS   AGE
nginx-6799fc88d8-txcnh   1/1     Running   0          2m58s
```
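A script can check the READY column for you. The sketch below parses the default `kubectl get pods` table shown above (an assumption about the output layout) and succeeds only when there is at least one pod, every pod is `Running`, and all of its containers are ready. `all_pods_ready` is a hypothetical name. For a more robust check, kubectl's own `kubectl wait --for=condition=Ready pod -l app=nginx` or `kubectl rollout status deployment/nginx` are the built-in options.

```bash
# Succeed only if every pod row (after the header) is Running with all
# containers ready, e.g. READY "1/1". Fails when no pod rows are present.
all_pods_ready() {
  awk 'NR == 1 { next }              # skip the header row
       {
         rows++
         split($2, r, "/")           # READY column: ready/total
         if ($3 != "Running" || r[1] != r[2]) bad++
       }
       END { ok = (rows > 0 && bad == 0); exit !ok }'
}
```

Usage against the cluster could look like `ssh -i tf-libvirt/id_rsa ubuntu@192.168.122.213 "sudo kubectl get pods -l app=nginx" | all_pods_ready && echo "nginx is ready"`.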

Validate remotely using kubectl

The playbook_k3s_post.yml contains a role named ‘k3s-get-kubeconfig-local’ that copies the remote kubeconfig to a local file named ‘/tmp/k3s-kubeconfig’.

So if you have kubectl installed on your KVM host, you can also query the Kubernetes cluster remotely.

```
$ kubectl --kubeconfig /tmp/k3s-kubeconfig get pods -l app=nginx
NAME                     READY   STATUS    RESTARTS   AGE
nginx-6799fc88d8-txcnh   1/1     Running   0          4m24s
```
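On the master, K3s writes its kubeconfig to `/etc/rancher/k3s/k3s.yaml` with `server: https://127.0.0.1:6443`, which works only on that node. A copy used from the KVM host has to point at the master's address instead. The `k3s-get-kubeconfig-local` role presumably handles this already, so the sketch below only illustrates the idea on a scratch file, using a hypothetical `localize_kubeconfig` helper. Exporting `KUBECONFIG` afterwards saves repeating `--kubeconfig` on every command.

```bash
# Hypothetical helper: rewrite the loopback API server address in a copied
# K3s kubeconfig so kubectl on another machine reaches the master instead.
# $1 = kubeconfig file, $2 = master IP address
localize_kubeconfig() {
  sed -i "s#server: https://127\.0\.0\.1:6443#server: https://$2:6443#" "$1"
}

# demonstrate on a scratch file holding just the relevant line
kcfg=$(mktemp)
printf '    server: https://127.0.0.1:6443\n' > "$kcfg"
localize_kubeconfig "$kcfg" 192.168.122.213
cat "$kcfg"
# prints:
#     server: https://192.168.122.213:6443

# with the real file, export it once instead of passing --kubeconfig each time:
#   export KUBECONFIG=/tmp/k3s-kubeconfig
#   kubectl get pods -l app=nginx
```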

REFERENCES

k3s installation official docs

computingforgeeks, installing k3s on ubuntu

liquidweb.com, installing k3s on ubuntu

niveditacoder, installing k3s on ubuntu

osradar, installing k3s on ubuntu20

github collabnix, installing k3s on ubuntu18

ivankrizsan.se, traefik ingress example on k3s

longhorn docs, distributed filesystem

dmacvicar, terraform local libvirt provider

Loic Fache, whoami example deployment on traefik

metallb, k3s klipper must be disabled if you want to use MetalLB

rancher, k3s without traefik and with nginx ingress

suse, k3s without traefik and with nginx ingress

unixorn, k3s without traefik on ARM

mpolednik, k3s on ARM with nginx and metalLB

kubernetes, nginx ingress bare metal with NodePort (used by k3s)

NOTES

Enable verbosity for Ansible playbook

```bash
ANSIBLE_DEBUG=true ANSIBLE_VERBOSITY=4 ansible-playbook playbook_k3s.yml
```