Update Sep 2023: tested on Kubeadm 1.28, MetalLB v0.13.9, ingress-nginx v1.8.1

Kubeadm is a tool for quickly bootstrapping a minimal Kubernetes cluster.

In this article, I will show you how to deploy a three-node Kubeadm cluster on Ubuntu 22 nodes that are created using Terraform and a local KVM libvirt provider. Ansible is used for installation of the cluster.

Overview

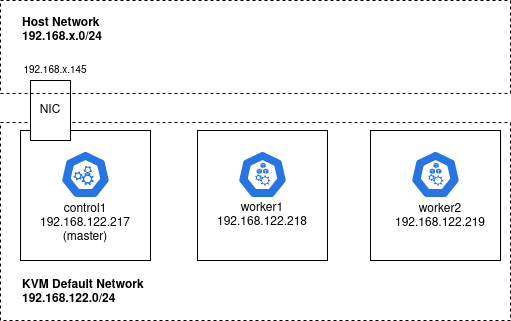

We will deploy this kubeadm cluster on three independent guest VMs from our local KVM hypervisor.

These Ubuntu VMs could actually be created using any hypervisor or hyperscaler, but for this article we will use Terraform and the local KVM libvirt provider to create guests named: control1, worker1, and worker2.

The master “control1” guest VM has an additional network interface on the same network as the host, where our deployments will be exposed via MetalLB and ingress-nginx.

Prerequisites

- Install Ansible on your Ubuntu host

- Install KVM on your Ubuntu host

- Install Terraform and its libvirt provider

- Create a ‘br0’ host bridge and KVM ‘host-bridge’ so that an additional NIC can be provisioned as explained in my previous article
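Before moving on, it can help to confirm that these prerequisites are actually in place. The sketch below is only a convenience check, not part of the project scripts. The function names are my own, and it assumes the tools are exposed as `ansible-playbook`, `terraform`, and `virsh` on the PATH and that the bridge is named 'br0' as above.

```shell
# Print each named command that is not found on PATH, one per line.
missing_commands() {
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
  done
}

# Report on the tools this article relies on (command names are assumptions).
check_host_prereqs() {
  missing=$(missing_commands ansible-playbook terraform virsh)
  if [ -n "$missing" ]; then
    printf 'missing commands:\n%s\n' "$missing" >&2
    return 1
  fi
  # 'ip link show br0' fails if the bridge does not exist
  if ! ip link show br0 >/dev/null 2>&1; then
    echo "host bridge 'br0' not found" >&2
    return 1
  fi
  echo "host prerequisites look present"
}
```

Run `check_host_prereqs` after sourcing the snippet. A non-zero return means something from the list above still needs to be installed or created.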

Download project

Download my kubeadm-cluster-kvm GitHub project for the supporting scripts.

# required packages
sudo apt install make git curl -y

# github project with terraform to create guest OS
git clone https://github.com/fabianlee/kubeadm-cluster-kvm.git
cd kubeadm-cluster-kvm

Create ssh keypair

The guest VMs will need a public/private keypair for ssh login. Generate one with the commands below.

# make ssh keypair for guest VM login as 'ubuntu'
cd tf-libvirt
ssh-keygen -t rsa -b 4096 -f id_rsa -C tf-libvirt -N "" -q
cd ..
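If you rerun these steps, ssh-keygen will stop and ask whether to overwrite the existing key. A small guard avoids that prompt. This is a sketch with a hypothetical function name, using the same key path and ssh-keygen options as above.

```shell
# Generate the keypair only when it does not exist yet.
# Usage: ensure_keypair [private-key-path]
ensure_keypair() {
  key=${1:-tf-libvirt/id_rsa}
  if [ -f "$key" ]; then
    echo "keypair already exists at $key"
    return 0
  fi
  # same options as the manual command above
  ssh-keygen -t rsa -b 4096 -f "$key" -C tf-libvirt -N "" -q
}
```

Calling `ensure_keypair` from the project root leaves an existing key untouched, so the guests keep accepting the key they were created with.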

Install host prerequisites

The host needs a base set of OS packages from apt, Python pip modules, and Ansible galaxy modules.

./install_requirements.sh

Create KVM guest VM instances

Using Terraform and the local libvirt provider, we will now create the local Ubuntu Guest VMs: control1, worker1, worker2 in the default KVM network 192.168.122.0/24.

ansible-playbook playbook_terraform_kvm.yml

As an example of gaining ssh access to the ‘control1’ guest VM instance, you can use the following command.

ssh -i tf-libvirt/id_rsa ubuntu@192.168.122.217 "uptime"
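To check all three guests in one pass, the same ssh command can be wrapped in a loop. This is a rough sketch: the function name is mine, and because only the 'control1' address is shown above, the guest IP addresses are passed in as arguments rather than hard-coded. The 'accept-new' host key option assumes a reasonably recent OpenSSH client.

```shell
# Run 'uptime' over ssh on each guest IP given as an argument.
# Prints "<ip> ok" or "<ip> unreachable"; returns non-zero if any guest failed.
check_guests_ssh() {
  failed=0
  for ip in "$@"; do
    if ssh -i tf-libvirt/id_rsa -o BatchMode=yes -o ConnectTimeout=5 \
         -o StrictHostKeyChecking=accept-new \
         "ubuntu@$ip" uptime >/dev/null 2>&1; then
      echo "$ip ok"
    else
      echo "$ip unreachable"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, `check_guests_ssh 192.168.122.217` tests 'control1'. Append the addresses of your two worker guests to test them as well.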

Prepare guest VM instances

Each guest VM needs to be configured with a container runtime, OS settings, kubeadm/kubelet binaries, etc. to prepare for the kubeadm deployment.

ansible-playbook playbook_kubeadm_dependencies.yml

Deploy kubeadm

Prepare each guest VM with the proper OS settings and packages.

ansible-playbook playbook_kubeadm_prereq.yml

Install the kubeadm control plane on the ‘control1’ guest VM instance.

ansible-playbook playbook_kubeadm_controlplane.yml

Then have the ‘worker1’ and ‘worker2’ guest VM instances join the master control plane.

ansible-playbook playbook_kubeadm_workers.yml

As part of the playbooks above, the kubeconfig file for the cluster has been downloaded to the host at “/tmp/kubeadm-kubeconfig”, and you can test access to the kube api endpoint on control1:6443 using the following commands.

export KUBECONFIG=/tmp/kubeadm-kubeconfig

# wait until all pods are in 'Running' state
kubectl get pods -A
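Instead of rerunning the command by hand, the wait can be scripted. The sketch below is one possible approach, with function names of my own choosing. It assumes the default column layout of `kubectl get pods -A --no-headers`, where the fourth column is the pod status, and it treats 'Completed' pods as finished.

```shell
# Read 'kubectl get pods -A --no-headers' output on stdin and print
# "namespace/name status" for every pod that is not Running or Completed.
pods_not_ready() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Poll every 10 seconds until no such pods remain, or give up after N tries.
# Usage: wait_for_pods [tries]
wait_for_pods() {
  tries=${1:-30}
  while [ "$tries" -gt 0 ]; do
    # an empty or failed listing means the API server is not answering yet
    if pods=$(kubectl get pods -A --no-headers 2>/dev/null) && [ -n "$pods" ]; then
      pending=$(printf '%s\n' "$pods" | pods_not_ready)
      if [ -z "$pending" ]; then
        return 0
      fi
      printf 'still waiting on:\n%s\n' "$pending"
    fi
    tries=$((tries - 1))
    sleep 10
  done
  return 1
}
```

Note that 'Running' only reflects the pod phase, so a pod can be listed as Running while its READY column still shows 0/1.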

Deploy MetalLB and NGINX Ingress

To expose services from this cluster, we will use MetalLB to expose the additional NIC (.145) from ‘control1’.

ansible-playbook playbook_metallb.yml
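For context, MetalLB v0.13 is configured through the IPAddressPool and L2Advertisement custom resources. The sketch below renders a pool containing a single address, which is roughly the kind of manifest needed to hand out one IP. It is an illustration, not the manifest the playbook applies: the resource names 'ingress-pool' and 'ingress-l2' are hypothetical, and the address is passed in as an argument.

```shell
# Print a MetalLB layer 2 configuration that hands out exactly one address.
# Usage: render_metallb_pool <ip-address>
render_metallb_pool() {
  cat <<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: ingress-pool
  namespace: metallb-system
spec:
  addresses:
  - $1/32
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: ingress-l2
  namespace: metallb-system
spec:
  ipAddressPools:
  - ingress-pool
EOF
}
```

The output could be piped to `kubectl apply -f -` on a cluster where MetalLB is already installed, substituting the full address of the additional NIC for the argument.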

Then deploy ingress-nginx to expose the service. But first we load the ‘tls-credential’ secret that provides a certificate+key for TLS communication to https://kubeadm.local

ansible-playbook playbook_certs.yml
ansible-playbook playbook_nginx_ingress.yml

Wait for the nginx admission and controller pods to be ready, then verify that MetalLB has provided the NGINX Ingress with the .145 IP address by pulling the LB status. This can take a few minutes to populate.

# wait for admission to be 'Completed', controller 'Running'

kubectl get pods -n ingress-nginx

# wait for non-empty IP address

kubectl get service -n ingress-nginx ingress-nginx-controller -o jsonpath="{.status.loadBalancer.ingress}"
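Since the address can take a few minutes to appear, a polling loop saves repeated manual checks. This is a minimal sketch with a hypothetical function name. It queries the first entry of the load balancer status and treats a failed or empty lookup as "not assigned yet".

```shell
# Poll the ingress-nginx controller service until MetalLB assigns an IP.
# Usage: wait_for_lb_ip [tries] [seconds-between-tries]
wait_for_lb_ip() {
  tries=${1:-30}
  delay=${2:-10}
  while [ "$tries" -gt 0 ]; do
    # kubectl errors while the ingress list is still empty, so ignore failures
    ip=$(kubectl get service -n ingress-nginx ingress-nginx-controller \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null) || ip=""
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    tries=$((tries - 1))
    sleep "$delay"
  done
  echo "no load balancer IP assigned" >&2
  return 1
}
```

With the defaults this waits up to about five minutes, and `lb_ip=$(wait_for_lb_ip)` captures the address once it is available.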

Deploy hello world service

With the Cluster, MetalLB load balancer, and nginx Ingress in place, we are now prepared to create and expose our hello world service.

ansible-playbook playbook_deploy_myhello.yml

To test this service from inside the container itself, use the wget binary that is contained in the image.

# wait until deployment is ready
$ kubectl get deployment golang-hello-world-web -n default

# test from inside container
$ kubectl exec -it deployment/golang-hello-world-web -- wget -q -O- http://localhost:8080/myhello/
Hello, World
request 1 GET /myhello/
Host: localhost:8080

Test hello world service

To test from outside the container, we use the additional NIC (.145) located on ‘control1’. This IP address is located on the same network as our host, and MetalLB has given it to the NGINX Ingress.

lb_ip=$(kubectl get service -n ingress-nginx ingress-nginx-controller -o jsonpath="{.status.loadBalancer.ingress[].ip}")

# test without needing DNS

$ curl -k --resolve kubeadm.local:443:${lb_ip} https://kubeadm.local:443/myhello/

Hello, World

request 1 GET /myhello/

Host: kubeadm.local

# OR test by adding DNS to local hosts file

echo ${lb_ip} kubeadm.local | sudo tee -a /etc/hosts

# use k flag because custom CA cert is not trusted at OS level

$ curl -k https://kubeadm.local/myhello/

Hello, World

request 2 GET /myhello/

Host: kubeadm.local
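One caveat with the hosts file approach: rerunning the `tee -a` command appends a duplicate line each time. The sketch below only appends when the name is not already mapped. The function name and the optional third argument (an alternate hosts file, handy for a dry run) are my own additions.

```shell
# Append "<ip> <name>" to a hosts file only if <name> is not already mapped.
# Usage: add_hosts_entry <ip> <name> [hosts-file]
add_hosts_entry() {
  ip=$1
  name=$2
  file=${3:-/etc/hosts}
  # look for an exact hostname match on any non-comment line
  if awk -v n="$name" '
       !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$file" 2>/dev/null; then
    echo "$name already present in $file"
  else
    echo "$ip $name" | sudo tee -a "$file" >/dev/null
  fi
}
```

For example, `add_hosts_entry "${lb_ip}" kubeadm.local` can be run repeatedly without growing /etc/hosts. It does not update an existing entry, so if the load balancer IP changes, the old line has to be edited by hand.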

NOTES

kubectl to report on underlying CRI

# if docker '/var/run/dockershim.sock'

# if containerd '/var/run/containerd/containerd.sock'

kubectl get nodes -o=jsonpath="{range .items[*]}{.metadata.annotations.kubeadm\.alpha\.kubernetes\.io/cri-socket}{'\n'}{end}"

Use kubeadm to show underlying CRI

kubeadm config print init-defaults | grep criSocket
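Another option that does not depend on the kubeadm annotation is the container runtime version that every kubelet reports in its node status. A small sketch, with a function name of my own:

```shell
# Print "<node-name><TAB><container runtime version>" for every node.
node_runtimes() {
  kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.containerRuntimeVersion}{"\n"}{end}'
}
```

The same value appears in the CONTAINER-RUNTIME column of `kubectl get nodes -o wide`. It identifies the runtime and its version, but not the socket path.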