At some point, there will be a system change significant enough that a maintenance window needs to be scheduled with customers. But that doesn’t mean the end-user traffic or client integrations will stop requesting the services.

What we need to present to end-users is a maintenance page during this outage to indicate the overall solution is not available.

An in-cluster service could be appropriate if this change would not cause cluster-wide or service-level interruptions. But if the availability cannot be ensured, then one way to address this is by rerouting all traffic from the HTTPS LB to an independent Cloud Run app or Cloud Function that delivers a maintenance page and code.

We assume in this article that the GKE cluster is using Anthos Service Mesh/Istio and fronted by a public GCP HTTPS LoadBalancer created by an Ingress object. The solution in this article can use either an independent Cloud Run app or Cloud Function service to create a serverless NEG that can be swapped at the target-https-proxies level as illustrated below.

LoadBalancer Solution Overview

A GCP global external HTTP Load Balancer is composed of multiple resources as described in the documentation.

To capture all incoming requests to our public GCP HTTPS LB and direct them to our maintenance Cloud Run app or Cloud Function, we will modify the existing target-https-proxies object. Instead of pointing at the url-maps object of your GKE cluster, we will point it at the url-maps object of the maintenance backend service, as shown below.

You may question why we are modifying at this level, and not instead modifying the url-maps default service OR updating the membership or capacity/rate levels of the backend-services to make this change.

We could make this change by instead modifying the existing url-maps default service, but non-trivial production configurations have additional path-matcher and host rules that would need to be removed. This introduces complexity.

However, we cannot make this change at the backend-services level, because the backend-services for a serverless NEG cannot have a health check, while the backend-services for an unmanaged instance group or regular NEG requires one. So even if we were willing to do the heavy work of shifting membership and rates/capacity of the backend service, that approach is not technically viable.

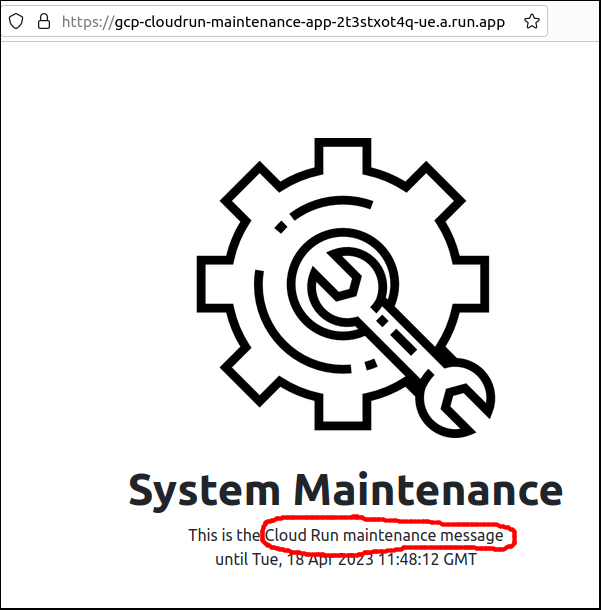
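To make the layering concrete, here is a minimal sketch that walks from a target-https-proxies object to its url-maps object and on to that map's default backend-service. The function name `show_lb_chain` and the `GCLOUD` override variable are illustrative assumptions, not part of the original walkthrough, and the sketch assumes a global url-map.

```shell
# GCLOUD can be overridden (for example with a stub) when testing;
# it defaults to the real CLI.
GCLOUD="${GCLOUD:-gcloud}"

# usage: show_lb_chain <target-https-proxy-name>
# Prints the proxy, the url-map it points at, and that map's default backend.
show_lb_chain() {
  local proxy="$1" url_map backend
  # the proxy holds a full link to its url-map
  url_map=$($GCLOUD compute target-https-proxies describe "$proxy" \
    --format="value(urlMap)") || return 1
  # ${url_map##*/} strips everything up to the last '/', leaving the short name
  backend=$($GCLOUD compute url-maps describe "${url_map##*/}" \
    --format="value(defaultService)") || return 1
  echo "target-https-proxy: $proxy"
  echo "url-map: ${url_map##*/}"
  echo "default backend-service: ${backend##*/}"
}
```

Because the proxy holds a single url-maps reference, changing that one field redirects every host and path rule at once, which is why the swap is done at this level.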

Independent backend #1, Cloud Run maintenance app

The first independent maintenance application we will create is a Python-based Cloud Run application.

# grab project code

git clone https://github.com/fabianlee/gcp-cloudrun-maintenance-app.git

cd gcp-cloudrun-maintenance-app

# deploy and do test curl

$ ./deploy_to_cloudrun.sh

...

Cloud Run URL: https://gcp-cloudrun-maintenance-app-2t3stxot4q-ue.a.run.app

...

From a browser, the Cloud Run app looks like below.

With the deployment proven, we create a regional Network Endpoint Group from the Cloud Run application that allows us to create a backend-service and url-map.

# show Cloud Run apps

gcloud run services list

# setup variables

app_name=gcp-cloudrun-maintenance-app

# get region of Cloud Run app

region=$(gcloud run services list --format="value(metadata.labels)" --filter="metadata.name = $app_name" | grep -Po "location=\K[^;]*")

gcloud run services describe $app_name --region=$region

# create regional serverless NEG from Cloud Run app

gcloud compute network-endpoint-groups create ${app_name}-neg --region=$region --network-endpoint-type=serverless --cloud-run-service=$app_name

gcloud compute network-endpoint-groups list

# backend is global to match global GCP LB

gcloud compute backend-services create ${app_name}-backend --load-balancing-scheme=EXTERNAL --global

gcloud compute backend-services add-backend ${app_name}-backend --global --network-endpoint-group=${app_name}-neg --network-endpoint-group-region=$region

# show backend

gcloud compute backend-services list --global

gcloud compute backend-services describe ${app_name}-backend --global --format=json

# create global url-map (shows up as load balancer name in console web UI)

gcloud compute url-maps create ${app_name}-lb1 --default-service=${app_name}-backend --global

gcloud compute url-maps add-path-matcher ${app_name}-lb1 --path-matcher-name=${app_name}-hostmatcher --path-rules="/*=${app_name}-backend" --default-service=${app_name}-backend --global

gcloud compute url-maps describe ${app_name}-lb1

# get url-map link

cloud_run_url_map_link=$(gcloud compute url-maps describe ${app_name}-lb1 --format="value(selfLink)")

echo "cloud_run_url_map_link = $cloud_run_url_map_link"

This ‘cloud_run_url_map_link’ is the value we will later substitute into the target-https-proxies to swap the load balancer traffic.
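The region lookup earlier in this section relies on `grep -P`, which is a GNU grep feature and is not available in the BSD grep shipped with macOS. A minimal sketch of a portable alternative using `sed` follows. The helper name `region_from_labels` is hypothetical, and the sample label strings in the comments are assumed shapes rather than captured gcloud output.

```shell
# Read a label string on stdin and print the value of the 'location' label.
# Assumed input shapes (not captured output):
#   cloud.googleapis.com/location=us-east1
#   somekey=somevalue;cloud.googleapis.com/location=us-east1;other=x
region_from_labels() {
  # capture everything after 'location=' up to the next ';' (or end of line)
  sed -n 's/.*location=\([^;]*\).*/\1/p'
}

# usage sketch (not executed here):
# region=$(gcloud run services list --format="value(metadata.labels)" \
#   --filter="metadata.name = $app_name" | region_from_labels)
```

Stopping at the first `;` matters when the service carries more than one label, since otherwise the trailing labels would end up inside the region value.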

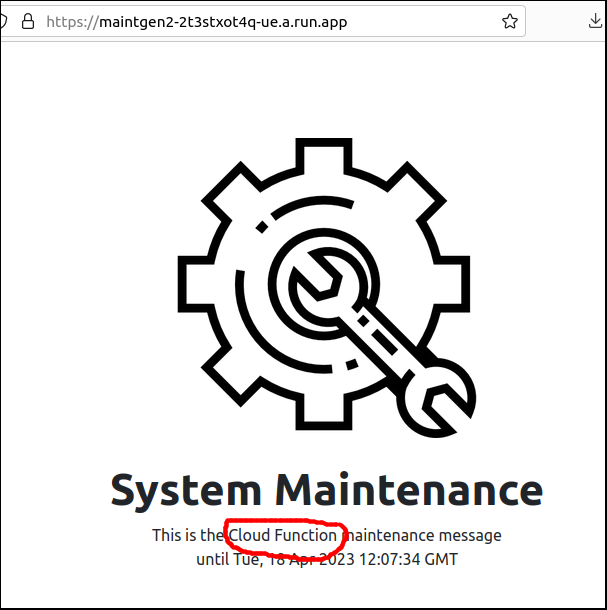

Independent backend #2, Cloud Function maintenance service

The second independent maintenance service we will create is a Python-based Cloud Function.

# get project code

git clone https://github.com/fabianlee/gcp-https-lb-vms-cloudfunc.git

cd gcp-https-lb-vms-cloudfunc

cd roles/gcp-cloud-function-gen2/files

# deploy and do test curl

$ ./deploy_to_cloud_function.sh

...

Cloud Function at: https://maintgen2-2t3stxot4q-ue.a.run.app

...

From a browser, the Cloud Function looks like below.

With the deployment proven, we create a regional Network Endpoint Group from the Cloud Function that allows us to create a backend-service and url-map.

# show Cloud Functions

gcloud functions list

# notice Function also has entry in Cloud Run list

gcloud run services list

# setup variables

app_name=maintgen2

# get region of Cloud Function, available from its Cloud Run object

region=$(gcloud run services list --format="value(metadata.labels)" --filter="metadata.name = $app_name" | grep -Po "location=\K[^;]*")

gcloud functions describe $app_name --region=$region

# create regional serverless NEG from Cloud Function

gcloud compute network-endpoint-groups create ${app_name}-neg --region=$region --network-endpoint-type=serverless --cloud-function-name=$app_name

gcloud compute network-endpoint-groups list

# backend is global to match global GCP LB

gcloud compute backend-services create ${app_name}-backend --load-balancing-scheme=EXTERNAL --global

gcloud compute backend-services add-backend ${app_name}-backend --global --network-endpoint-group=${app_name}-neg --network-endpoint-group-region=$region

# show backend

gcloud compute backend-services list --global

gcloud compute backend-services describe ${app_name}-backend --global --format=json

# create global url-map (shows up as load balancer name in console web UI)

gcloud compute url-maps create ${app_name}-lb1 --default-service=${app_name}-backend --global

gcloud compute url-maps add-path-matcher ${app_name}-lb1 --path-matcher-name=${app_name}-hostmatcher --path-rules="/*=${app_name}-backend" --default-service=${app_name}-backend --global

gcloud compute url-maps describe ${app_name}-lb1

# get url-map link

cloud_function_url_map_link=$(gcloud compute url-maps describe ${app_name}-lb1 --format="value(selfLink)")

echo "cloud_function_url_map_link = $cloud_function_url_map_link"

This ‘cloud_function_url_map_link’ is the value we will later substitute into the target-https-proxies to swap the load balancer traffic.
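The Cloud Run and Cloud Function walkthroughs differ only in the flag used to create the serverless NEG, so the shared steps can be sketched as one helper. This is an illustrative sketch, not the author's script: the names `run` and `create_maintenance_url_map` and the `DRY_RUN` variable are assumptions, and the optional add-path-matcher step is left out because the url-map default service already catches all requests. By default it only prints the commands. Set `DRY_RUN=0` to execute them.

```shell
# run: print the command when DRY_RUN=1 (the default here), otherwise execute it
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# usage: create_maintenance_url_map <app_name> <region> <run|function>
create_maintenance_url_map() {
  local app_name="$1" region="$2" kind="$3" neg_flag
  case "$kind" in
    run)      neg_flag="--cloud-run-service=$app_name" ;;
    function) neg_flag="--cloud-function-name=$app_name" ;;
    *) echo "kind must be 'run' or 'function'" >&2; return 2 ;;
  esac
  # regional serverless NEG
  run gcloud compute network-endpoint-groups create "${app_name}-neg" \
    --region="$region" --network-endpoint-type=serverless "$neg_flag" || return 1
  # global backend-service, then attach the NEG to it
  run gcloud compute backend-services create "${app_name}-backend" \
    --load-balancing-scheme=EXTERNAL --global || return 1
  run gcloud compute backend-services add-backend "${app_name}-backend" --global \
    --network-endpoint-group="${app_name}-neg" \
    --network-endpoint-group-region="$region" || return 1
  # global url-map whose default service is the maintenance backend
  run gcloud compute url-maps create "${app_name}-lb1" \
    --default-service="${app_name}-backend" --global || return 1
}

# usage sketch, region is an example value:
# create_maintenance_url_map maintgen2 us-east1 function
```

Reviewing the printed commands before a real run is a cheap way to catch a wrong app name or region ahead of the maintenance window.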

Swap url-maps to change Load balancer traffic during maintenance

Save current Load Balancer settings

The first step is to save the current url-maps link, so traffic can be re-routed after the maintenance period is over.

# view Ingress objects deployed to GKE cluster

kubectl get Ingress -A

# select the 'Ingress' object representing your target LB

k8s_ingress_ns=<namespaceOfIngress>

k8s_ingress_name=<nameOfIngress>

kubectl get ingress $k8s_ingress_name -n $k8s_ingress_ns

# show load balancer used by cluster Ingress

lb_name=$(kubectl get ingress -n $k8s_ingress_ns $k8s_ingress_name -o=custom-columns=LB:".metadata.annotations.ingress\.kubernetes\.io/url-map" --no-headers)

lb_ip_name=$(kubectl get ingress $k8s_ingress_name -n $k8s_ingress_ns -o=jsonpath="{.metadata.annotations.kubernetes\.io/ingress\.global-static-ip-name}")

echo "cluster is using GCP LB name/IP name: $lb_name/$lb_ip_name"

gcloud compute url-maps list

gcloud compute url-maps describe $lb_name

# show target-proxies used by LB

target_proxy_name=$(kubectl get ingress -n $k8s_ingress_ns $k8s_ingress_name -o=custom-columns=LB:".metadata.annotations.ingress\.kubernetes\.io/https-target-proxy" --no-headers)

echo "cluster is using target proxy: $target_proxy_name"

gcloud compute target-https-proxies describe $target_proxy_name

# show url-map used by LB

saved_url_map=$(gcloud compute target-https-proxies describe $target_proxy_name --format="value(urlMap)")

echo "saved_url_map = $saved_url_map"

This ‘saved_url_map’ value is the one we will restore in the target-https-proxies when the maintenance period is over.
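Since a maintenance window can outlast a terminal session, it may be worth persisting these values rather than keeping them only in shell variables. The following is a minimal sketch. The function name `save_lb_state` and the file path in the usage comment are hypothetical, and it assumes the values contain no single quotes, which holds for GCP resource names and links.

```shell
# usage: save_lb_state <file>
# Writes the variables gathered above to a file that can be sourced later.
save_lb_state() {
  # refuse to write a state file that could not be used for a restore
  if [ -z "$saved_url_map" ] || [ -z "$target_proxy_name" ]; then
    echo "saved_url_map and target_proxy_name must be set" >&2
    return 1
  fi
  cat > "$1" <<EOF
k8s_ingress_ns='${k8s_ingress_ns}'
k8s_ingress_name='${k8s_ingress_name}'
lb_ip_name='${lb_ip_name}'
target_proxy_name='${target_proxy_name}'
saved_url_map='${saved_url_map}'
EOF
}

# usage sketch (hypothetical path):
# save_lb_state ./lb-state.env
# ...later, in a new shell...
# . ./lb-state.env
```

The guard on empty values is there because restoring with an empty url-map link would leave the load balancer pointing at the maintenance page.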

Disable Kubernetes Ingress to GCP synchronization

The Kubernetes Ingress object periodically synchronizes its expected values to the GCP LoadBalancer. If this is left enabled it will revert the changes we are making to the underlying target-https-proxies, so we need to pause this synchronization process.

The easiest way to do this is to intentionally “break” it by setting an incorrect value in the Ingress annotations. This will halt the Ingress synchronization.

echo "existing name of global LB IP is: $lb_ip_name"

# overwrite annotation with non-existent name

kubectl annotate --overwrite ingress $k8s_ingress_name -n $k8s_ingress_ns 'kubernetes.io/ingress.global-static-ip-name'=DOESNOTEXIST

# will now say 'Error syncing to GCP', which is what we want

kubectl describe ingress $k8s_ingress_name -n $k8s_ingress_ns --show-events | grep Error

I have chosen to use the global-static-ip-name annotation, but any of the standard annotations would work.

Swap in traffic from Cloud Run application

# swap in Cloud Run url-map

gcloud compute target-https-proxies update $target_proxy_name --url-map="$cloud_run_url_map_link" --global

Over the next 60-240 seconds you will see several blips in availability as the swap is applied to the GCP resources, after which all traffic to the external HTTPS LB is served by the Cloud Run application.
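Rather than refreshing a browser during the swap, the transition can be watched with a small polling loop. This is a sketch under assumptions: the helper names `wait_for_status` and `http_status` are hypothetical, the hostname in the usage comment is a placeholder, and it presumes the maintenance app answers with a status code that differs from normal traffic (503 is used as an example, so check what your app actually returns).

```shell
# usage: wait_for_status <expected_code> <max_tries> <sleep_secs> <probe command...>
# Runs the probe repeatedly until it prints the expected code; returns 1 on timeout.
wait_for_status() {
  local expected="$1" max_tries="$2" sleep_secs="$3" i=1 code
  shift 3
  while [ "$i" -le "$max_tries" ]; do
    # a failing probe is reported as 000 instead of aborting the loop
    code=$("$@") || code="000"
    echo "try $i: status=$code"
    if [ "$code" = "$expected" ]; then
      return 0
    fi
    sleep "$sleep_secs"
    i=$((i + 1))
  done
  return 1
}

# probe: print only the HTTP status code returned for a URL
http_status() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

# usage sketch (placeholder hostname, not executed here):
# wait_for_status 503 60 5 http_status https://myapp.example.com/
```

The first matching response does not guarantee the swap has fully settled, given the blips described above, so it is reasonable to keep polling for a while after the first match.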

Swap in traffic from Cloud Function service

In the same manner as above, the traffic can be rerouted to the url-maps of our Cloud Function.

# swap in Cloud Function url-map

gcloud compute target-https-proxies update $target_proxy_name --url-map="$cloud_function_url_map_link" --global

Over the next 60-240 seconds you will see several blips in availability as the swap is applied to the GCP resources, after which all traffic to the external HTTPS LB is served by the Cloud Function.

Restore original Load Balancer settings

When the scheduled maintenance period is over, and the GKE cluster and services are ready to serve traffic again, the original url-maps link can be swapped back in and Ingress synchronization re-enabled by correcting the annotation value.

# restore original url-map

gcloud compute target-https-proxies update $target_proxy_name --url-map="$saved_url_map" --global

# resume normal Ingress synchronization by correcting the annotation

kubectl annotate --overwrite ingress $k8s_ingress_name -n $k8s_ingress_ns 'kubernetes.io/ingress.global-static-ip-name'=$lb_ip_name

Over the next 60-240 seconds you will see several blips in availability as the swap is applied to the GCP resources, after which traffic is restored to the GKE cluster and the Anthos Service Mesh.
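Before closing the maintenance window, it can be reassuring to confirm that the target-https-proxies object really points at the original url-maps again. A minimal sketch follows. The function name `proxy_uses_url_map` and the `GCLOUD` override variable are assumptions for illustration. It compares only the short resource name, in case the link is reported in a different but equivalent form, so it would not tell apart two url-maps that share a name in different scopes.

```shell
# GCLOUD can be overridden (for example with a stub) when testing
GCLOUD="${GCLOUD:-gcloud}"

# usage: proxy_uses_url_map <target-https-proxy-name> <url-map name or link>
# Returns 0 if the proxy currently points at the given url-map,
# 1 if it points elsewhere, 2 if the lookup itself failed.
proxy_uses_url_map() {
  local proxy="$1" expected="$2" actual
  actual=$($GCLOUD compute target-https-proxies describe "$proxy" \
    --format="value(urlMap)") || return 2
  # compare the last path segment of each value
  [ "${actual##*/}" = "${expected##*/}" ]
}

# usage sketch (not executed here):
# proxy_uses_url_map "$target_proxy_name" "$saved_url_map" && echo "restored"
```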
