In production data centers, it is not uncommon to have limited public internet access due to security policies. So while running ‘apt-get’ or adding a repository to sources.list is easy in your development lab, you have to figure out an alternative installation strategy because you need a process that looks the same across both development and production.

For some, building containers or images will satisfy this requirement. The container/image can be built once in development, and transferred as an immutable entity to production.

But for those who use automated configuration management tools such as Salt, Chef, Ansible, or Puppet to layer components on top of a base image inside a restricted environment, there is a need to get binary packages onto these guest operating systems without requiring public internet access.

There are several approaches that could be taken, such as using an offline repository or a tool like Synaptic, Keryx, or apt-mirror. In this post I’ll go over using apt-get on an internet-connected source machine to download the necessary packages for Apache2, and then running dpkg on the non-connected target machine to install each required .deb package and get a running instance of Apache2.

Note that this solution only addresses the apt packages. If you need to pull down JavaScript packages from npm or Python modules from PyPI, then you might want to look at my article on using a squid proxy to whitelist specific URLs.

Discovering Packages on Internet Connected Source

The first step is to discover the location of the target package and all its dependencies.

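To do this, we need an internet-connected source host that has the same architecture and the same set of installed packages as the target host. If the source host already had the package installed, apt-get would have nothing to download and would produce an empty list. Likewise, any dependency already present on the source host would be left out of the list.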
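One way to check how closely the two hosts match is to compare their package lists. The sketch below is only an illustration: `only_on_source` and the `packages-*.txt` file names are hypothetical, and it assumes you have exported one package name per line from each host (for example with `dpkg-query -W -f='${Package}\n'`) and have some way to bring the target's list back to the source host.

```shell
# Print packages that appear in the source host's list but not in the
# target host's list. These are the risky ones: apt-get on the source
# considers them satisfied and will not list them for download.
# Arguments: $1 = source package list, $2 = target package list
only_on_source() {
  src_sorted=$(mktemp)
  tgt_sorted=$(mktemp)
  # comm needs both inputs sorted the same way, so sort in the C locale
  LC_ALL=C sort -u "$1" > "$src_sorted"
  LC_ALL=C sort -u "$2" > "$tgt_sorted"
  # -2 hides lines unique to the target, -3 hides lines common to both
  LC_ALL=C comm -23 "$src_sorted" "$tgt_sorted"
  rm -f "$src_sorted" "$tgt_sorted"
}

# Example usage (hypothetical file names):
#   only_on_source packages-source.txt packages-target.txt
```

If this prints nothing, every package on the source host is also on the target, so the download list should not be missing anything the target needs.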

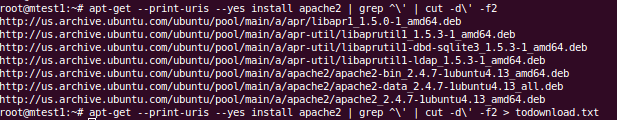

Using apt-get and its --print-uris option, we can parse out the required packages:

apt-get --print-uris --yes install apache2 | grep ^\' | cut -d\' -f2

As the screenshot shows, in addition to the apache2 .deb file, the list also includes the apache2-data, apache2-bin, and libaprutil* packages.
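To see why the grep and cut stages work, it helps to look at the shape of a single line. With --print-uris, apt-get prints each package as a single-quoted URL followed by the file name, size, and checksum, mixed in with its usual status messages. The sketch below runs the same filter on a made-up line; the host, version, size, and hash are placeholders, and `extract_uris` is just a name I picked for illustration.

```shell
# Keep only the lines that begin with a single quote, then take the text
# between the first pair of quotes, which is the download URL.
extract_uris() {
  grep "^'" | cut -d"'" -f2
}

# Made-up sample in the format apt-get --print-uris emits:
# 'URL' filename size checksum
sample="'http://archive.example.com/pool/main/a/apache2/apache2_1.0_amd64.deb' apache2_1.0_amd64.deb 12345 SHA256:0000"

# The status line is dropped by grep; only the URL survives.
printf '%s\n' "Reading package lists..." "$sample" | extract_uris
```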

We want to capture these URLs in a file, so we redirect the output of the previous command to a file:

apt-get --print-uris --yes install apache2 | grep ^\' | cut -d\' -f2 > todownload.txt

The only thing left is to actually download the packages at these URLs, which can be done with wget:

wget --input-file todownload.txt
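Before moving on, it can be worth confirming that every URL in the list actually produced a file. The following is a minimal sketch, not part of the original procedure: `check_downloads` is a hypothetical helper, and it assumes wget saved each file under the last path component of its URL. File names containing URL-encoded characters may not match exactly.

```shell
# Report any URL whose file is missing or empty in the download directory.
# Arguments: $1 = file with one URL per line, $2 = download directory
# Returns 0 if everything is present, 1 otherwise.
check_downloads() {
  list=$1
  dir=$2
  missing=0
  while IFS= read -r url; do
    # Skip blank lines in the list
    [ -n "$url" ] || continue
    file=$(basename "$url")
    # -s is true only if the file exists and is not empty
    if [ ! -s "$dir/$file" ]; then
      echo "missing: $file"
      missing=1
    fi
  done < "$list"
  return "$missing"
}

# Example usage, run from the directory where wget saved the files:
#   check_downloads todownload.txt .
```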

Transfer .deb files to Unconnected Target Host

Now that we have all these .deb files on the source host, it is really up to your data center specifics how you get them to the unconnected target host.

If you have an internal FTP server accessible to the target host, or want to use scp to transfer the files, that is fine.
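If you go the scp route, bundling the .deb files into a single archive first keeps the transfer to one file. This is only a sketch under my own assumptions: `bundle_debs`, the archive name, and the host and paths in the comments are all hypothetical.

```shell
# Pack every .deb in a directory into one gzip-compressed tar archive.
# Arguments: $1 = directory holding the .deb files, $2 = archive to create
bundle_debs() {
  src_dir=$1
  archive=$2
  # cd inside a subshell so the archive stores bare file names, while the
  # output redirect is still resolved relative to the caller's directory
  ( cd "$src_dir" && tar -czf - *.deb ) > "$archive"
}

# Example usage (hypothetical host and paths):
#   bundle_debs . apache2-debs.tar.gz
#   scp apache2-debs.tar.gz user@target-host:/tmp/
# Then on the target host:
#   mkdir -p /tmp/debs && tar -xzf /tmp/apache2-debs.tar.gz -C /tmp/debs
```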

If you are using SaltStack, you may place these .deb files in a file_root, and have states that copy them to the proper target hosts.

Install packages on Unconnected Target Host

Now that the various .deb files are located in a subdirectory on the unconnected target host, you can install them using dpkg:

dpkg -i *.deb
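After dpkg finishes, you may want to confirm that each package really ended up installed. The sketch below is one possible check, not something from the original procedure. Both function names are hypothetical, and it assumes the files follow the usual name_version_arch.deb naming so the package name is everything before the first underscore.

```shell
# Derive the package name from a .deb file name such as
# apache2-bin_<version>_<arch>.deb by cutting at the first underscore.
deb_package_name() {
  base=$(basename "$1")
  printf '%s\n' "${base%%_*}"
}

# For every .deb in a directory, ask dpkg whether that package is installed.
# Argument: $1 = directory holding the .deb files
verify_installed() {
  for deb in "$1"/*.deb; do
    # If the glob matched nothing, the literal pattern is left; skip it
    [ -e "$deb" ] || continue
    pkg=$(deb_package_name "$deb")
    # A fully installed package reports the status "install ok installed"
    if dpkg-query -W -f='${Status}\n' "$pkg" 2>/dev/null | grep -q "install ok installed"; then
      echo "ok: $pkg"
    else
      echo "NOT installed: $pkg"
    fi
  done
}

# Example usage on the target host:
#   verify_installed /tmp
```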

If using SaltStack, you can run dpkg with a cmd.run:

apache2-dpkg-local-install:
  cmd.run:
    - name: yes N | dpkg -i /tmp/*.deb

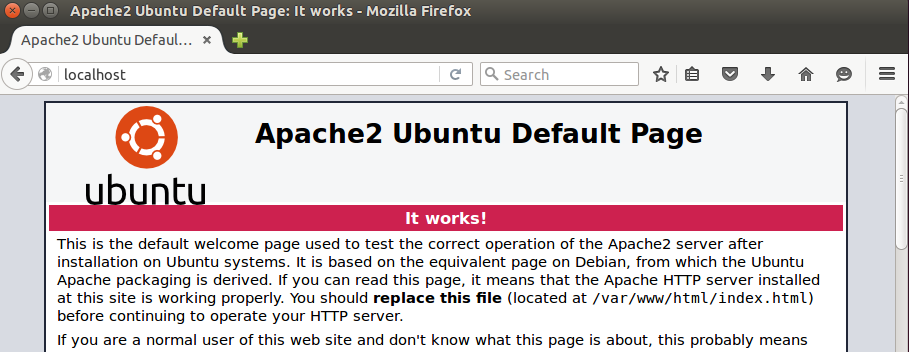

Upon completion, you should be able to access Apache2 by browsing to http://localhost on the target host.

REFERENCES

http://www.webupd8.org/2009/11/get-list-of-packages-and-dependencies.html

http://askubuntu.com/questions/974/how-can-i-install-software-or-packages-without-internet-offline

http://askubuntu.com/questions/306971/install-package-along-with-all-the-dependencies-offline