At some point you may need to schedule a maintenance window for your solution, but that doesn’t mean end-user traffic or client integrations will stop requesting services from the GCP external HTTPS LB that fronts all client requests.

The VM instances and GKE clusters that normally respond to requests may not be able to respond reliably since the maintenance will affect downstream dependencies and possibly involve restarts of critical services.

One way to address this issue is to create an independent gen2 Cloud Function that captures all requests and delivers a maintenance page that indicates the service is unavailable.

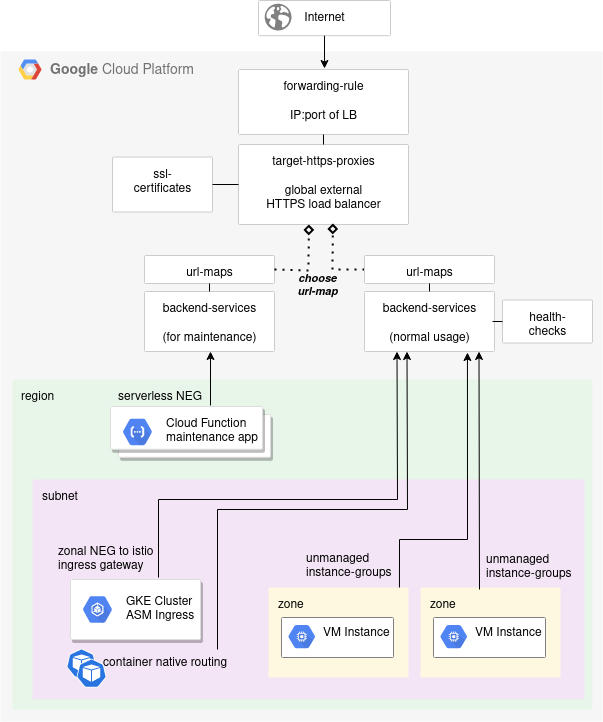

This is done by creating a serverless NEG for the Cloud Function, then updating the existing target-https-proxies to point at the desired url-maps object as shown below.

Solution Overview

To capture all incoming requests to our external HTTPS LB and direct them to our maintenance Cloud Function, we simply need to modify the existing target-https-proxies object.

Instead of pointing at the url-maps object of your regular backend-services object, you need to point it at the url-maps object of the maintenance backend service as shown below.

You may question why we are modifying at this level, and not instead modifying the url-maps default service OR updating the membership or capacity/rate levels of the backend-services to make this change.

We could make this change by instead modifying the existing url-maps default service, but non-trivial configurations have additional path-matcher and host rules that would need to be removed. This introduces complexity.

However, we could not make this change at the backend-services level, because the backend-services for a serverless NEG cannot have a health check, while the backend-services for an unmanaged instance group or regular NEG requires one. So even if we wanted to do the heavy work of shifting membership and rates/capacity of the backend service, this approach is not technically viable.

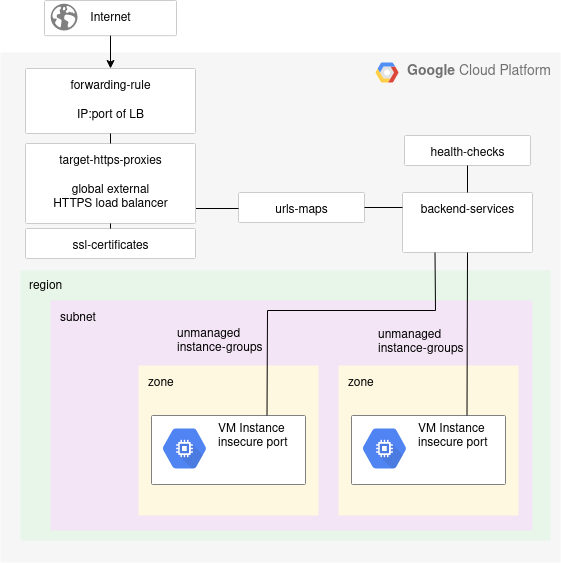

Current Load Balancer deployment

As a prerequisite, implement the steps in my previous article on setting up two Apache VMs as unmanaged instance groups fronted by an external HTTPS LB.

This will give you a target-https-proxies object named “extlb-target-https-proxy” that points to a url-maps object named “extlb-lb1”.

    # points to url-maps object
    gcloud compute target-https-proxies describe extlb-target-https-proxy --format="value(urlMap)"

The urlMap attribute is what we will change in upcoming sections to point to the maintenance Cloud Function instead.
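Before making any change, it can help to record which url-maps object the proxy currently uses, so the restore step does not depend on memory. The `urlMap` attribute is normally returned as a full resource URL rather than a short name, so the sketch below trims it to the trailing segment. The helper name `url_map_name` and the variable `original_map` are illustrative assumptions, not something the deployment scripts provide.

```shell
# Hypothetical helper: reduce a full resource URL to its trailing name.
url_map_name() {
  # strip everything up to and including the last '/'
  printf '%s\n' "${1##*/}"
}

# Usage (requires gcloud and the objects from the prerequisite article):
#   url=$(gcloud compute target-https-proxies describe extlb-target-https-proxy --format="value(urlMap)")
#   original_map=$(url_map_name "$url")
#   echo "proxy currently points at: $original_map"
```

With the prerequisite setup in place, this should report “extlb-lb1”, which is the name to restore later.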

Current content from LB

Let’s first show the responses currently delivered through the load balancer, which is content coming from the Apache VM instances.

    # get IP address of current LB ($lbname must hold the name of the LB forwarding rule)
    public_ip=$(gcloud compute forwarding-rules describe $lbname --global --format="value(IPAddress)")
    echo "the IP address for $lbname is $public_ip"

    # retrieve HTML from Apache
    curl -k https://$public_ip

Which looks like this in the browser.

Deploy Cloud Function maintenance app

What we need now is a new url-maps object that represents the maintenance app Cloud Function.

Grab my project from github, and deploy the ‘maintgen2’ Cloud Function and the necessary supporting LB objects.

    # get project code
    git clone https://github.com/fabianlee/gcp-https-lb-vms-cloudfunc.git
    cd gcp-https-lb-vms-cloudfunc
    cd roles/gcp-cloud-function-gen2/files

    # deploy Cloud Function 'maintgen2' and supporting LB objects
    ./deploy.sh --do-not-expose

    # show full details of new backend-services object
    gcloud compute backend-services describe maintgen2-backend --global

    # show full details of new url-maps object
    gcloud compute url-maps describe maintgen2-lb1 --global

This article is focused on showing you how to swap the url-maps. If you would like a walk-through of the objects and relationships that ‘deploy.sh’ creates, I recommend reading my previous article here.

Swap url-maps to serve maintenance app

Now we make the swap to the maintenance Cloud Function url-maps object.

gcloud compute target-https-proxies update extlb-target-https-proxy --url-map=maintgen2-lb1 --global

It takes about 2 minutes for the Load Balancer to reconfigure and reflect this change. You can poll using this command.

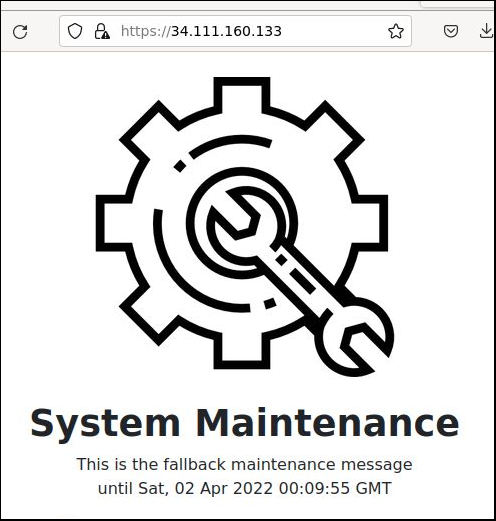

watch -n15 curl -ks https://$public_ip

There will be a 5-10 second blip where you get 502 errors as the LB switch is made. Once the switch completes, curl returns the maintenance page HTML along with a 503 HTTP status code, which is deliberate: it signals to clients that we are in maintenance mode.
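If you would rather have a script tell you when the switch has completed than watch the output by eye, the polling can be sketched as a small loop that checks only the status code. The function names `get_status` and `wait_for_status`, along with the default of 20 attempts at 15 second intervals, are assumptions made for this example.

```shell
# Hypothetical helper: print only the HTTP status code for a URL.
# -k skips certificate validation, as in the curl commands used in this article.
get_status() {
  curl -ks -o /dev/null -w '%{http_code}' "$1"
}

# Poll until the expected status code is seen or the attempts run out.
# Args: expected_code url [max_tries] [sleep_seconds]
wait_for_status() {
  expected=$1; url=$2; tries=${3:-20}; pause=${4:-15}
  i=1
  while [ "$i" -le "$tries" ]; do
    code=$(get_status "$url")
    echo "attempt $i: HTTP $code"
    if [ "$code" = "$expected" ]; then
      return 0
    fi
    i=$((i + 1))
    # only pause when another attempt remains
    if [ "$i" -le "$tries" ]; then
      sleep "$pause"
    fi
  done
  return 1
}

# Usage: wait_for_status 503 "https://$public_ip"
```

The transient 502 responses simply count as "not yet" and the loop keeps going. The same function can be reused with 200 as the expected code when service is restored.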

Restore service

Swapping back follows the same logic: we update the target-https-proxies object to point at the original url-maps object.

gcloud compute target-https-proxies update extlb-target-https-proxy --url-map=extlb-lb1 --global

Once again, swapping back to the normal content takes about 2 minutes for the Load Balancer to reconfigure. When it does happen successfully, you will then see curl return a 200 code from the Apache instances again.

watch -n15 curl -ks https://$public_ip

And from the browser, we once again see the content coming from the Apache VM instances.
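Since entering and leaving maintenance mode differ only in the url-maps name, the two commands can be wrapped in one small function to reduce the chance of a typo during a maintenance window. This is only a sketch: the function name `maintenance` and the `GCLOUD` override variable are assumptions for illustration, while the object names are the ones used in this article.

```shell
# Names taken from this article; adjust for your own deployment.
proxy="extlb-target-https-proxy"
normal_map="extlb-lb1"
maint_map="maintgen2-lb1"

# Hypothetical wrapper: 'on' serves the maintenance page, 'off' restores service.
# Set GCLOUD=echo to print the arguments instead of running gcloud (dry run).
maintenance() {
  case "$1" in
    on)  target=$maint_map ;;
    off) target=$normal_map ;;
    *)   echo "usage: maintenance on|off" >&2; return 2 ;;
  esac
  "${GCLOUD:-gcloud}" compute target-https-proxies update "$proxy" \
    --url-map="$target" --global
}

# Usage: maintenance on    (and later: maintenance off)
```

Running it once with `GCLOUD=echo` set is a cheap way to confirm which url-maps object would be applied before touching the live proxy.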
