microk8s has convenient out-of-the-box support for MetalLB and an NGINX ingress controller. But microk8s is also perfectly capable of handling Istio operators, gateways, and virtual services if you want the advanced policy, security, and observability offered by Istio.

In this article, we will install the Istio Operator and allow it to create the Istio Ingress gateway service. We follow that up by creating an Istio Gateway in the default namespace, then creating a Deployment and a VirtualService that projects it onto the Istio Gateway.

To exercise an even more advanced scenario, we will install both a primary and a secondary Istio Ingress gateway, each tied to a different MetalLB IP address. This can emulate serving one set of services to your public customers, and a different set of administrative applications to employees on a private internal network.

This article builds off my previous article where we built a microk8s cluster using Ansible. There are many steps required for Istio setup, so I have wrapped them up into Ansible roles.

Prerequisites

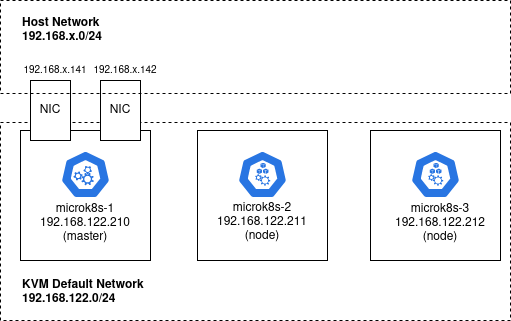

If you used Terraform as described in the previous article to create the microk8s-1 host, the master microk8s-1 host already has two additional network interfaces (ens4=192.168.1.141 and ens5=192.168.1.142).

However, a microk8s cluster is not required. You can run the steps in this article on a single microk8s node. But you MUST have two additional network interfaces and IP addresses on the same network as your host (e.g. 192.168.1.0/24) for the MetalLB endpoints.

Istio Playbook

From the previous article, your last step was running the playbook that deployed a microk8s cluster, playbook_microk8s.yml.

We need to build on top of that and install the Istio Operator, the Istio ingress gateway Services, the Istio Gateways, and the test VirtualServices and Deployments. Run this playbook:

ansible-playbook playbook_metallb_primary_secondary_istio.yml

At the successful completion of this playbook run, you will have Istio installed, two Istio Ingress services, two Istio Gateways, and two independent versions of the sample helloworld deployment served up using different endpoints and certificates.

The playbook uses curl to validate TLS as its success criterion. However, for learning purposes it is beneficial to step through the objects created and then smoke test the TLS endpoints manually. The rest of this article is devoted to these manual validations.

MetalLB validation

View the MetalLB objects.

$ kubectl get all -n metallb-system
NAME                              READY   STATUS    RESTARTS   AGE
pod/speaker-9xzlc                 1/1     Running   0          64m
pod/speaker-dts5k                 1/1     Running   0          64m
pod/speaker-r8kck                 1/1     Running   0          64m
pod/controller-559b68bfd8-mtl2s   1/1     Running   0          64m

NAME                     DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR                 AGE
daemonset.apps/speaker   3         3         3       3            3           beta.kubernetes.io/os=linux   64m

NAME                         READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/controller   1/1     1            1           64m

NAME                                    DESIRED   CURRENT   READY   AGE
replicaset.apps/controller-559b68bfd8   1         1         1       64m

Show the MetalLB configmap with the IP address range used.

$ kubectl get configmap/config -n metallb-system -o yaml
apiVersion: v1
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.1.141-192.168.1.142
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: ....
  creationTimestamp: "2021-07-31T10:07:56Z"
  name: config
  namespace: metallb-system
  resourceVersion: "38015"
  selfLink: /api/v1/namespaces/metallb-system/configmaps/config
  uid: 234ad41d-cfde-4bf5-990e-627f74744aad
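If you want to check the pool programmatically, the helpers below are a minimal sketch; the function names are hypothetical and not part of the Ansible roles. They assume a single layer2 pool written as one IPv4 "start-end" range, exactly as in the configmap above.

```bash
# pool_range: read MetalLB config text on stdin and print the first entry under "addresses:"
pool_range() {
  awk '/addresses:/ { getline; sub(/^[ \t]*-[ \t]*/, ""); print; exit }'
}

# ip_to_int IP: convert a dotted-quad IPv4 address into one integer
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_pool IP RANGE: succeed if IP lies inside RANGE, given as "start-end"
in_pool() {
  local n start end
  n=$(ip_to_int "$1")
  start=$(ip_to_int "${2%-*}")
  end=$(ip_to_int "${2#*-}")
  (( n >= start && n <= end ))
}
```

For example, piping `kubectl get configmap/config -n metallb-system -o jsonpath='{.data.config}'` into `pool_range` should print 192.168.1.141-192.168.1.142, and `in_pool 192.168.1.142 192.168.1.141-192.168.1.142` then succeeds.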

Istio Operator validation

View the Istio Operator objects in the ‘istio-operator’ namespace.

$ kubectl get all -n istio-operator
NAME                                        READY   STATUS    RESTARTS   AGE
pod/istio-operator-1-9-7-5d47654878-jh5sr   1/1     Running   1          65m

NAME                           TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
service/istio-operator-1-9-7   ClusterIP   10.152.183.120   <none>        8383/TCP   65m

NAME                                   READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/istio-operator-1-9-7   1/1     1            1           65m

NAME                                              DESIRED   CURRENT   READY   AGE
replicaset.apps/istio-operator-1-9-7-5d47654878   1         1         1       65m

The Operator should be in the ‘Running’ state. Now check the Istio Operator logs for errors.

$ kubectl logs --since=15m -n istio-operator $(kubectl get pods -n istio-operator -lname=istio-operator -o jsonpath="{.items[0].metadata.name}")
...
- Processing resources for Ingress gateways.
✔ Ingress gateways installed
...

Istio Ingress gateway validation

View the Istio objects in the ‘istio-system’ namespace. These are objects that the Istio Operator has created.

$ kubectl get pods -n istio-system
NAME                                              READY   STATUS    RESTARTS   AGE
istiod-1-9-7-656bdccc78-rr8hf                     1/1     Running   0          95m
istio-ingressgateway-b9b6fb6d8-d8fbp              1/1     Running   0          94m
istio-ingressgateway-secondary-76db9f9f7b-2zkcl   1/1     Running   0          94m

$ kubectl get services -n istio-system
NAME                             TYPE           CLUSTER-IP       EXTERNAL-IP     PORT(S)                                                                       AGE
istiod-1-9-7                     ClusterIP      10.152.183.198   <none>          15010/TCP,15012/TCP,443/TCP,15014/TCP                                         95m
istio-ingressgateway             LoadBalancer   10.152.183.92    192.168.1.141   15021:31471/TCP,80:32600/TCP,443:32601/TCP,31400:32239/TCP,15443:30571/TCP   94m
istio-ingressgateway-secondary   LoadBalancer   10.152.183.29    192.168.1.142   15021:30982/TCP,80:32700/TCP,443:32701/TCP,31400:31575/TCP,15443:31114/TCP   94m

Notice that we purposely created two Istio ingress gateways: one for primary access (such as public customer traffic), and the other to mimic secondary access (perhaps employee-only management access).

In the service list, the EXTERNAL-IP column shows our MetalLB IP endpoints, which is how we will ultimately reach the services projected onto these gateways.
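The Ansible role's IstioOperator manifest is not reproduced in this article. As a hedged sketch of how two gateways could be declared, the script below writes an IstioOperator resource with two entries under components.ingressGateways, each asking MetalLB for a fixed address through the Service's loadBalancerIP. The resource name, the revision value (inferred from the istiod-1-9-7 name), and the secondary gateway's pod label are all assumptions.

```bash
# Hypothetical sketch: write an IstioOperator manifest with two ingress gateways,
# each requesting a fixed MetalLB address. Not the Ansible role's actual template.
cat > istio-two-gateways.yaml <<'EOF'
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: istio-two-gateways        # hypothetical name
  namespace: istio-system
spec:
  profile: default
  revision: 1-9-7                 # assumed from the istiod-1-9-7 / istio-operator-1-9-7 names
  components:
    ingressGateways:
    - name: istio-ingressgateway
      enabled: true
      k8s:
        service:
          loadBalancerIP: 192.168.1.141   # primary MetalLB address
    - name: istio-ingressgateway-secondary
      enabled: true
      label:
        istio: ingressgateway-secondary   # assumed pod label for the secondary Gateway selector
      k8s:
        service:
          loadBalancerIP: 192.168.1.142   # secondary MetalLB address
EOF
```

If you experiment with it, compare it against the IstioOperator resource the playbook already created (`kubectl get iop -n istio-system`) rather than applying both side by side.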

Service and Deployment validation

Istio provides an example app called helloworld. Our Ansible roles created two independent deployments of it that can be projected onto the two Istio Gateways.

Let’s validate these deployments by testing access to the pods and services, without any involvement by Istio.

- Service=helloworld, Deployment=helloworld-v1

- Service=helloworld2, Deployment=helloworld-v2

To reach the internal pod and service IP addresses, we need to be inside the cluster itself, so ssh into the master before running these commands:

ssh -i tf-libvirt/id_rsa ubuntu@192.168.122.210

Let’s view the deployments, pods, and then services for these two independent applications.

$ kubectl get deployments -n default
NAME             READY   UP-TO-DATE   AVAILABLE   AGE
helloworld2-v2   1/1     1            1           112m
helloworld-v1    1/1     1            1           112m

$ kubectl get pods -n default -l 'app in (helloworld,helloworld2)'
NAME                              READY   STATUS    RESTARTS   AGE
helloworld2-v2-749cc8dc6d-6kbh7   2/2     Running   0          110m
helloworld-v1-776f57d5f6-4gvp7    2/2     Running   0          109m

$ kubectl get services -n default -l 'app in (helloworld,helloworld2)'
NAME          TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
helloworld2   ClusterIP   10.152.183.251   <none>        5000/TCP   113m
helloworld    ClusterIP   10.152.183.187   <none>        5000/TCP   113m

First, let’s pull from the private pod IP directly.

# internal IP of primary pod
$ primaryPodIP=$(microk8s kubectl get pods -l app=helloworld -o=jsonpath="{.items[0].status.podIPs[0].ip}")

# internal IP of secondary pod
$ secondaryPodIP=$(microk8s kubectl get pods -l app=helloworld2 -o=jsonpath="{.items[0].status.podIPs[0].ip}")

# check primary pod using internal IP
$ curl http://${primaryPodIP}:5000/hello
Hello version: v1, instance: helloworld-v1-776f57d5f6-4gvp7

# check secondary pod using internal IP
$ curl http://${secondaryPodIP}:5000/hello
Hello version: v2, instance: helloworld2-v2-749cc8dc6d-6kbh7

With the internal pod IPs proven out, move up to the ClusterIP defined at the Service level.

# IP of primary service
$ primaryServiceIP=$(microk8s kubectl get service/helloworld -o=jsonpath="{.spec.clusterIP}")

# IP of secondary service
$ secondaryServiceIP=$(microk8s kubectl get service/helloworld2 -o=jsonpath="{.spec.clusterIP}")

# check primary service
$ curl http://${primaryServiceIP}:5000/hello
Hello version: v1, instance: helloworld-v1-776f57d5f6-4gvp7

# check secondary service
$ curl http://${secondaryServiceIP}:5000/hello
Hello version: v2, instance: helloworld2-v2-749cc8dc6d-6kbh7
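Repeating these curl checks by eye gets tedious. The helpers below are a minimal sketch of a reusable check; the function names are hypothetical, and they assume the "Hello version: vN, instance: ..." response format shown above.

```bash
# expect_version VERSION: read a helloworld /hello response on stdin and
# succeed only if it reports the given version
expect_version() {
  grep -q "^Hello version: $1, instance: "
}

# check_hello URL VERSION: fetch URL and report whether the expected version answered
check_hello() {
  if curl -s "$1" | expect_version "$2"; then
    echo "OK   $1 -> $2"
  else
    echo "FAIL $1 (expected $2)"
    return 1
  fi
}
```

Inside the cluster session, `check_hello "http://${primaryPodIP}:5000/hello" v1` and `check_hello "http://${secondaryServiceIP}:5000/hello" v2` cover the same ground as the manual commands, and a mismatch returns non-zero so it can gate a script.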

These validations proved out the pods and services independently of the Istio Gateway and VirtualService. Notice that all of them used plain HTTP on port 5000, because TLS is layered on top by Istio.

Exit the cluster ssh session before continuing.

exit

Validate TLS certs

The Ansible scripts created a custom CA, and then a key and certificate for each of “microk8s.local” and “microk8s-secondary.local”. These are located in the /tmp directory of the microk8s-1 host.

These will be used by the Istio Gateway and VirtualService for secure TLS.

# show primary cert info
$ openssl x509 -in /tmp/microk8s.local.crt -text -noout | grep -E "CN |DNS"
Issuer: CN = myCA.local
Subject: CN = microk8s.local
DNS:microk8s.local, DNS:microk8s-alt.local

# show secondary cert info
$ openssl x509 -in /tmp/microk8s-secondary.local.crt -text -noout | grep -E "CN |DNS"
Issuer: CN = myCA.local
Subject: CN = microk8s-secondary.local
DNS:microk8s-secondary.local
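The Ansible role's exact certificate commands are not shown here. If you want to reproduce comparable material by hand, the script below is one way to do it with openssl: a throwaway self-signed CA named myCA.local, then a CA-signed certificate for microk8s.local carrying both DNS names as subjectAltName entries. Key size, validity period, and file names are assumptions, and it works in a scratch directory rather than writing into /tmp directly.

```bash
# Work in a scratch directory so nothing under /tmp is overwritten.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# 1. Self-signed CA certificate and key (CN matches the Issuer shown above).
openssl req -x509 -newkey rsa:2048 -nodes -days 30 \
  -subj "/CN=myCA.local" -keyout myCA.key -out myCA.crt

# 2. Private key and certificate signing request for the primary host name.
openssl req -newkey rsa:2048 -nodes \
  -subj "/CN=microk8s.local" -keyout microk8s.local.key -out microk8s.local.csr

# 3. Sign the CSR with the CA, adding both DNS names as subjectAltName entries.
printf 'subjectAltName=DNS:microk8s.local,DNS:microk8s-alt.local\n' > san.ext
openssl x509 -req -in microk8s.local.csr -CA myCA.crt -CAkey myCA.key \
  -CAcreateserial -days 30 -extfile san.ext -out microk8s.local.crt

# Same inspection as above: prints the Issuer, Subject, and DNS lines.
openssl x509 -in microk8s.local.crt -text -noout | grep -E "CN |DNS"
```

The keys are written unencrypted (`-nodes`), which is convenient for a lab but not something to copy into production.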

Validate Kubernetes TLS secrets

The keys and certificates will not be used by Istio unless they are loaded as Kubernetes secrets available to the Istio Gateway.

# primary tls secret for 'microk8s.local'
$ kubectl get -n default secret tls-credential
NAME             TYPE                DATA   AGE
tls-credential   kubernetes.io/tls   2      10h

# secondary tls secret for 'microk8s-secondary.local'
$ kubectl get -n default secret tls-secondary-credential
NAME                       TYPE                DATA   AGE
tls-secondary-credential   kubernetes.io/tls   2      10h

# if needed, you can pull the actual certificate from the secret
# the '.' in 'tls.crt' must be escaped with a backslash in the jsonpath expression
$ kubectl get -n default secret tls-credential -o jsonpath="{.data.tls\.crt}" | base64 --decode
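Secrets of type kubernetes.io/tls can be created with `kubectl create secret tls`, which stores a key and certificate pair; the DATA count of 2 above corresponds to its tls.crt and tls.key entries. The wrapper below is a hypothetical sketch, not the Ansible role's code, and it assumes each private key sits next to its certificate as /tmp/<fqdn>.key, which this article does not show.

```bash
# create_tls_secret NAME FQDN: load /tmp/FQDN.key and /tmp/FQDN.crt into a
# kubernetes.io/tls secret called NAME in the default namespace
# (assumption: the key file naming mirrors the certificate naming)
create_tls_secret() {
  local name=$1 fqdn=$2
  kubectl create secret tls "$name" -n default \
    --key="/tmp/${fqdn}.key" --cert="/tmp/${fqdn}.crt"
}
```

Under those assumptions, `create_tls_secret tls-credential microk8s.local` and `create_tls_secret tls-secondary-credential microk8s-secondary.local` would produce the two secrets listed above.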

Validate Istio Gateway

The Istio Gateway object is the entity that uses the Kubernetes TLS secrets shown above.

$ kubectl get -n default gateway
NAME                               AGE
gateway-ingressgateway-secondary   3h2m
gateway-ingressgateway             3h2m

Digging into the details of each Gateway object, we can see the host names it will process as well as the Kubernetes TLS secret it uses.

# show primary gateway
$ kubectl get -n default gateway/gateway-ingressgateway -o jsonpath="{.spec.servers}" | jq
[
  {
    "hosts": [
      "microk8s.local",
      "microk8s-alt.local"
    ],
    "port": {
      "name": "http",
      "number": 80,
      "protocol": "HTTP"
    }
  },
  {
    "hosts": [
      "microk8s.local",
      "microk8s-alt.local"
    ],
    "port": {
      "name": "https",
      "number": 443,
      "protocol": "HTTPS"
    },
    "tls": {
      "credentialName": "tls-credential",
      "mode": "SIMPLE"
    }
  }
]

# show secondary gateway
$ kubectl get -n default gateway/gateway-ingressgateway-secondary -o jsonpath="{.spec.servers}" | jq
[
  {
    "hosts": [
      "microk8s-secondary.local"
    ],
    "port": {
      "name": "http-secondary",
      "number": 80,
      "protocol": "HTTP"
    }
  },
  {
    "hosts": [
      "microk8s-secondary.local"
    ],
    "port": {
      "name": "https-secondary",
      "number": 443,
      "protocol": "HTTPS"
    },
    "tls": {
      "credentialName": "tls-secondary-credential",
      "mode": "SIMPLE"
    }
  }
]

Notice the first Gateway uses the ‘tls-credential’ secret, while the second uses ‘tls-secondary-credential’.
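Put back together as a manifest, the primary Gateway could look roughly like the file written below. The servers section mirrors the jsonpath output above; the metadata block and the "istio: ingressgateway" selector (the default label on the primary ingress gateway pods) are assumptions, since the selector was not part of that output.

```bash
# Hypothetical reconstruction of the primary Gateway; only .spec.servers comes
# from the output above, the metadata and selector are assumptions.
cat > gateway-ingressgateway.yaml <<'EOF'
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: gateway-ingressgateway
  namespace: default
spec:
  selector:
    istio: ingressgateway   # assumed: default label on the primary ingress gateway pods
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - microk8s.local
    - microk8s-alt.local
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      credentialName: tls-credential   # the kubernetes.io/tls secret listed earlier
    hosts:
    - microk8s.local
    - microk8s-alt.local
EOF
# To try it: kubectl apply -f gateway-ingressgateway.yaml
```

The secondary Gateway would follow the same shape with the microk8s-secondary.local host, the http-secondary/https-secondary port names, and tls-secondary-credential.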

Validate VirtualService

The VirtualService is the bridge between the purely Istio objects (istio-system/ingressgateway, default/Gateway) and the application objects (Pod, Deployment, Service).

The VirtualService is how the application is projected onto a specific Istio Gateway.

$ kubectl get -n default virtualservice
NAME                                           GATEWAYS                               HOSTS                                     AGE
hello-v2-on-gateway-ingressgateway-secondary   ["gateway-ingressgateway-secondary"]   ["microk8s-secondary.local"]              3h14m
hello-v1-on-gateway-ingressgateway             ["gateway-ingressgateway"]             ["microk8s.local","microk8s-alt.local"]   3h14m

Digging down into each VirtualService, you can see the expected HTTP Host headers, the Istio Gateway to project onto, the path to match, and the destination service and port.

# show primary VirtualService
$ kubectl get -n default virtualservice/hello-v1-on-gateway-ingressgateway -o jsonpath="{.spec}" | jq
{
  "gateways": [
    "gateway-ingressgateway"
  ],
  "hosts": [
    "microk8s.local",
    "microk8s-alt.local"
  ],
  "http": [
    {
      "match": [
        {
          "uri": {
            "exact": "/hello"
          }
        }
      ],
      "route": [
        {
          "destination": {
            "host": "helloworld",
            "port": {
              "number": 5000
            }
          }
        }
      ]
    }
  ]
}

# show secondary VirtualService
$ kubectl get -n default virtualservice/hello-v2-on-gateway-ingressgateway-secondary -o jsonpath="{.spec}" | jq
{
  "gateways": [
    "gateway-ingressgateway-secondary"
  ],
  "hosts": [
    "microk8s-secondary.local"
  ],
  "http": [
    {
      "match": [
        {
          "uri": {
            "exact": "/hello"
          }
        }
      ],
      "route": [
        {
          "destination": {
            "host": "helloworld2",
            "port": {
              "number": 5000
            }
          }
        }
      ]
    }
  ]
}
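Expressed as a manifest, the secondary VirtualService above could be written roughly as follows. The spec fields are taken directly from the jsonpath output; the apiVersion and the output file name are assumptions.

```bash
# Hypothetical manifest for the secondary VirtualService; the spec mirrors the
# jsonpath output above, the apiVersion and file name are assumptions.
cat > hello-v2-virtualservice.yaml <<'EOF'
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: hello-v2-on-gateway-ingressgateway-secondary
  namespace: default
spec:
  hosts:
  - microk8s-secondary.local
  gateways:
  - gateway-ingressgateway-secondary
  http:
  - match:
    - uri:
        exact: /hello
    route:
    - destination:
        host: helloworld2
        port:
          number: 5000
EOF
# To try it: kubectl apply -f hello-v2-virtualservice.yaml
```

Because the match is `exact: /hello`, any other path on microk8s-secondary.local should not be routed to helloworld2; a `prefix` match would be the looser alternative.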

Validate URL endpoints

With all the dependent objects validated, you can now run the ultimate test: an HTTPS request against the TLS-secured endpoints.

The Gateway matches on the HTTP Host header, so the client must send the proper FQDN; it is not sufficient to do a GET against the bare MetalLB IP addresses. The Ansible scripts should have already created entries in the local /etc/hosts file so we can use the FQDNs.

# validate that /etc/hosts has entries for the URLs
$ grep '\.local' /etc/hosts
192.168.1.141 microk8s.local
192.168.1.142 microk8s-secondary.local

# test primary gateway
# we use '-k' because the CA cert has not been loaded at the OS level
$ curl -k https://microk8s.local/hello
Hello version: v1, instance: helloworld-v1-776f57d5f6-4gvp7

# test secondary gateway
$ curl -k https://microk8s-secondary.local/hello
Hello version: v2, instance: helloworld2-v2-749cc8dc6d-6kbh7

Notice that the /etc/hosts entries correspond to the MetalLB endpoints. The tie between the MetalLB IP addresses and the Istio ingress gateway services was shown earlier, but is repeated below for convenience.

# tie between MetalLB and Istio Ingress Gateways
$ kubectl get -n istio-system services
NAME                             TYPE           CLUSTER-IP       EXTERNAL-IP     PORT(S)                                                                       AGE
istiod-1-9-7                     ClusterIP      10.152.183.198   <none>          15010/TCP,15012/TCP,443/TCP,15014/TCP                                         3h30m
istio-ingressgateway             LoadBalancer   10.152.183.92    192.168.1.141   15021:31471/TCP,80:32600/TCP,443:32601/TCP,31400:32239/TCP,15443:30571/TCP   3h30m
istio-ingressgateway-secondary   LoadBalancer   10.152.183.29    192.168.1.142   15021:30982/TCP,80:32700/TCP,443:32701/TCP,31400:31575/TCP,15443:31114/TCP   3h30m
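The same tie can be checked programmatically by reading the external IP that MetalLB wrote into each Service's status and comparing it with what the resolver (including /etc/hosts) returns for the FQDN. This is a minimal sketch with hypothetical helper names; it assumes getent is available, as it is on glibc-based distributions such as Ubuntu.

```bash
# gateway_ip SERVICE: print the MetalLB-assigned external IP of an ingress gateway Service
gateway_ip() {
  kubectl get -n istio-system "service/$1" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# hosts_ip FQDN: print the first address the local resolver returns for FQDN
hosts_ip() {
  getent hosts "$1" | awk '{print $1; exit}'
}
```

For example, `[ "$(gateway_ip istio-ingressgateway-secondary)" = "$(hosts_ip microk8s-secondary.local)" ] && echo "secondary matches"` confirms that the name you will curl points at the right gateway.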

Validate URL endpoints remotely

These same requests can also be made from your host machine, since the MetalLB endpoints are on the same network as your host (all our actions so far have been from inside the microk8s-1 host). But the Istio Gateway expects a proper HTTP Host header, so you have several options:

- Enable DNS resolution of these names on your upstream DNS server (e.g. your router)

- Add the ‘microk8s.local’ and ‘microk8s-secondary.local’ entries to your local /etc/hosts file

- OR use curl's ‘--resolve’ flag to specify the FQDN-to-IP mapping, which sends the Host header (and the TLS SNI name) correctly
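For the /etc/hosts option, a small idempotent helper avoids piling up duplicate lines when you re-run it. This is a hypothetical sketch, not part of the provided scripts; editing /etc/hosts itself requires root privileges, so the optional third argument lets you point it at another file.

```bash
# ensure_hosts_entry IP FQDN [FILE]: append "IP FQDN" to FILE (default /etc/hosts)
# unless a non-comment line already maps FQDN
ensure_hosts_entry() {
  local ip=$1 fqdn=$2 file=${3:-/etc/hosts}
  if ! awk -v h="$fqdn" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$fqdn" >> "$file"
  fi
}
```

As root, `ensure_hosts_entry 192.168.1.141 microk8s.local` followed by `ensure_hosts_entry 192.168.1.142 microk8s-secondary.local` adds the two mappings used in this article. It deliberately leaves an existing mapping alone, even if it points elsewhere, so review the file if an IP changes.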

I’ve provided a script that you can run from the host for validation:

./test-istio-endpoints.sh
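The contents of test-istio-endpoints.sh are not reproduced here. As a hypothetical sketch of the ‘--resolve’ option, the function below sends the request straight to a MetalLB address while curl still uses the FQDN for both the TLS SNI name and the HTTP Host header. Setting only -H 'Host: microk8s.local' against the bare IP would not send an SNI name, which the HTTPS Gateway likely needs to select the right certificate.

```bash
# probe_gateway FQDN IP: request /hello over HTTPS from the gateway at IP,
# letting curl map FQDN:443 to IP so SNI and Host both carry FQDN.
# -k skips CA verification because myCA.local is not trusted by the OS.
probe_gateway() {
  local fqdn=$1 ip=$2
  curl -sk --resolve "${fqdn}:443:${ip}" "https://${fqdn}/hello"
}
```

From the host, `probe_gateway microk8s.local 192.168.1.141` should return the v1 greeting and `probe_gateway microk8s-secondary.local 192.168.1.142` the v2 greeting, without touching /etc/hosts.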

Conclusion

Using this concept of multiple ingress, you can isolate traffic to different source networks, customers, and services.

REFERENCES

fabianlee github, microk8s-nginx-istio repo

istio, helloworld source for istio

dockerhub, helloworldv1 and helloworldv2 images

rob.salmond.ca, good explanation of Istio ingress gateway versus Istio Gateway and its usage

kubernetes.io, list of different ingress controllers

stackoverflow, diagrams of istiod, istio proxy, and ingress and egress controllers