Updated Aug 2023: tested with Kubernetes 1.25 and ingress-nginx 1.8.1

Creating a Kubernetes cluster on DigitalOcean can be done manually using its web Control Panel, but for automation purposes it is better to use Terraform.

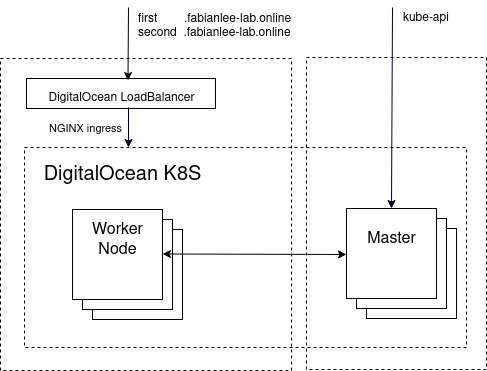

In this article, we will use Terraform to create a Kubernetes cluster on DigitalOcean infrastructure. We will then use Helm to deploy an NGINX ingress controller that is exposed on a public DigitalOcean load balancer.

Prerequisite tools

- Install terraform 1.4+

- Install kubectl

- Install helm3

- Install the DigitalOcean CLI tool doctl

I have provided shell scripts for your convenience:

sudo apt install curl git make -y

git clone https://github.com/fabianlee/docean-k8s-ingress.git

cd docean-k8s-ingress

BASEPATH=$(realpath .)

cd $BASEPATH/prereq

./install_terraform.sh

./install_kubectl_apt.sh

./install_helm3_apt.sh

./install_doctl.sh
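After the install scripts finish, a quick check that each tool is actually on the PATH can save a confusing failure later. This is only a minimal sketch; the function name `missing_tools` is my own invention and is not part of the repository.

```shell
# Print the name of every tool given as an argument that is NOT found on the PATH.
# Hypothetical helper for illustration; not part of docean-k8s-ingress.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Check the four tools this article relies on.
missing=$(missing_tools terraform kubectl helm doctl)
if [ -n "$missing" ]; then
  # $missing is left unquoted on purpose so the names print on one line
  echo "Missing tools:" $missing
else
  echo "All prerequisite tools found"
fi
```

If anything is reported missing, rerun the matching script from the prereq directory before continuing.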

Prerequisite DigitalOcean account

For the Kubernetes and LoadBalancer infrastructure in this article, we will use DigitalOcean. Go ahead and sign up for a trial DigitalOcean account, and once you are logged into the control panel, navigate to API > Tokens/Keys.

Click the ‘Generate New Token’ button and create a token named “docean1” with read+write privileges. Copy the personal token, because it will only be displayed once.

Then run these commands to establish a login context.

# enter personal token for authentication at prompt

doctl auth init --context docean1

# switch to context

doctl auth switch --context docean1

# view account info as test

doctl account get

# list versions of kubernetes available for deployment

doctl kubernetes options versions

Create Kubernetes Cluster

The ‘digitalocean_kubernetes_cluster‘ resource in Terraform’s main.tf is responsible for creating the cluster. Use terraform ‘init’, then ‘apply’ to create the single-node cluster.

# export your DigitalOcean personal token for convenience

export DO_PAT=xxxxxxxx

cd $BASEPATH/tf

# download modules

terraform init

# create cluster matching 'k8s_version_prefix' in variables.tf

terraform apply -var "do_token=${DO_PAT}" -auto-approve

This creates the Kubernetes cluster, and also creates the file ‘$BASEPATH/kubeconfig’ so you can use kubectl against the cluster.

cd $BASEPATH

# use DigitalOcean CLI to view cluster

doctl kubernetes cluster list

# kubectl context information

kubectl config get-contexts --kubeconfig=$BASEPATH/kubeconfig

# set this kubeconfig as default

export KUBECONFIG=$BASEPATH/kubeconfig

# view additional information

kubectl cluster-info

kubectl get nodes -o wide
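As an alternative to passing the token with -var on every Terraform command, Terraform also reads input variables from environment variables named TF_VAR_<name>. Here is a small sketch, assuming the input variable is called do_token (as in the apply command above) and that DO_PAT has already been exported. The function name `export_tf_token` is hypothetical.

```shell
# Copy the DigitalOcean token from DO_PAT into TF_VAR_do_token,
# which Terraform maps to the input variable "do_token".
# Hypothetical helper for illustration; not part of the repository.
export_tf_token() {
  if [ -z "${DO_PAT:-}" ]; then
    echo "DO_PAT is not set; export it first" >&2
    return 1
  fi
  export TF_VAR_do_token="$DO_PAT"
}

# Usage, from $BASEPATH/tf:
# export_tf_token && terraform apply -auto-approve
# export_tf_token && terraform destroy
```

This keeps the token out of the Terraform command line itself, where it would otherwise be visible in the process list while the command runs.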

Expose publicly using NGINX ingress

Install the NGINX helm chart

# add helm repo

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm repo update

# install from helm chart

helm show values ingress-nginx/ingress-nginx

helm install ingress-nginx ingress-nginx/ingress-nginx --set controller.publishService.enabled=true

# verify installation

helm list

# show ingress-nginx-controller components

kubectl get deployment ingress-nginx-controller

kubectl get pods -l "app.kubernetes.io/instance=ingress-nginx"

# should see ingress-nginx-controller and -admission

# EXTERNAL-IP will be 'pending' for ~5 mins, wait until populated

kubectl get services

# public IP can also be verified using DigitalOcean CLI

doctl compute load-balancer list --format Name,IP
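Rather than rerunning 'kubectl get services' by hand until EXTERNAL-IP is populated, the wait can be scripted. The sketch below polls the same jsonpath field this article reads later for the public IP. The function name `wait_for_lb_ip` and its default retry counts are my own assumptions; the service name and namespace match the helm install above.

```shell
# Poll the ingress controller service until the load balancer IP is populated.
# Arguments: number of tries (default 60), seconds between tries (default 10).
# Hypothetical helper for illustration; not part of the repository.
wait_for_lb_ip() {
  local tries="${1:-60}"
  local delay="${2:-10}"
  local ip=""
  local i=0
  while [ "$i" -lt "$tries" ]; do
    # Empty output means the load balancer has not been assigned an IP yet
    ip=$(kubectl get service ingress-nginx-controller -n default \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null) || true
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "timed out waiting for load balancer IP" >&2
  return 1
}

# Usage: up to 60 tries, 10 seconds apart (about 10 minutes)
# EXTIP_PRIMARY=$(wait_for_lb_ip 60 10) && echo "ingress is at $EXTIP_PRIMARY"
```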

Deploy both “hello” services

cd $BASEPATH/k8s

kubectl apply -f first-hello.yaml

kubectl apply -f second-hello.yaml

# will be empty

kubectl get pods

# show pods for first and second hello deployment

kubectl get pods -l "app in (hello-kubernetes-first,hello-kubernetes-second)"

# show first and second hello services

kubectl get services -l "app in (hello-kubernetes-first,hello-kubernetes-second)"

# deploy tiny-tools container for testing from inside cluster

kubectl apply -f tiny-tools.yaml

tiny_pod=$(kubectl get pod -l app=tiny-tools -o jsonpath='{.items[0].metadata.name}')

# do curl direct to first service

kubectl exec $tiny_pod -- curl -sS http://hello-kubernetes-first | grep hello

# do curl direct to second service

kubectl exec $tiny_pod -- curl -sS http://hello-kubernetes-second | grep hello
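If the curl checks above fail right after the apply, the pods may simply still be starting. A small sketch using 'kubectl wait' with the same app labels used in the selectors above; the function name `wait_for_app` and the 120 second default are assumptions of mine.

```shell
# Block until every pod carrying the given app label reports the Ready condition.
# Arguments: app label value, optional timeout (default 120s).
# Hypothetical helper for illustration; not part of the repository.
wait_for_app() {
  kubectl wait --for=condition=Ready pod -l "app=$1" --timeout="${2:-120s}"
}

# Usage: wait for both hello deployments and the tiny-tools pod
# for app in hello-kubernetes-first hello-kubernetes-second tiny-tools; do
#   wait_for_app "$app"
# done
```

Note that 'kubectl wait' returns an error if no pods match the label, so run it after the 'kubectl apply' commands.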

And then configure the NGINX ingress to serve the two services at the hostnames ‘first.fabianlee-lab.online’ and ‘second.fabianlee-lab.online’.

cd $BASEPATH/k8s

# ingress to deliver from [first|second].fabianlee-lab.online

kubectl apply -f ingress-primary.yaml

# internal cluster IP of ingress for initial testing

INTIP_PRIMARY=$(kubectl get service ingress-nginx-controller -o jsonpath='{.spec.clusterIP}')

# do curl to first service through the ingress

fqdn="first.fabianlee-lab.online"

# test insecure http

resolveStr="--resolve $fqdn:80:$INTIP_PRIMARY"

kubectl exec $tiny_pod -- curl -sS $resolveStr http://$fqdn | grep hello

# test secure https at internal ingress

# notice that certificate used is self-signed and does not match host

resolveStr="--resolve $fqdn:443:$INTIP_PRIMARY"

kubectl exec $tiny_pod -- curl -kv $resolveStr https://$fqdn 2>&1 | grep -E "subject:|hello"

#

# same internal test could be run on 'second.fabianlee-lab.online'
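The contents of ingress-primary.yaml are not shown in this article, so here is a rough sketch of what a host-based Ingress of this kind generally looks like. It is not a copy of the repository file. The resource name 'hello-ingress-sketch' is made up, the ingress class 'nginx' assumes the chart default, and port 80 is inferred from the in-cluster curl tests against http://hello-kubernetes-first.

```shell
# Write an illustrative Ingress manifest to a scratch file.
# This is NOT the repository's ingress-primary.yaml; names marked above are assumptions.
manifest="${TMPDIR:-/tmp}/ingress-sketch.yaml"
cat > "$manifest" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-ingress-sketch
spec:
  ingressClassName: nginx
  rules:
  - host: first.fabianlee-lab.online
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hello-kubernetes-first
            port:
              number: 80
  - host: second.fabianlee-lab.online
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: hello-kubernetes-second
            port:
              number: 80
EOF
echo "wrote $manifest"
```

Each rule matches on the Host header of the request, which is why the curl tests above use --resolve to send the expected hostname to the ingress IP.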

With the NGINX ingress tested internally, now we need to test from the public DigitalOcean LoadBalancer that has been created.

# public load balancer IP where ingress is exposed

EXTIP_PRIMARY=$(kubectl get svc ingress-nginx-controller -n default -o 'jsonpath={ .status.loadBalancer.ingress[0].ip }')

# add local host entries to support the FQDN

echo "$EXTIP_PRIMARY first.fabianlee-lab.online" | sudo tee -a /etc/hosts

echo "$EXTIP_PRIMARY second.fabianlee-lab.online" | sudo tee -a /etc/hosts

# test insecure http

fqdn=first.fabianlee-lab.online

curl -sS http://$fqdn | grep hello

# test secure https pull at public loadbalancer for first service

# notice that certificate used is self-signed and does not match host

curl -kv https://$fqdn 2>&1 | grep -E "subject:|hello"

# test secure https for second service

fqdn=second.fabianlee-lab.online

curl -kv https://$fqdn 2>&1 | grep -E "subject:|hello"
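One caveat with the 'tee -a' commands above is that rerunning them appends duplicate lines to /etc/hosts. A small sketch of a check that can guard the append; the function name `has_host_entry` is my own.

```shell
# Return success if the hostname already appears as a field on a
# non-comment line of the hosts file (default /etc/hosts).
# Hypothetical helper for illustration; not part of the repository.
has_host_entry() {
  awk -v h="$1" '
    !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
    END { exit !found }
  ' "${2:-/etc/hosts}"
}

# Usage: only append when the hostname is not listed yet
# for h in first.fabianlee-lab.online second.fabianlee-lab.online; do
#   has_host_entry "$h" || echo "$EXTIP_PRIMARY $h" | sudo tee -a /etc/hosts
# done
```

This only checks for the hostname, not the IP, so if you recreate the cluster and the load balancer IP changes, remove the old entries first.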

With this public connectivity proved out, your next steps would be to create public DNS records for these FQDNs and install a TLS certificate and key matching the hostnames.

In the next article, I will show how to use LetsEncrypt to generate certificates for this ingress.

Teardown DigitalOcean infrastructure

To avoid additional charges from this DigitalOcean infrastructure, have Terraform destroy the Kubernetes cluster, then manually destroy any remaining load balancers.

cd $BASEPATH/tf

terraform destroy -var "do_token=${DO_PAT}"

# manually destroy any loadbalancers

doctl compute load-balancer list --format ID,Name,IP

doctl compute load-balancer delete <ID>

# manually destroy any VPC

doctl vpcs list --format ID,Name,IPRange | grep 10.10.10.0

doctl vpcs delete <ID>

# manually destroy any domain

doctl compute domain list

doctl compute domain delete <domain>
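The local /etc/hosts entries added during the public test are not removed by any of the commands above. Here is a sketch for previewing and then removing them; the function name `without_lab_hosts` is hypothetical, and the pattern assumes you used the fabianlee-lab.online hostnames from this article.

```shell
# Print a hosts file with the lab entries filtered out.
# Does not modify the file itself, so it is safe to run as a preview.
# Hypothetical helper for illustration; not part of the repository.
without_lab_hosts() {
  grep -v 'fabianlee-lab\.online' "${1:-/etc/hosts}"
}

# Usage: review the filtered output first, then delete in place keeping a backup
# without_lab_hosts /etc/hosts
# sudo sed -i.bak '/fabianlee-lab\.online/d' /etc/hosts
```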

REFERENCES

Terraform, digitalocean_kubernetes_cluster

DigitalOcean, setting up nginx ingress using helm

DigitalOcean, how to install software on k8s with helm3 and create custom charts

DigitalOcean, load balancers doc

DigitalOcean, dns01 digitalOcean provider

github docs, using helm3 for ingress-nginx

github docs, multiple instances of ingress-nginx in same cluster

eff.org, deep dive into lets encrypt dns validation

kosyfrances.com, letsencrypt with DNS01 challenge on GKE

cert-manager, using specific dns servers for dns01 solver

NOTES

create ssh key in DigitalOcean using doctl [1]

doctl compute ssh-key list

doctl compute ssh-key create id_rsa --public-key="$(cat id_rsa.pub)"

install helm3 from binary package

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3

/bin/bash get_helm.sh

helm version

using doctl to save kubeconfig

doctl kubernetes cluster list --format ID,Name

doctl kubernetes cluster kubeconfig save <clusterID>

scaling up and rolling back scale of helm chart

# scale up using current helm chart values

kubectl get pods

helm upgrade ingress-nginx ingress-nginx/ingress-nginx --set controller.replicaCount=3 --reuse-values

kubectl get pods

# rollback to original settings, with one pod

helm list

helm rollback ingress-nginx 1

kubectl get pods

# changing values for installed chart

helm upgrade ingress-nginx ingress-nginx/ingress-nginx --set controller.publishService.enabled=true --reuse-values

Testing public ingress without local /etc/hosts entries, using --resolve

# test insecure http

resolveStr="--resolve $fqdn:80:$EXTIP_PRIMARY"

curl -sS $resolveStr http://$fqdn | grep hello

# test secure https pull at public loadbalancer

# notice that certificate used is self-signed and does not match host

resolveStr="--resolve $fqdn:443:$EXTIP_PRIMARY"

curl -kv $resolveStr https://$fqdn 2>&1 | grep -E "subject:|hello"