GKE Autopilot reduces the operational cost of managing GKE clusters by freeing you from node-level maintenance, so you can focus on pod workloads. Charges accrue based on pod resource consumption, not on node sizes or node count, both of which are managed by Google.

Since you no longer own the node level, there are limitations on the pod Linux capability set, privileged pods, external monitoring services, and so on, but these are not an issue for most workload types.

In this article, I will show you how to create a private GKE cluster in Autopilot mode using Terraform. The cluster endpoint is also private for enhanced security, so the cluster must be managed through a public bastion/jumpbox.

Binary Prerequisites

Install the following binaries to prepare for the installation:

Google Cloud Prerequisites

Create a Google Cloud project and subscribe to Anthos per the documentation.

- Log in to the cloud console at https://console.cloud.google.com with your Google ID

- Enable billing for the GCP project, Hamburger menu > Billing

- Enable the Anthos API, Hamburger menu > Anthos, click ‘Start Trial’

- Click ‘Enable’

From the console, initialize your login context to Google Cloud.

gcloud init

gcloud auth login
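
Before starting the build, it can help to confirm that the command-line tools this walkthrough invokes are on your PATH. The sketch below is a small pre-flight check; the function name missing_tools is hypothetical, and the tool list is inferred from commands mentioned in this article (git, gcloud, terraform, kubectl, ansible), not taken from the author's own prerequisite list.

```shell
# Print each named tool that is NOT found on PATH.
# Returns non-zero if at least one tool is missing.
missing_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Assumed tool list, inferred from the steps in this article.
missing_tools git gcloud terraform kubectl ansible \
  || echo "install the tools listed above before continuing"
```

If nothing is printed, all of the listed tools were found.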

Start build

Download my project from GitHub and start the build.

git clone https://github.com/fabianlee/gcp-gke-clusters-ingress.git
cd gcp-gke-clusters-ingress

# create unique project id
./generate_random_project_id.sh

# select GKE version from REGULAR channel
./select_gke_version.sh

# show build steps
./menu.sh

Continue build using menu actions

Follow the menu steps starting at the top and working your way down to create the project, networks, VMs, and finally the “privautopilot” action in order to create the private Autopilot cluster.

project          Create gcp project and enable services
svcaccount       Create service account for provisioning
networks         Create network, subnets, and firewall
cloudnat         Create Cloud NAT for public egress of private IP
sshmetadata      Load ssh key into project metadata
vms              Create VM instances in subnets
enablessh        Setup ssh config for bastions and ansible inventory
ssh              SSH into jumpbox
ansibleping      Test ansible connection to public and private vms
ansibleplay      Apply ansible playbook of minimal pkgs/utils for vms
gke              Create private GKE cluster w/public endpoint
autopilot        Create private Autopilot cluster w/public endpoint
privgke          Create private GKE cluster w/private endpoint
privautopilot    Create private Autopilot cluster w/private endpoint
kubeconfigcopy   Copy kubeconfig to jumpboxes

Run “kubeconfigcopy” in order to push the newly created kubeconfig to the jumpbox for your use in later steps.

Terraform module for private GKE cluster

The Terraform logic for the Autopilot GKE cluster is found in main.tf of the gcp-gke-private-autopilot-cluster module.

This is where the google_container_cluster resource structure is defined, and enable_autopilot is set to true.
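
As a point of comparison outside Terraform, the same kind of cluster (Autopilot, private nodes, private endpoint, one master authorized network) can be described with the gcloud CLI. This is only a sketch and not the author's module: the function name create_private_autopilot and the cluster, region, network, and subnet values are hypothetical placeholders, and only the 10.0.91.0/24 authorized range comes from this article. By default the command is printed rather than executed.

```shell
# Hypothetical values; substitute the names from your own project.
CLUSTER_NAME="my-autopilot"
REGION="us-east1"
NETWORK="my-network"
SUBNET="my-subnet"
AUTHORIZED_CIDR="10.0.91.0/24"   # master authorized network used in this article

create_private_autopilot() {
  # By default only print the command; set DRY_RUN=0 to really run gcloud.
  local run="echo"
  if [ "${DRY_RUN:-1}" = "0" ]; then run=""; fi
  $run gcloud container clusters create-auto "$CLUSTER_NAME" \
    --region="$REGION" \
    --network="$NETWORK" \
    --subnetwork="$SUBNET" \
    --enable-private-nodes \
    --enable-private-endpoint \
    --enable-master-authorized-networks \
    --master-authorized-networks="$AUTHORIZED_CIDR"
}

create_private_autopilot
```

Printing first makes it easy to review the flags before anything billable is created.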

Using kubectl to access the Autopilot cluster

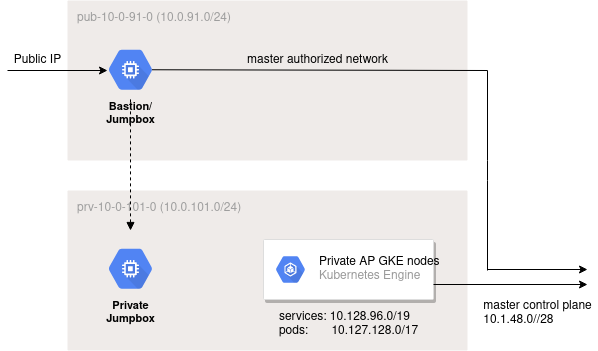

This private Autopilot GKE cluster purposely does not have a public endpoint enabled. To access the cluster you will need to be either:

- In the same subnet as the cluster nodes (10.0.101.0/24)

- Or in a specified master authorized network, which we have set as 10.0.91.0/24

This allows you to use kubectl from either the private jumpbox, or the public bastion/jumpbox in 10.0.91.0/24.
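
The access rule above amounts to a CIDR membership test on the client's IP address. The sketch below illustrates that test with the two ranges from this article; the function names are hypothetical, and it models only the address rule, not firewall rules or routing, which also affect real reachability.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1          # split "a.b.c.d" into $1..$4
  echo "$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))"
}

# Succeed (exit 0) if IP $1 lies inside CIDR $2, e.g. 10.0.91.0/24.
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/} mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ "$(( $(ip_to_int "$ip") & mask ))" -eq "$(( $(ip_to_int "$net") & mask ))" ]
}

# Report whether a client IP falls in the node subnet or the
# master authorized network described in this article.
can_reach_endpoint() {
  if ip_in_cidr "$1" 10.0.101.0/24 || ip_in_cidr "$1" 10.0.91.0/24; then
    echo "allowed"
  else
    echo "blocked"
  fi
}

can_reach_endpoint 10.0.91.7    # address in the public bastion subnet
can_reach_endpoint 10.0.50.3    # address in neither range
```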

Run the “ssh” command and then select “vm-priv-10-0-101-0”. You will be forwarded through the public bastion vm-pub-10-0-91-0 and into the private jumpbox.

The VM is preconfigured with the correct KUBECONFIG value, so all you need to do is run “kubectl get nodes” to prove out the connection.
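
If you want a one-line summary instead of reading the node table, the output of kubectl can be piped through a small filter. This is a sketch with a hypothetical function name, count_ready_nodes; it assumes the default column layout of “kubectl get nodes”, where the second column is STATUS.

```shell
# Read "kubectl get nodes --no-headers" output on stdin and summarize
# how many nodes report a STATUS of exactly "Ready".
count_ready_nodes() {
  awk 'NF { total++; if ($2 == "Ready") ready++ }
       END { printf "%d/%d nodes Ready\n", ready, total }'
}

# Usage on the jumpbox:
#   kubectl get nodes --no-headers | count_ready_nodes
```

Nodes showing states such as NotReady or Ready,SchedulingDisabled are counted in the total but not as Ready.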

Destroy all GCP Objects

If you do not destroy all GCP objects, you will be charged. These menu items will assist in destroying, but you should do a manual check as well.

delgke             Delete GKE public standard cluster
delautopilot       Delete GKE public Autopilot cluster
delprivgke         Delete GKE private standard cluster
delprivautopilot   Delete GKE private Autopilot cluster
delvms             Delete VM instances
delnetworks        Delete networks and Cloud NAT
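
For the manual check, listing what remains in the project is a reasonable starting point. The sketch below runs a few read-only gcloud list commands; the function name check_leftovers is hypothetical, and the resource types chosen (clusters, VM instances, Cloud Routers that host Cloud NAT, networks) are an assumption based on what the menu creates, so other billable objects may still need a look in the console.

```shell
# List resources that may still exist (and still be billed) in a project.
# Each command is echoed first; it is executed only if gcloud is installed.
check_leftovers() {
  local project=$1 cmd
  for cmd in \
    "container clusters list" \
    "compute instances list" \
    "compute routers list" \
    "compute networks list"
  do
    echo "== gcloud $cmd --project=$project"
    if command -v gcloud >/dev/null 2>&1; then
      # $cmd is intentionally unquoted so it splits into separate words.
      gcloud $cmd --project="$project" || echo "(command failed)"
    fi
  done
}

# Example: check_leftovers my-project-id
```

Empty listings for every section suggest the menu cleanup removed those resource types.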

NOTES

After initial install of gcloud using apt, upgrade just that package

sudo apt update

sudo apt install --only-upgrade google-cloud-sdk -y

Managed ASM, checking for successful provisioning

# should be 'Provisioned'

kubectl get controlplanerevision asm-managed-stable -n istio-system -o=jsonpath='{.status.conditions[?(@.type=="Reconciled")].reason}'

Managed ASM, showing the control plane version

kubectl get ds istio-cni-node -n kube-system -o=jsonpath="{.spec.template.spec.containers[0].image}" | grep -Po "install-cni:\K.*"