Anthos GKE on-prem is a managed platform that brings GKE clusters to on-premise datacenters. This product offering brings best practice security measures, tested paths for upgrades, basic monitoring, platform logging, and full enterprise support.

Anthos GKE on-prem is a managed platform that brings GKE clusters to on-premise datacenters. This product offering brings best practice security measures, tested paths for upgrades, basic monitoring, platform logging, and full enterprise support.

Setting up a platform this extensive requires many steps as officially documented here. However, if you want to practice in a lab or home environment this is possible using an Ubuntu host with 48Gb physical RAM running a nested version of ESXi 7.1U1+ on KVM.

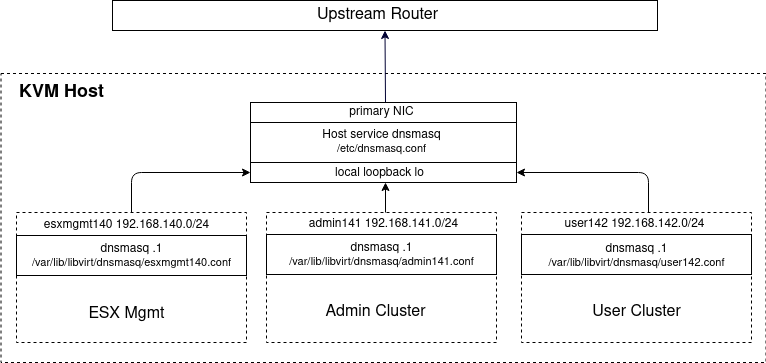

Multiple networks are required for: ESX management, Anthos Admin clusters, and Anthos User clusters. But this can be emulated with KVM routed networks using virtual bridges. Below is a logical diagram of our target Anthos on-prem build.

Google Cloud Prerequisites

Create a Google cloud project and subscribe to Anthos per the documentation.

- Login to the cloud console https://console.cloud.google.com with your Google Id

- Enable billing for the GCP project, Hamburger menu > Billing

- Enable the Anthos API, Hamburger menu > Anthos, click ‘Start Trial’

- Click ‘Enable’

This enablement can also be done with gcloud.

gcloud services enable anthos.googleapis.com

Install Ansible (on Host)

There are many commands and templating steps in this article, I have used Ansible to simplify those commands.

Install Ansible using my article here.

Install KVM (on Host)

Install KVM on your Ubuntu host, see my article here.

This provides a type 1 Hypervisor on your bare metal Ubuntu host where we will virtualize multiple networks and a nested ESXi server.

Install Terraform (on Host)

The VM and networking infrastructure is setup with Terraform wherever possible.

Install Terraform using my article here.

Install dnsmasq service (on Host)

As described in one of my previous articles, use an independent host instance of dnsmasq so that all the KVM routed networks can point at it for upstream DNS resolution. The routed networks we are going to create with KVM in later steps are be able to reach your KVM Host at is primary NIC as shown below.

Install dnsmasq service on KVM host

# is the host dnsmasq service already installed? sudo systemctl status dnsmasq.service --no-pager # if not, then install sudo apt install dnsmasq -y

Use the following lines in “/etc/dnsmasq.conf”, which will limit the binding to the local (lo) and public interface so it does not interfere with the private libvirt bindings.

listen-address=127.0.0.1 # you want a binding to the loopback interface=lo # and additional binding to public interface (e.g. br0,ens192) # I've seen 'unrecognized interface' errors when using interface name # use listen-address as an alternative #interface=ens192 listen-address=<yourNIC_IP> bind-interfaces server=<yourUpstreamDNSIPAddress> log-queries # does not go upstream to resolve addresses ending in 'home.lab' local=/home.lab/

And although you could add custom hostname mappings directly to dnsmasq.conf, dnsmasq also reads “/etc/hosts” as part of its lookup logic, so this is a nice way to easily add entries. Go ahead and add the following entries to “/etc/hosts”.

echo 192.168.140.236 esxi1.home.lab | sudo tee -a /etc/hosts echo 192.168.140.237 vcenter.home.lab | sudo tee -a /etc/hosts echo 192.168.142.253 anthos.home.lab | sudo tee -a /etc/hosts # restart dnsmasq sudo systemctl restart dnsmasq # make sure there are no errors sudo systemctl status dnsmasq

Configure host to use local dnsmasq service

To have your host use this local dnsmasq service, configure your resolv configuration. In older versions of Ubuntu, this typically meant modifying “/etc/resolv.conf”.

However, in newer versions of Ubuntu modifying this file is not the correct method because resolv is managed by Systemd. Instead, you want to modify “/etc/systemd/resolved.conf”.

# view current resolv for each interface resolvectl dns # change 'DNS' to 127.0.0.1 sudo vi /etc/systemd/resolved.conf # restart systemd resolv and then check status sudo systemctl restart systemd-resolved sudo systemctl status systemd-resolved # global DNS should now be '127.0.0.1' pointing to local dnsmasq resolvectl dns # check full settings sudo systemd-resolve --status

Validate

This should allow you to use nslookup or dig against the local dnsmasq service to do either a name resolution or reverse lookup.

$ dig @127.0.0.1 esxi1.home.lab +short 192.168.140.236 $ dig @127.0.0.1 -x 192.168.140.236 +short esxi1.home.lab. $ dig @127.0.0.1 vcenter.home.lab +short 192.168.140.237 $ dig @127.0.0.1 -x 192.168.140.237 +short vcenter.home.lab.

This lookup and reverse lookup is mandatory in order for the vCenter installation to perform correctly.

Tail the syslog to see the most recent DNS queries made.

sudo tail /var/log/syslog -n1000 | grep dnsmasq

Pull github project

In order to start the Anthos installation, go ahead and pull my project code from github.

# required packages sudo apt install git make curl -y # clone project git clone https://github.com/fabianlee/anthos-nested-esx-manual.git # go into project, save directory into 'project_path' variable cd anthos-nested-esx-manual export project_path=$(realpath .)

We will use the files and scripts in this directory throughout the rest of the article as we step through the deployment.

Generate environment specific files

There are certain variables such as GCP project name/id, and host IP address that need to be inserted in the Anthos configuration files. We will use Ansible to define the variables and generate environment specific files from jinja2 templates.

First, modify the Ansible variables per your specific environment, then call the playbook that will generate the local environment specific files.

cd $project_path # modify GCP project Id # the rest of the variables will be default if you have # been following my articles vi group_vars/all # generate environment specific files ansible-playbook playbook_generate_local_files.yml

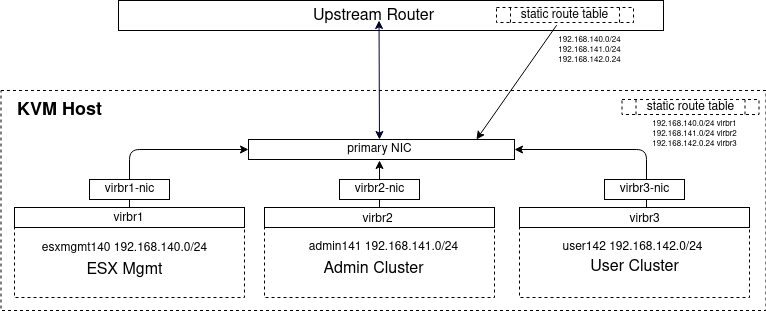

Create 3 routed KVM networks (on host)

Create KVM routed networks for use by esxi host: esxmgmt140, admin141, user142. Do this with the terraform libvirt provider.

cd $project_path/tf-create-local-kvm-routed-networks # should only have 'default' kvm nat, 192.168.122.0/24 virsh net-list # run terraform to create 3 routed KVM networks make

Viewing the host routing table should now show the below

$ route -n Kernel IP routing table Destination Gateway Genmask Flags Metric Ref Use Iface ... 192.168.140.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr3 192.168.141.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr2 192.168.142.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr1

And listing the KVM networks should show you the default KVM network at 192.168.122 as well as the 140, 141, and 142 networks you defined.

$ virsh net-list Name State Autostart Persistent ------------------------------------------------ default active yes yes esxmgmt140 active yes yes admin141 active yes yes user142 active yes yes

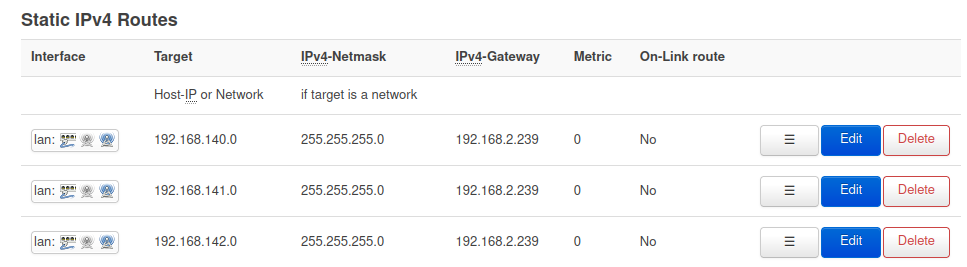

These are routed KVM networks, which means the responsibility for routing these networks falls to you. The easiest way is typically to add 3 static routes on your upstream router that points these subnets back to the public interface of this host server.

Adding these static routes on your upstream router is unfortunately something I cannot show you how to do exactly, because some of you will be in lab environments using commercial routers while others are on consumer routers from varying manufacturers. As an example, below is a screenshot of the static routes of the upstream OpenWRT router in my lab environment.

This points all traffic on 192.168.140, .141, and .142 back to my KVM host network public interface at 192.168.2.239, which knows how to handle these CIDR blocks because of its host routing table shown earlier. The method you use to route these networks is dependent on your network topology and devices.

This is complex enough that I think it’s important to validate that these KVM routed networks have connectivity between them and have the ability to do nslookup properly. I’ve provided a terraform file that can create an Ubuntu instance in each KVM routed network and run a connectivity test.

cd $project_path/tf-test-routed-networks-kvm # show guest hosts currently available virsh list # create 3 KVM Ubuntu guests, each in different network make # show 3 guest hosts now available # creates 'kvm140', 'kvm141', 'kvm142' virsh list # test connectivity to each other, # and forward+reverse resolution to [esxi1,vcenter].home.lab ./run-tests.sh # tear down test vms make destroy

The test goes into each of these guest VMs, and does:

- netcat to port 22 of all the other VMs to prove it can make a connection

- DNS lookups and reverse lookups to verify the ‘esxi1.home.lab’ entries from previous dnsmasq section are valid

- DNS lookup and ping to ‘archive.ubuntu.com’ to prove outside connectivity

You do not have to immediately tear these VM down and delete them. They are helpful when troubleshooting any routing issues between these virtual networks and your upstream router at the KVM level.

As a quick note, if the default KVM network (192.168.122.0/24) forward mode is set to ‘nat’, it is limited to outbound-only connections to these 3 routed networks you just created. I would recommend changing the forward mode = ‘route’ if you want bi-directional access.

# stop any VMs using the network before changing virsh net-dumpxml default # then restart network virsh net-destroy default virsh net-start default

Deploy nested ESXi 7.0 U1+ (from Host)

Use my article on nested ESXi 7.0 lab installation as your guide, but instead of using a single NIC on the default KVM network (192.168.122.0/24), add 3 NIC to the KVM host, all of type vmxnet3 for: esxmgmt140, admin141, and user142.

Use at least a 1500Gb sparse disk. If your default pool location will not accommodate that much space, create another pool.

# show location of 'default' disk pool virsh pool-dumpxml default | grep path # if you need to put this large esxi disk in another location virsh pool-define-as kvmpool --type dir --target /data/kvm/pool

Reserve as much cpu as possible and overallocate your RAM (use more than your host, allow KVM to use OS swap). This nested ESXi will be running a significant load: vCenter, the Anthos Workstation, the Admin Cluster master nodes, and the User Cluster worker nodes.

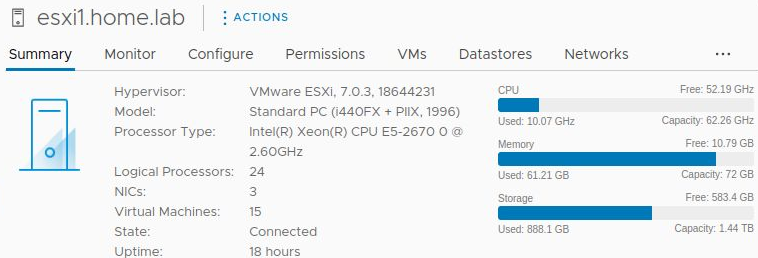

With a 1500Gb sparse disk, 72Gb RAM overcommitted on a 64Gb host, and 24 vcpu overcommited on a 16 cpu host (2 threads/core,4 cores/socket), the screenshot below shows the resources used when the build is complete. CPU is adequate, but it is using 61Gb of RAM.

NOTE: you will need at least 48Gb RAM on your KVM host to deploy this full solution (64Gb would be greatly preferrable). I see performance hiccups from file swapping even at 48Gb RAM with swap on a different SSD than the KVM qcow2 backing file.

Below is the command from my article, modified for 3 NIC on our routed networks, larger vcpu/RAM allocations, and using a custom disk pool named “data”.

iso=~/Downloads/VMware-VMvisor-Installer-7.0U3-18644231.x86_64.iso # use pool that has enough capacity for sparse 1500Gb disk_pool=data # even though disk is sparse, takes a couple of minutes to create virt-install --virt-type=kvm --name=esxi1 --cpu host-passthrough --ram 73728 --vcpus=24 --virt-type=kvm --hvm --cdrom $iso --network network:esxmgmt140,model=vmxnet3 --network network:admin141,model=vmxnet3 --network network:user142,model=vmxnet3 --graphics vnc --video qxl --disk pool=$disk_pool,size=1500,sparse=true,bus=ide,format=qcow2 --boot cdrom,hd,menu=on --noautoconsole --force

That article also shows how to use the latest UEFI firmware, which can only be changed before ESXi installation.

Create Seed VM (from Host)

The seed vm is a KVM Guest VM (a peer of the ESXi server) that we will use to install vCenter as well as the Anthos Admin Workstation.

Let’s use KVM to create a VM in the same subnet as ESXi, 192.168.124.0/22.

# create Ubuntu seed vm at 192.168.124.220 cd $project_path/tf-kvm-seedvm # list of current kvm guests virsh list # create KVM seed VM make # run basic set of ping and outside connectivity tests ./run-tests.sh # now shows 'seedVM' virsh list

Now install basic utilities on the seed VM: gcloud, kubectl, terraform, etc.

# run prequisite ansible scripts cd $project_path # ansible has local dependencies to galaxy modules ansible-playbook install_ansible_dependencies.yml # now install basic utilities needed on seed ansible-playbook playbook_seedvm.yml

Copy additional files to seed (from Host)

Copy the vcsa-esxi.json file used to install vCenter and a convenience script for govc to the seed VM.

# copy additional files needed from host to seed VM

cd $project_path

ansible-playbook playbook_generate_vcenter_files.yml

Prepare seed for vCenter install (from Host)

The KVM domain name of the seed vm is ‘seedvm-192.168.140.220’.

Using my article on vCenter 7.0 installation as a reference, let’s mount the ISO for the vCenter installer. You may need to reboot the seed VM.

# all commands below OR script # cd $project_path/needed_on_vcenter_installer # ./insert-vcenter-installer-iso.sh ~/Downloads/VMware-VCSA-all-7.0.3-18778458.iso seedvm=seedvm-192.168.140.220 # get current device and ISO file being used cdrom=$(virsh domblklist $seedvm --details | grep cdrom | awk '{print $3}') currentISO=$(virsh domblklist $seedvm --details | grep cdrom | awk '{print $4}') # eject current disk virsh change-media $seedvm $cdrom --eject $currentISO # insert vcenter installer iso virsh change-media $seedvm $cdrom --insert ~/Downloads/VMware-VCSA-all-7.0.3-18778458.iso

Install vCenter (from seed)

Now login to the seed VM and mount the cdrom from the OS level.

# ssh into seed vm ssh -i $project_path/tf-kvm-seedvm/id_rsa ubuntu@192.168.140.220 # check nslookup and reverse (MUST work or vcenter will fail!) nslookup esxi1.home.lab nslookup 192.168.140.236 nslookup vcenter.home.lab nslookup 192.168.140.237 # mount vcenter iso sudo mkdir -p /media/iso sudo mount -t iso9660 -o loop /dev/cdrom /media/iso # do installation, takes about 15 min cd /media/iso/vcsa-cli-installer sudo lin64/vcsa-deploy install --no-ssl-certificate-verification --accept-eula --acknowledge-ceip ~/vcsa-esxi.json

Create basic vCenter objects

Validate the vcenter installation as described in my vCenter 7.0 article, and configure the datacenter, cluster, and then add the esxi host. You can use govc to create these object instead of the web UI.

cd ~ source ~/source-govc-vars.sh # create datacenter govc datacenter.create mydc1 # create cluster with DRS enabled (for res pools) govc cluster.create mycluster1 govc cluster.change -drs-enabled mycluster1 # add esxi1 host to cluster # would have used 'host.add' if we wanted it added directly to DC govc cluster.add -cluster mycluster1 -hostname esxi1.home.lab -username root -password ExamplePass@456 -noverify=true -connect=true

Add categories for VM tags (from seed)

In order to add VM tags on each cluster node, the following vSphere categories need to be created.

# tag categories for Anthos VM tracking govc tags.category.create machine.onprem.gke.io/cluster-name govc tags.category.create machine.onprem.gke.io/cluster-name/nodepool-name

We will also add our own custom tag category.

# our custom tag category, with two possible tags govc tags.category.create purpose govc tags.create -c=purpose testing govc tags.create -c=purpose production

Add vCenter service account for Anthos (from seed)

source ~/source-govc-vars.sh # show all current sso users govc sso.user.ls # create anthos@vsphere.local govc sso.user.create -p 'ExamplePass@456' -R Administrators anthos # add anthos user to Administrators groups govc sso.group.update -a anthos Administrators

OR if you prefer to do it from the web UI.

- Login to https://vcenter.home.lab (user/pass=Administrator@vsphere.local/ExamplePass@456)

- Menu > Administration

- Single Sign On > Users and Groups > Users tab

- Domain “vsphere.local”, press “Add”; Username = anthos, first name=anthos, Press Add

- Single Sign On > Users and Groups > Groups tab

- Right click ‘Administrators’ group, select “Edit”. Enter “anthos” in member search, press <enter> to place in group listing. Press “Save”

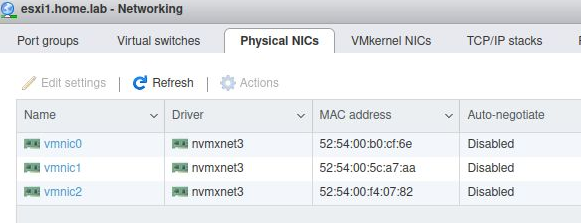

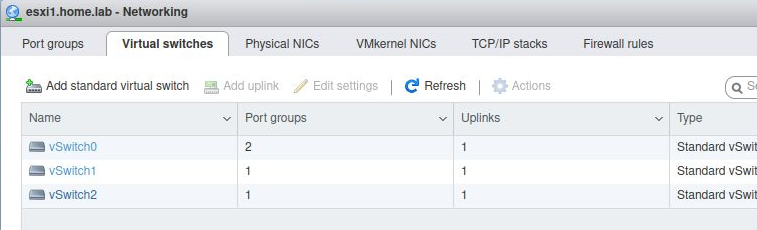

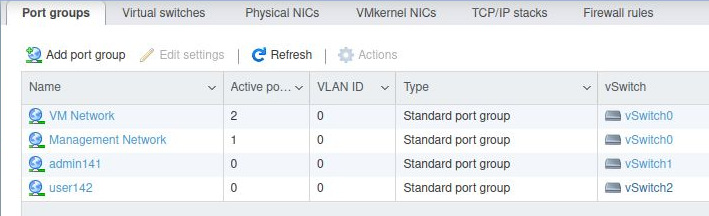

Expose routed networks from vCenter

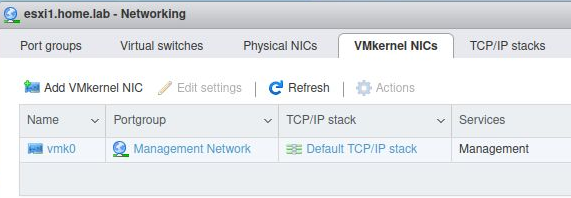

Each of the routed networks needs its own Port group associated with a Virtual Switch. The virtual switch is associated with a different physical NIC. There is only a single vmkernel nic ‘vmk0’.

To do this manually in vcenter, login to vcenter and go to ‘Hosts and Clusters’. Right-click on ‘esxi1.home.lab’ and select ‘Add Networking’.

- Select ‘Virtual Machine Port group for a standard switch’

- new standard switch with 1500 MTU

- Add active adapter ‘vmnic1’

- Network label ‘admin141’, vlan=0

- Finish

Manually repeat for ‘vmnic2’ on ‘user142’. Or you can use add these from the seed VM using govc, as shown below.

# list current switches govc host.vswitch.info # for vmnic1, create switch and add port group 'admin141' govc host.vswitch.add -mtu 1500 -nic vmnic1 vSwitch1 govc host.portgroup.add -vswitch vSwitch1 -vlan 0 admin141 # for vmnic2, create switch and add port group 'user142' govc host.vswitch.add -mtu 1500 -nic vmnic2 vSwitch2 govc host.portgroup.add -vswitch vSwitch2 -vlan 0 user142

When completed successfully, the “Networking” section from the esxi web UI (https://esxi1.home.lab.ui, user=root) should look like below. With three physical NIC.

A single VMkernel NIC.

Three total virtual switches: vSwitch0 (default),vSwitch1,vSwitch2

And two additional Port Group names (admin141,user142)

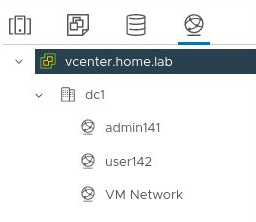

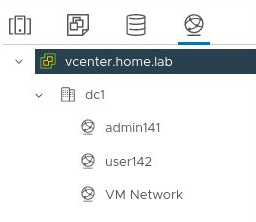

From the vCenter client (https://vcenter.home.lab, user=Administrator@vsphere.local), these same port group names (admin141,user142) will now be shown in the network list.

Testing vsphere networks (from host)

In a previous section we tested the KVM routed networks (esxmgmt140,admin141,user142) by using guests VMs running in the KVM hypervisor. They were all able to communicate freely and use DNS properly.

We should do the same type of test, but with guest VMs provisioned nested inside the nested vSphere hypervisor. One in each of the three vSphere networks below.

Create Ubuntu 20 Focal template needed for testing

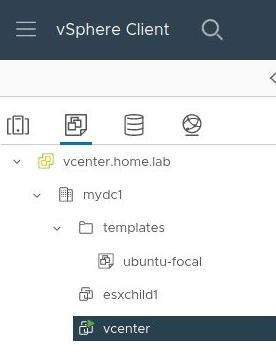

But first we need an Ubuntu Focal 20 template, which I provide instructions for building in this article. This takes about 30 minutes to put together, and when done you should have a vSphere template named “ubuntu-focal”.

When done, you should be able to see the “ubuntu-focal” VM template listed using govc from the seed VM.

# find template type vms govc find -type m

Or from vCenter in the “templates” folder.

Create guest VMs using template

You can now build an Ubuntu VM in each network and run validations to be sure we have the same connectivity for VMs running inside the nested ESXi host.

cd $project_path/tf-test-routed-networks-vsphere # create 3 VMs, one in each vsphere network # named 'vsphere140', 'vsphere141', 'vsphere142' make # test that each can reach the others # and that 'esxi1.home.lab' is dns resolvable ./run-tests.sh

Each of these vms should be able to reach each other as well as the outside world.

They can also reach their own dnsmasq instance at the .1 address of their network. However, they cannot reach the dnsmasq .1 of the other bridged networks. So, if you want a common DNS for them, you must use the IP address of the dnsmasq instance on the KVM host.

Copy files to Seed VM (from Host)

Copy the admin-ws-config.yaml used for installing the Admin Workstation and a set of convenience scripts to the seed VM.

# generate files from templates cd $project_path ansible-playbook playbook_generate_seedvm_files.yml

Configuring GCP project (from seed VM)

Login to the seed VM and setup the GCP project.

# ssh into seed vm ssh -i $project_path/tf-kvm-seedvm/id_rsa ubuntu@192.168.140.220

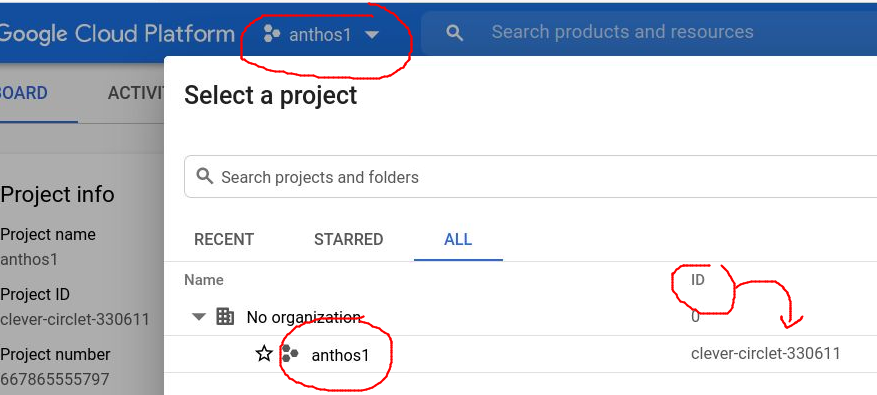

Now from the Google Cloud Console, if you select the project pulldown you can gather the GCP project name and project Id (these are usually different values).

Use this project name to set a Bash variable per below and prepare your GCP project to register an Anthos on-prem cluster.

# ssh into seed vm ssh -i $project_path/tf-kvm-seedvm/id_rsa ubuntu@192.168.140.220 # use your GCP project name (not project id) # based on the screenshot above this would be 'anthos1' export project_name=anthos1 cd ~/seedvm # do all of the below, OR just the 2 scripts directly below # source ./source-gcp-login.sh $project_name # ./enable-gcp-services.sh # workaround for new login procedure DISPLAY=":0" gcloud auth login --no-launch-browser # get project id # based on screenshot above this evaluates to 'clever-circlet-330611' projectId=$(gcloud projects list --filter="name~$project_name" --format='value(project_id)') # set project gcloud config set project $projectId # enable GCP services required for Anthos on-prem gcloud services enable \ anthos.googleapis.com \ anthosgke.googleapis.com \ cloudresourcemanager.googleapis.com \ container.googleapis.com \ gkeconnect.googleapis.com \ gkehub.googleapis.com \ serviceusage.googleapis.com \ stackdriver.googleapis.com \ monitoring.googleapis.com \ logging.googleapis.com # fleet host project for cluster lifecycle from console gcloud services enable \ iam.googleapis.com \ anthosaudit.googleapis.com \ opsconfigmonitoring.googleapis.com \ storage.googleapis.com \ connectgateway.googleapis.com # also need for pulling from private gcr.io registry gcloud services enable containerregistry.googleapis.com # needed starting in 1.9 gcloud service enable opsconfigmonitoring.googleapis.com # needed starting in 1.13 gcloud service enable connectgateway.googleapis.com

GCP Service Account (from seed)

As described in the documentation, you must create a GCP service account referred to as the ‘Component Access‘ service account (it was called the ‘Allow Listed’ account in Anthos 1.4).

cd ~/seedvm # do all of the commands below, OR just the script directly below # ./create-component-access-sa-svcacct.sh # list current service accounts gcloud iam service-accounts list # create service account newServiceAccount="component-access-sa" gcloud iam service-accounts create $newServiceAccount --display-name "anthos allowlisted" --project=$projectId # wait for service account to be fully consistent sleep 45 # get email address form accountEmail=$(gcloud iam service-accounts list --project=$projectId --filter=$newServiceAccount --format="value(email)") # download key gcloud iam service-accounts keys create $newServiceAccount.json --iam-account $accountEmail # path used in admin-ws-config.yml realpath $newServiceAccount.json

This created the file “component-access-sa.json”, which is a key that will be used to gain access to this account in the Anthos configuration files.

Add the IAM roles for this Component Access service account.

cd ~/seedvm # do all of the commands below, OR just the script directly below # ./set-roles-component-access-sa.sh gcloud projects add-iam-policy-binding $projectId --member "serviceAccount:$accountEmail" --role "roles/serviceusage.serviceUsageViewer" gcloud projects add-iam-policy-binding $projectId --member "serviceAccount:$accountEmail" --role "roles/iam.serviceAccountCreator" gcloud projects add-iam-policy-binding $projectId --member "serviceAccount:$accountEmail" --role "roles/iam.roleViewer" # was not asked for in docs, but am having problems with back-off pulling image during admin cluster creation so trying gcloud projects add-iam-policy-binding $projectId --member "serviceAccount:$accountEmail" --role "roles/storage.objectViewer"

There are 2 more service accounts needed during the Anthos installation:

- connect-register service account (connect-register-sa.json)

- logging-monitoring service account (log-mon-sa.json)

But we do not have to create them manually, because during the admin worktation creation, the ‘–auto-create-service-accounts’ flag will have gkeadm create them.

GCP Current Context roles (from seed)

The logged in GCP user must have these roles to auto create the other service accounts (which is needed by gkeadm).

cd ~/seedvm

# all commands below or just script directly below

# ./set-gcp-current-user-iam-roles.sh

gcpUser=$(gcloud config get-value account)

gcloud projects add-iam-policy-binding $projectId --member="user:${gcpUser}" --role="roles/resourcemanager.projectIamAdmin"

gcloud projects add-iam-policy-binding $projectId --member="user:${gcpUser}" --role="roles/serviceusage.serviceUsageAdmin"

gcloud projects add-iam-policy-binding $projectId --member="user:${gcpUser}" --role="roles/iam.serviceAccountCreator"

gcloud projects add-iam-policy-binding $projectId --member="user:${gcpUser}" --role="roles/iam.serviceAccountKeyAdmin"

Create and set vcenter role (from seed)

As described in the documentation, create an ‘anthos’ role and assign it to the ‘anthos’ vcenter service account created earlier.

cd ~ source ~/source-govc-vars.sh # show available resource pools # will only show default '/mydc1/host/mycluster1/Resources' govc find / -type p # create resource pools for admin and user clusters govc pool.create /mydc1/host/mycluster1/admin govc pool.create /mydc1/host/mycluster1/user # show folders govc find / -type f # create vcenter folder for admin workstation, admin and user cluster govc folder.create /mydc1/vm/admin-ws govc folder.create /mydc1/vm/admincluster govc folder.create /mydc1/vm/userclusters # set roles for anthos service account in on-prem vcenter seedvm/set-vcenter7-roles-for-anthos.sh

Get gkeadmin utility (from seed)

Download the latest 1.13 release.

# copy down gkeadm utility cd ~/seedvm gsutil cp gs://gke-on-prem-release/gkeadm/1.13.0-gke.525/linux/gkeadm gkeadm chmod +x gkeadm ./gkeadm version

Do not use gkeadm to ‘create config’, because will be creating a tailored ‘admin-ws-config.yaml’ to this directory in a later step.

Manually import OVA for cluster (from seed)

To prepare for the cluster creation, we will manually import the required OVA. Although we will be using Container OS (cos) for both the Admin and User cluster, gkectl check-config and prepare command still fail if the ubuntu OVA is not there (known issue), so we will import both into the ‘admin-ws’ vcenter folder.

# manually runs gsutil to download and govc to import ./import-cos-ova-template.sh 1.13.0-gke.525 ./import-ubuntu-ova-template.sh 1.13.0-gke.525

You can validate they were imported into the “admin-ws” folder with govc.

govc ls /mydc1/vm/admin-ws

If you look in the vCenter UI, two additional VM templates now exist:

- admin-ws/gke-on-prem-ubuntu-<major>.<minor>-gke.<build>

- admin-ws/gke-on-prem-cos-<major>.<minor>-gke.<build>

An issue I found with gkectl 1.13 was that if you have a vCenter ‘folder’ defined for your admin and/or user clusters, the OS template must also be found in that vCenter folder. It is not sufficient for the template to be located in the “admin-ws” folder as we have done above. Even with “–skip-validation-os-images”, the “gkectl create” will fail unless the OS template is found in that folder.

To workaround this, we will use govc to clone the OS templates into both the Admin and User cluster folders.

version="1.13.0-gke.525"

# clone cos image to both vcenter folders as workaround

govc vm.clone -vm=/mydc1/vm/admin-ws/gke-on-prem-cos-${version} -folder=/mydc1/vm/admincluster -link=true -on=false -template=true gke-on-prem-cos-${version

govc vm.clone -vm=/mydc1/vm/admin-ws/gke-on-prem-cos-${version} -folder=/mydc1/vm/userclusters -link=true -on=false -template=true gke-on-prem-cos-${version}

# clone ubuntu image to both vcenter folders as workaround

govc vm.clone -vm=/mydc1/vm/admin-ws/gke-on-prem-ubuntu-${version} -folder=/mydc1/vm/admincluster -link=true -on=false -template=true gke-on-prem-ubuntu-${version

govc vm.clone -vm=/mydc1/vm/admin-ws/gke-on-prem-ubuntu-${version} -folder=/mydc1/vm/userclusters -link=true -on=false -template=true gke-on-prem-ubuntu-${version}

Get vcenter CA cert (from seed)

cd ~/seedvm # all commands below, OR script directly below # ./get-vcenter-ca.sh # get vcenter certs wget --no-check-certificate https://vcenter.home.lab/certs/download.zip unzip download.zip find certs # smoke test cert to ensure validity openssl x509 -in certs/lin/139b6ea5.0 -text -noout | grep Subject # copy file to standardized name cp certs/lin/139b6ea5.0 vcenter.ca.pem

Create Admin Workstation VM (from seed)

The Anthos admin and user cluster will not be deployed from this seed VM. Instead, Anthos requires us to create a guest VM called the “Admin Workstation”.

It is from this standardized “Admin Workstation” that we do the work of creating admin and user clusters. Below is the ‘gkeadm’ command that checks local and GCP prerequisites, and then uses the vCenter API to create the Admin Workstation.

cd ~/seedvm

# remove any older AdminWS keys before starting

rm -f ~/.ssh/gke-admin-workstation*

truncate -s0 ~/.ssh/known_hosts

# create admin workstation

# needs to download 8G OVA and upload to vSphere

$ ./gkeadm create admin-workstation --config admin-ws-config.yaml -v 3 --auto-create-service-accounts

Using config file "admin-ws-config.yaml"...

Running preflight validations...

- Validation Category: Tools

- [SUCCESS] gcloud

- [SUCCESS] ssh

- [SUCCESS] ssh-keygen

- [SUCCESS] scp

- Validation Category: Config Check

- [SUCCESS] Config

- Validation Category: SSH Key

- [SUCCESS] SSH key path

- Validation Category: Internet Access

- [SUCCESS] Internet access to required domains

- Validation Category: GCP Access

- [SUCCESS] Read access to GKE on-prem GCS bucket

- Validation Category: vCenter

- [SUCCESS] Credentials

- [SUCCESS] vCenter Version

- [SUCCESS] ESXi Version

- [SUCCESS] Datacenter

- [SUCCESS] Datastore

- [SUCCESS] Resource Pool

- [SUCCESS] Folder

- [SUCCESS] Network

- [SUCCESS] Datadisk

All validation results were SUCCESS.

Reusing VM template "gke-on-prem-admin-appliance-vsphere-1.13.0-gke.525" that already exists in vSphere.

Creating admin workstation VM "gke-admin-ws"... /

Creating admin workstation VM "gke-admin-ws"... DONE

Waiting for admin workstation VM "gke-admin-ws" to be assigned an IP.... DONE

******************************************

Admin workstation VM successfully created:

- Name: gke-admin-ws

- IP: 192.168.140.221

- SSH Key: /home/ubuntu/.ssh/gke-admin-workstation

******************************************

gkectl version

gkectl 1.13.0-gke.525 (git-17a2a7eaf)

Add --kubeconfig to get more version information.

docker version

Client:

Version: 20.10.11

API version: 1.41

Go version: go1.16.2

Git commit: 20.10.11-0ubuntu0~20.04.1~anthos1

Built: Fri Jan 14 01:02:39 2022

OS/Arch: linux/amd64

Context: default

Experimental: true

Server:

Engine:

Version: 20.10.11

API version: 1.41 (minimum version 1.12)

Go version: go1.16.2

Git commit: 20.10.11-0ubuntu0~20.04.1~anthos1

Built: Thu Jan 13 13:34:48 2022

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.6.6-0ubuntu0~20.04.1~gke1

GitCommit:

runc:

Version: 1.0.0~rc95-0ubuntu1~20.04.1~anthos1

GitCommit:

docker-init:

Version: 0.19.0

GitCommit:

Checking NTP server on admin workstation...

timedatectl

Local time: Mon 2022-10-10 20:54:24 UTC

Universal time: Mon 2022-10-10 20:54:24 UTC

RTC time: Mon 2022-10-10 20:54:24

Time zone: Etc/UTC (UTC, +0000)

System clock synchronized: yes

NTP service: active

RTC in local TZ: no

Getting component access service account...

Creating other service accounts and JSON key files...

- connect-register-sa-2210102054

- log-mon-sa-2210102054

Enabling APIs...

- project anthos1-365115 (for component-access-sa)

- serviceusage.googleapis.com

- iam.googleapis.com

- cloudresourcemanager.googleapis.com

Configuring IAM roles for service accounts...

- log-mon-sa-2210102054 for project anthos1-365115

- roles/stackdriver.resourceMetadata.writer

- roles/opsconfigmonitoring.resourceMetadata.writer

- roles/logging.logWriter

- roles/monitoring.metricWriter

- roles/monitoring.dashboardEditor

- component-access-sa for project anthos1-365115

- roles/serviceusage.serviceUsageViewer

- roles/iam.serviceAccountViewer

- roles/iam.roleViewer

- roles/compute.viewer

- connect-register-sa-2210102054 for project anthos1-365115

- roles/gkehub.editor

- roles/serviceusage.serviceUsageViewer

Preparing "credential.yaml" for gkectl...

Copying files to admin workstation...

- vcenter.ca.pem

- component-access-sa.json

- connect-register-sa-2210102054.json

- log-mon-sa-2210102054.json

- /tmp/gke-on-prem-vcenter-credentials3767753755/credential.yaml

Preparing "admin-cluster.yaml" for gkectl...

Preparing "user-cluster.yaml" for gkectl...

********************************************************************

Admin workstation is ready to use.

WARNING: file already exists at "/home/ubuntu/seedvm/gke-admin-ws". Overwriting.

Admin workstation information saved to /home/ubuntu/seedvm/gke-admin-ws

This file is required for future upgrades

SSH into the admin workstation with the following command:

ssh -i /home/ubuntu/.ssh/gke-admin-workstation ubuntu@192.168.140.221

********************************************************************

If you examine the output, you can see the json files for the service accounts were copied directly over to the Admin Workstation.

...

Copying files to admin workstation...

- vcenter.ca.pem

- anthos-allowlisted.json

- connect-register-sa-2210102054.json

- log-mon-sa-2210102054.json

- /tmp/gke-on-prem-vcenter-credentials3767753755/credential.yaml

...

And if you look in vcenter, the ‘admin-ws’ folder now contains the vm ‘gke-admin-ws’. And the vm template ‘gke-on-prem-admin-appliance-vsphere-<major>.<minor>-gke.<build>’.

Manually cat the private key of the admin workstation so we can use it from our host, and not just the guest seed VM.

# cat private key then exit seed vm cat /home/ubuntu/.ssh/gke-admin-workstation # exit ssh, go back to host exit

Copy files to Admin Workstation (from host)

Now back at our host, paste the Admin Workstation private key so we can reach it directly (instead of needing to go through the seed VM). Then take the template files and use ansible to generate environment specific files on the Admin Workstation.

# put adminws ssh key so we can ssh directly to admin ws cd $project_path/needed_on_adminws # paste in content manually vi gke-admin-workstation # place stricter key permissions chmod 400 gke-admin-workstation cd $project_path # install minimal set of OS packages and settings ansible-playbook playbook_adminws.yml # generate environment specific files ansible-playbook playbook_generate_adminws_files.yml

Login to Admin Workstation (from host)

Now that we have the adminws ssh private key available on our host, ssh directly to the Admin WS. The seed VM served its purpose, and we will not use it in this article again.

cd $project_path/needed_on_adminws # login to admin ws ssh -i $project_path/needed_on_adminws/gke-admin-workstation ubuntu@192.168.140.221 # standardize json key names, so they work with prepared files mv connect-register-sa-*.json connect-register-sa.json mv log-mon-sa-*.json log-mon-sa.json # look at file listing on admin ws ls -l

You will find a listing that looks similiar to below, where many of the files were copied over from the seed VM.

- ‘admin-cluster.yaml’ for creating admin cluster

- ‘admin-block.yaml’ IP addresses for admin cluster

- ‘user-cluster.yaml’ for creating user cluster

- ‘user-block.yaml’ IP addresses for seesaw

- ‘vcenter-ca.pem’ vcenter CA cert

- ‘component-access-sa.json’ anthos -allowlisted service account json

- ‘connect-register-sa.json’ gcp service account key

- ‘log-mon-sa.json’ gcp service account key for stackdriver

- ‘credential.yaml’ – vCenter and private registry credentials

Finally, increase the ssh timeout for the Admin Workstation, or else you will get timeouts on long-runnng gkectl commands. This does not change the current ssh session settions, just subsequent ones.

# all commands below or script directly below # ./adminws_ssh_increase_timeout.sh # change the ssh timeout for long running gke operations sudo sed -i 's/^ClientAliveInterval.*/ClientAliveInterval 60/' /etc/ssh/sshd_config sudo sed -i 's/^ClientAliveCountMax.*/ClientAliveCountMax 240/' /etc/ssh/sshd_config # TMOUT will also cause the ssh client to timeout cd /etc/profile.d && sudo sed -i 's/TMOUT=.*/TMOUT=600/' $(grep -sr ^TMOUT -l) # restart ssh service sudo systemctl reload sshd

Validate configuration files for Admin Cluster (from Admin WS)

# validate version

$ gkectl version

# run config check

$ gkectl check-config --config admin-cluster.yaml --fast -v 3

- Validation Category: Config Check

- [SUCCESS] Config

- Validation Category: Internet Access

- [SUCCESS] Internet access to required domains

- Validation Category: GCP

- [SUCCESS] GCP service

- [SUCCESS] GCP service account

- [SUCCESS] Cluster location

- Validation Category: GKE Hub

- [SUCCESS] GKE Hub

- Validation Category: Container Registry

- [SUCCESS] Docker registry access

- Validation Category: VCenter

- [SUCCESS] Credentials

- [SUCCESS] vCenter Version

- [SUCCESS] ESXi Version

- [SUCCESS] Datacenter

- [SUCCESS] Datastore

- [SUCCESS] Resource pool

- [SUCCESS] Folder

- [SUCCESS] Network

- [SUCCESS] Data disk

- Validation Category: MetalLB

- [SUCCESS] MetalLB validation

- Validation Category: Network Configuration

- [SUCCESS] CIDR, VIP and static IP (availability and overlapping)

- Validation Category: DNS

- [SUCCESS] DNS (availability)

- Validation Category: TOD

- [SUCCESS] Local TOD (availability)

- Validation Category: VIPs

- [SUCCESS] Ping (availability)

- Validation Category: Node IPs

- [SUCCESS] Ping (availability)

- Validation Category: Datacenter Name

- [SUCCESS] Datacenter Name

- Validation Category: Cluster Name

- [SUCCESS] Cluster Name

- Validation Category: Datastore Name

- [SUCCESS] Datastore Name

- Validation Category: Network Name

- [SUCCESS] Network Name

All validation results were SUCCESS.

Prepare for Admin Cluster (from Admin WS)

Prepares for the admin cluster install by doing prerequisite checks, uploading any ova images, and checking the bundle cache.

Since we already uploaded the OVA in an earlier step, most of the time is spent checking the bundle cache.

# takes about 10 minutes

$ gkectl prepare --config admin-cluster.yaml -v 3

Reading config with version "v1"

- Validation Category: Config Check

- [SUCCESS] Config

- Validation Category: OS Images

- [SUCCESS] Admin cluster OS images exist

- Validation Category: Internet Access

- [SUCCESS] Internet access to required domains

- Validation Category: GCP

- [SUCCESS] GCP service

- [SUCCESS] GCP service account

- Validation Category: Container Registry

- [SUCCESS] gcr.io/gke-on-prem-release access

- [SKIPPED] Docker registry access: No registry config specified

- Validation Category: VCenter

- [SUCCESS] Credentials

- [SUCCESS] vCenter Version

- [SUCCESS] ESXi Version

- [SUCCESS] Datacenter

- [SUCCESS] Datastore

- [SUCCESS] Resource pool

- [SUCCESS] Folder

- [SUCCESS] Network

Some validations were SKIPPED. Check the report above.

Decompressing GKE On-Prem Bundle:

"/var/lib/gke/bundles/gke-onprem-vsphere-1.13.0-gke.525-full.tgz"......

DONE

Logging in to gcr.io/gke-on-prem-release

Finished preparing the container images.

Reusing VM template "gke-on-prem-ubuntu-1.13.0-gke.525" that already exists in vSphere.

Reusing VM template "gke-on-prem-cos-1.13.0-gke.525" that already exists in vSphere.

Finished preparing the OS images.

Successfully prepared the environment.

The prepare can take a while (20 minutes), you can tail the logs from another session to the Admin WS to monitor progress in more detail.

# tail from another session to monitor progress of gkectl tail -f logs/*

Create load balancer for admin cluster

We are using MetalLB for this new Anthos 1.13 deployment. MetalLB is an in-cluster loadbalancer, so in constrast to SeeSaw, there is no need to create seperate VMs using ‘gekctl create load-balancer’.

The MetalLB solution will be deployed in-cluster as a Daemonset during admin cluster creation in the next section.

Create admin cluster (from Admin WS)

It is now time to create your Anthos Admin Cluster that will manage all the user clusters as well as serve as the control plane for all user clusters.

# takes about 45 minutes

$ gkectl create admin --config admin-cluster.yaml -v 3

Reading config with version "v1"

Creating cluster "gkectl" ...

DEBUG: docker/images.go:67] Pulling image: gcr.io/gke-on-prem-release/kindest/node:v0.14.0-gke.2-v1.24.2-gke.1900 ...

✓ Ensuring node image (gcr.io/gke-on-prem-release/kindest/node:v0.14.0-gke.2-v1.24.2-gke.1900) 🖼

✓ Preparing nodes 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Waiting ≤ 5m0s for control-plane = Ready ⏳

• Ready after 27s 💚

Waiting for external cluster control plane to be healthy... DONE

Applying admin bundle to external cluster

Applying Bundle CRD YAML... DONE

Applying Bundle CRs... DONE

...

- Validation Category: Config Check

- [SUCCESS] Config

- Validation Category: OS Images

- [SUCCESS] Admin cluster OS images exist

- Validation Category: Internet Access

- [SUCCESS] Internet access to required domains

- Validation Category: GCP

- [SUCCESS] GCP service

- [SUCCESS] GCP service account

- [SUCCESS] Cluster location

- Validation Category: GKE Hub

- [SUCCESS] GKE Hub

- Validation Category: Container Registry

- [SUCCESS] Docker registry access

- Validation Category: VCenter

- [SUCCESS] Credentials

- [SUCCESS] vCenter Version

- [SUCCESS] ESXi Version

- [SUCCESS] Datacenter

- [SUCCESS] Datastore

- [SUCCESS] Resource pool

- [SUCCESS] Folder

- [SUCCESS] Network

- [SUCCESS] Data disk

- Validation Category: MetalLB

- [SUCCESS] MetalLB validation

- Validation Category: Network Configuration

- [SUCCESS] CIDR, VIP and static IP (availability and overlapping)

- Validation Category: DNS

- [SUCCESS] DNS (availability)

- Validation Category: TOD

- [SUCCESS] Local TOD (availability)

- Validation Category: VIPs

- [SUCCESS] Ping (availability)

- Validation Category: Node IPs

- [SUCCESS] Ping (availability)

- Validation Category: Datacenter Name

- [SUCCESS] Datacenter Name

- Validation Category: Cluster Name

- [SUCCESS] Cluster Name

- Validation Category: Datastore Name

- [SUCCESS] Datastore Name

- Validation Category: Network Name

- [SUCCESS] Network Name

All validation results were SUCCESS.

...

Waiting for admin cluster "gke-admin-27kn2" to be ready... DONE

Creating or updating control plane machine...

Creating or updating credentials for cluster control plane...

Rebooting admin node machines...

Creating or updating addon machines...

Rebooting admin node machines...

Cluster is running...

Creating dashboards in Cloud Monitoring

Skipping admin cluster backup since clusterBackup section is not set in admin cluster seed config

Registering admin cluster... DONE

Done provisioning admin cluster. You can access it with `kubectl --kubeconfig kubeconfig`

Cleaning up external cluster... DONE

At the end, you will have these vms in vcenter:

- gke-admin-master-xxxx

- gke-admin-node-yyy-zzz

- gke-admin-node-yyy-zzz

And a file ‘kubeconfig’ in the directory. Try a kubectl command that shows the current admin nodes and their version:

# point to Admin Cluster export KUBECONFIG=$(realpath kubeconfig) $ kubectl get nodes -o wide NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME admin-host1 Ready control-plane,master 113m v1.24.2-gke.1900 192.168.141.222 192.168.141.222 Container-Optimized OS from Google 5.10.133+ containerd://1.6.6 admin-host2 Ready 97m v1.24.2-gke.1900 192.168.141.223 192.168.141.223 Container-Optimized OS from Google 5.10.133+ containerd://1.6.6 admin-host3 Ready 97m v1.24.2-gke.1900 192.168.141.224 192.168.141.224 Container-Optimized OS from Google 5.10.133+ containerd://1.6.6

Notice the IP addresses in the wide listing are the IP from the admin-block.yaml.

Now ssh into an Admin Cluster master node. Use kubectl to get the secret and decode it.

# create key

kubectl get secrets -n kube-system sshkeys -o jsonpath='{.data.vsphere_tmp}' | base64 -d > ~/.ssh/admin-cluster.key && chmod 600 ~/.ssh/admin-cluster.key

# get count of images on node

ssh -i ~/.ssh/admin-cluster.key 192.168.141.222 "sudo ctr ns list; sudo ctr -n k8s.io images ls | wc -l"

View the in-cluster MetalLB deployment and its controlling ConfigMap.

$ kubectl get -n kube-system ds/metallb-speaker

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

metallb-speaker 3 3 3 3 3 kubernetes.io/os=linux,onprem.gke.io/lbnode=true 35m

# MetalLB configmap used to assign space

$ kubectl get -n kube-system configmap/metallb-config -o=jsonpath="{.data.config}"

address-pools:

- name: admin-addons-ip

protocol: layer2

addresses:

- 192.168.141.251/32

avoid-buggy-ips: false

auto-assign: false

Configure CoreDNS for the Admin Cluster (from AdminWS)

Starting in Anthos 1.9, CoreDNS replaced KubeDNS. You will need to configure the Kubernetes ‘ClusterDNS’ object on the Admin Cluster so that internal DNS entries in the ‘home.lab’ domain are resolvable.

# from the AmdminWS export KUBECONFIG=$(realpath kubeconfig) # apply DNS settings to 'ClusterDNS' object kubectl apply -f configure-clusterdns.yaml # deploy dns test pod, wait for deployment to settle kubectl apply -f k8s/dns-troubleshooting.yaml sleep 30 # this internal name should resolve to internal 10.100 IP kubectl exec -i -t dnsutils -- nslookup kubernetes.default # this public address should resolve to Google public IP kubectl exec -i -t dnsutils -- nslookup www.google.com # 'home.lab' domain should resolve to 192.168.140.237 # can take a minute or two for DNS to resolve correctly kubectl exec -i -t dnsutils -- nslookup vcenter.home.lab

If these name resolutions do not work, the User Cluster will not deploy properly.

Check config file for User Cluster (from Admin WS)

With the Admin Cluster now built, it is time to switch over to the User Cluster build.

This will use the prepared files: user-cluster.yaml and user-block.yaml

NOTE: the loadBalancer.vips.controlPlaneVIP in user-cluster.yaml sits in the admin141 network because the kubeapi for the User Cluster is exposed from the Admin Cluster (Admin Cluster is where the User cluster control plane is located). All other IP addresses related to User Cluster are in the user142 network.

# takes about 5 minutes

$ gkectl check-config --kubeconfig ./kubeconfig --config user-cluster.yaml --fast -v 3

- Validation Category: Config Check

- [SUCCESS] Config

- Validation Category: OS Images

- [SUCCESS] Admin cluster OS images exist

- Validation Category: Internet Access

- [SUCCESS] Internet access to required domains

- Validation Category: GCP

- [SUCCESS] GCP service

- [SUCCESS] GCP service account

- Validation Category: GKE Hub

- [SUCCESS] GKE Hub

- Validation Category: Container Registry

- [SUCCESS] Docker registry access

- Validation Category: VCenter

- [SUCCESS] Credentials

- [SUCCESS] VSphere CSI Driver

- [SUCCESS] vCenter Version

- [SUCCESS] ESXi Version

- [SUCCESS] Datacenter

- [SUCCESS] Datastore

- [SUCCESS] Resource pool

- [SUCCESS] Folder

- [SUCCESS] Network

- [SUCCESS] VSphere Datastore FreeSpace

- [SUCCESS] Datastore

- [SUCCESS] Tags

- Validation Category: MetalLB

- [SUCCESS] MetalLB validation

- Validation Category: Network Configuration

- [SUCCESS] CIDR, VIP and static IP (availability and overlapping)

- Validation Category: DNS

- [SUCCESS] DNS (availability)

- Validation Category: TOD

- [SUCCESS] Local TOD (availability)

- Validation Category: VIPs

- [SUCCESS] Ping (availability)

- Validation Category: Node IPs

- [SUCCESS] Ping (availability)

- Validation Category: HSM Secrets Encryption

- [SKIPPED] HSM Secrets Encryption: No HSM secrets encryption config specified

- Validation Category: NodePool Autoscale

- [SUCCESS] User cluster nodepool autoscale config for a user cluster

- Validation Category: Datacenter Name

- [SUCCESS] Datacenter Name

- Validation Category: Cluster Name

- [SUCCESS] Cluster Name

- Validation Category: Datastore Name

- [SUCCESS] Datastore Name

- Validation Category: Network Name

- [SUCCESS] Network Name

Some validations were SKIPPED. Check the report above.

Create load balancer for user cluster

We are using MetalLB for this Anthos 1.13 deployment. MetalLB is an in-cluster loadbalancer, so in constrast to SeeSaw, there is no need to create seperate VMs using ‘gekctl create load-balancer’.

The MetalLB solution will be deployed in-cluster as a Daemonset during user cluster creation in the next section.

Create User Cluster (from Admin WS)

# takes about 45 minutes

$ gkectl create cluster --kubeconfig ./kubeconfig --config user-cluster.yaml -v 3

Reading config with version "v1"

- Validation Category: Config Check

- [SUCCESS] Config

- Validation Category: OS Images

- [SUCCESS] Admin cluster OS images exist

- Validation Category: Reserved IPs

- [SUCCESS] Admin cluster reserved IP for new user master

- Validation Category: Internet Access

- [SUCCESS] Internet access to required domains

- Validation Category: GCP

- [SUCCESS] GCP service

- [SUCCESS] GCP service account

- Validation Category: GKE Hub

- [SUCCESS] GKE Hub

- Validation Category: Container Registry

- [SUCCESS] Docker registry access

- Validation Category: VCenter

- [SUCCESS] Credentials

- [SUCCESS] VSphere CSI Driver

- [SUCCESS] vCenter Version

- [SUCCESS] ESXi Version

- [SUCCESS] Datacenter

- [SUCCESS] Datastore

- [SUCCESS] Resource pool

- [SUCCESS] Folder

- [SUCCESS] Network

- [SUCCESS] VSphere Datastore FreeSpace

- [SUCCESS] Datastore

- [SUCCESS] Tags

- Validation Category: MetalLB

- [SUCCESS] MetalLB validation

- Validation Category: Network Configuration

- [SUCCESS] CIDR, VIP and static IP (availability and overlapping)

- Validation Category: DNS

- [SUCCESS] DNS (availability)

- Validation Category: TOD

- [SUCCESS] Local TOD (availability)

- Validation Category: VIPs

- [SUCCESS] Ping (availability)

- Validation Category: Node IPs

- [SUCCESS] Ping (availability)

- Validation Category: HSM Secrets Encryption

- [SKIPPED] HSM Secrets Encryption: No HSM secrets encryption config specified

- Validation Category: NodePool Autoscale

- [SUCCESS] User cluster n odepool autoscale config for a user cluster

- Validation Category: Datacenter Name

- [SUCCESS] Datacenter Name

- Validation Category: Cluster Name

- [SUCCESS] Cluster Name

- Validation Category: Datastore Name

- [SUCCESS] Datastore Name

- Validation Category: Network Name

- [SUCCESS] Network Name

Updating platform to "1.13.0-gke.525" if needed... -

Updating platform to "1.13.0-gke.525" if needed... DONE

Skipping admin cluster backup since clusterBackup section is not set in admin cluster seed config

Waiting for user cluster "user1" to be ready... -

Waiting for user cluster "user1" to be ready... DONE

Creating or updating master node: hasn't been seen by controller yet...

Creating or updating master node: 0/1 replicas are ready...

Creating or updating cluster control plane workloads: deploying

user-kube-apiserver-base, user-control-plane-base,

user-control-plane-clusterapi-vsphere, user-control-plane-etcddefrag: 0/1

statefulsets are ready...

Creating or updating cluster control plane workloads: deploying

user-control-plane-etcddefrag, user-control-plane-base,

user-control-plane-clusterapi-vsphere...

Creating or updating cluster control plane workloads...

Creating or updating node pools: pool-1: hasn't been seen by controller yet...

Creating or updating node pools: pool-1: 0/3 replicas are ready...

Creating or updating node pools: pool-1: 1/3 replicas are ready...

Creating or updating addon workloads...

Creating or updating addon workloads: 37/44 pods are ready...

Creating or updating addon workloads: 37/45 pods are ready...

Cluster is running...

Skipping admin cluster backup since clusterBackup section is not set in admin cluster seed config

Done provisioning user cluster "user1". You can access it with `kubectl --kubeconfig user1-kubeconfig`

You will see 3 new VMS representing the User Cluster worker nodes: “pool-1-xxx.yyy” and one additional VM on the Admin Cluster which is the control plane for the user1 cluster: “user1-x-yyyy”

And a new file ‘user1-kubeconfig’, which can be used to reach the User Cluster with kubectl.

# user cluster kubeconfig $ export KUBECONFIG=$(realpath user1-kubeconfig) $ kubectl get nodes -o wide NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME user-host1 Ready 11m v1.24.2-gke.1900 192.168.142.230 192.168.142.230 Container-Optimized OS from Google 5.10.133+ containerd://1.6.6 user-host2 Ready 11m v1.24.2-gke.1900 192.168.142.231 192.168.142.231 Container-Optimized OS from Google 5.10.133+ containerd://1.6.6 user-host3 Ready 11m v1.24.2-gke.1900 192.168.142.232 192.168.142.232 Container-Optimized OS from Google 5.10.133+ containerd://1.6.6

The IP addresses match the range from user-block.yaml.

Now ssh into a user node. Use kubectl to get the secret and decode it.

# create key for user cluster

kubectl --kubeconfig kubeconfig get secrets -n user1 ssh-keys -o jsonpath='{.data.ssh\.key}' | base64 -d > ~/.ssh/user1.key && chmod 600 ~/.ssh/user1.key

# get count of images running on user1 worker node

ssh -i ~/.ssh/user1.key 192.168.142.230 "sudo ctr ns list; sudo ctr -n k8s.io images ls | wc -l"

The Kubernentes control plane for the user cluster is on the Admin Cluster. So the MetalLB kubeAPI endpoint is found on the admin cluster.

# user control plane is found in admin cluster $ kubectl --kubeconfig kubeconfig -n user1 get service kube-apiserver NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE kube-apiserver LoadBalancer 10.100.41.137 192.168.141.245 443:30540/TCP,8132:30327/TCP 29m

The “label1: foo” and “environment: mytesting” labels specified in user-config.yaml are attached to each worker node.

kubectl --kubeconfig user1-kubeconfig get nodes --show-labels

The VMs associated and tagged at the vSphere level as belonging to the “user1” cluster can be queried with govc using the following snippet.

# from Host cd $project_path/govc source source-govc-vars.sh # query tags to find those tagged with 'user1' folder=/mydc1/vm/userclusters cluster=user1 for vmpath in $(govc ls $folder); do if govc tags.attached.ls -r $vmpath | grep -q $cluster; then echo "$vmpath is associated with $cluster cluster"; fi; done

Configure CoreDNS for the User Cluster (from AdminWS)

Starting in Anthos 1.9, CoreDNS replaced KubeDNS. You will need to configure the Kubernetes ‘ClusterDNS’ object on the Admin Cluster so that internal DNS entries in the ‘home.lab’ domain are resolvable.

# apply DNS settings to 'ClusterDNS' object kubectl apply -f configure-clusterdns.yaml # deploy dns test pod, wait for deployment to settle kubectl apply -f k8s/dns-troubleshooting.yaml sleep 30 # this internal name should resolve to internal 10.100 IP kubectl exec -i -t dnsutils -- nslookup kubernetes.default # this public address should resolve to Google public IP kubectl exec -i -t dnsutils -- nslookup www.google.com

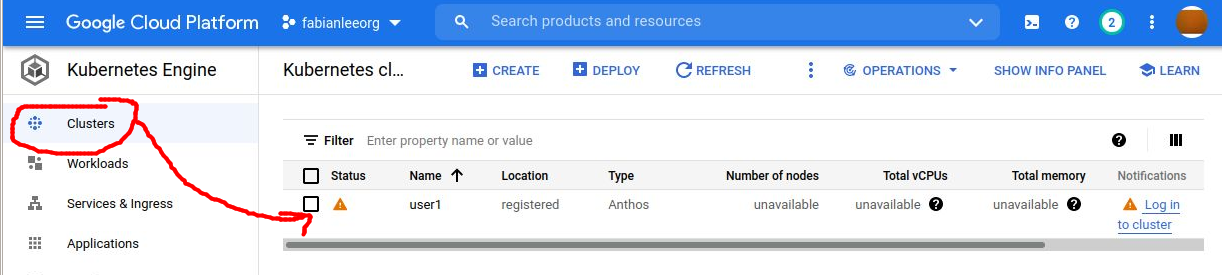

Connecting cluster to console UI

Now that the cluster is created and registered with GCP, you should go into the web console, console.cloud.google.com and establish a login context so you have visibility into cluster health and details.

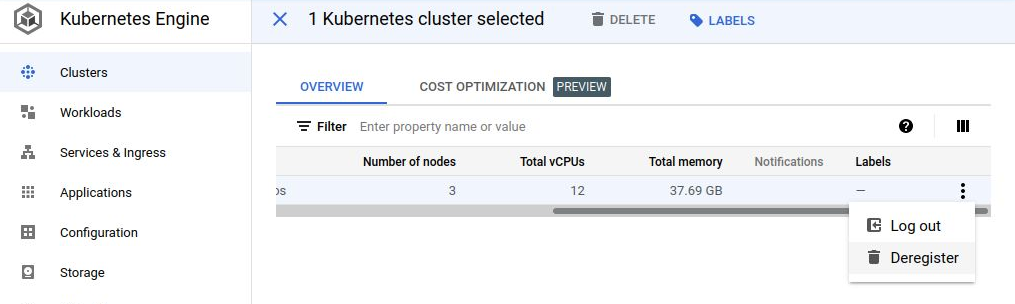

Click on the hamburger menu > Kubernetes Engine > Clusters. And click into the ‘users1’ cluster just created.

If you click into the cluster link, it is going to prompt you to authenticate with a list of several methods. While you could use your personal Google identity, this is not an ideal solution and you should use a token which is not tied to your personal identity.

Generating a token from a service account (from Admin Workstation)

This procedure is documented on the official page here. Note that since Kubernetes 1.24/Anthos 1.13, secrets are not automatically bound to a service account, they are manually created [1,2].

# from Admin WS cd ~/k8s ./generate-token-for-console-1.24.sh

A decoded token is output and this is the value you should paste into the dialog after you press “Login” at the web console for authentication with a token.

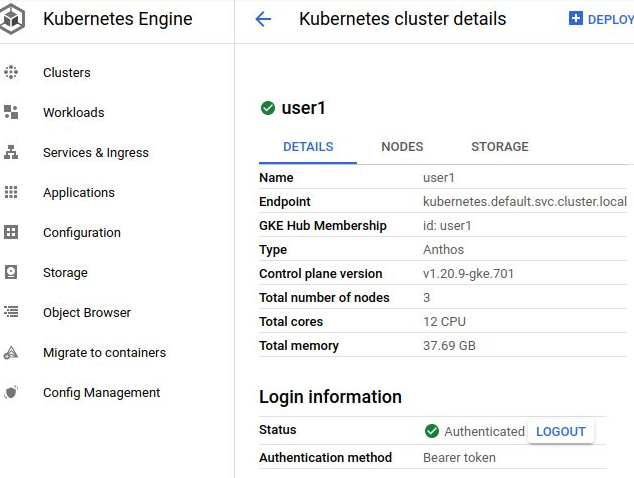

The “user1” cluster should have a green checkbox icon beside it now, and if you click on the “user1” cluster, you will see a details page similar to below.

Validate User Cluster service (from Admin WS)

Per the documentation here we will create a deployment of the Hello application. And then create a service which will expose it via the User Cluster load balancer.

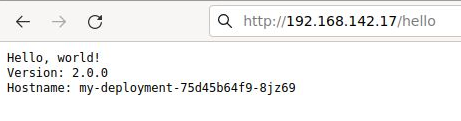

$ cd ~/k8s # create deployment and pods $ kubectl apply -f my-deployment.yaml deployment.apps/my-deployment created # view status of deployment $ kubectl get deployment my-deployment NAME READY UP-TO-DATE AVAILABLE AGE my-deployment 3/3 3 3 20s # view status of pods $ kubectl get pods NAME READY STATUS RESTARTS AGE my-deployment-5d7b664866-5r264 1/1 Running 0 48s my-deployment-5d7b664866-ncccf 1/1 Running 0 48s my-deployment-5d7b664866-qzrwr 1/1 Running 0 48s # existing set of services $ kubectl get services NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE kubernetes ClusterIP 10.101.0.1 443/TCP 12m # create a service for our deployment # loadBalancerIP set to '192.168.142.17' $ kubectl apply -f my-service.yaml service/my-service created # view service and external IP $ kubectl get services NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE kubernetes ClusterIP 10.101.0.1 443/TCP 12m my-service LoadBalancer 10.101.14.121 192.168.142.17 80:31554/TCP 30s # do HTTP request against service IP $ curl -k http://192.168.142.17:80/hello Hello, world! Version: 2.0.0 Hostname: my-deployment-75d45b64f9-sgdjn

If you do not assign an IP address in the deployment, one will be assigned from the user-cluster.yaml ‘loadBalancer.metalLB.addressPools, which ends up in the cluster as a ConfigMap as shown below.

kubectl -n kube-system get configmap metallb-config -o=jsonpath="{.data.config}"

address-pools:

- name: address-pool-1

protocol: layer2

addresses:

- 192.168.142.253/32

- 192.168.142.17-192.168.142.29

avoid-buggy-ips: false

auto-assign: true

This is also available from the host browser at http://192.168.142.17/hello

Deregister and Delete User Cluster

If you want to completely delete and rebuild a User Cluster, you need to be sure to first deregister the original one. Deleting the user cluster does break the connection, but the registration entry is still there until you go to the Hamburger Menu>Kubernetes Engine>Clusters, then select the user cluster and select the “Deregister” action which can be found by scrolling to the right-most part of the row.

As of Anthos 1.8, this deregistration is not supported via gcloud, it must be done from the console UI.

The dialog will then direct you to delete the membership object in the user cluster before doing a ‘gkectl delete’.

# delete membership object kubectl --kubeconfig user1-kubeconfig delete membership membership # delete user cluster gkectl delete cluster --kubeconfig ./kubeconfig --cluster user1

REFERENCES

Anthos, bundled load balancing with MetalLB

github fabianlee, link to diagrams in this blog post (edit on diagrams.net)

kubernetes.io, containerd integration

Ivan Velichko, containerd from CLI

Anthos/Kubernetes table of versions

NOTES

If you need to delete the Admin workstation

delete the VM from vCenter UI, “gke-admin-ws”. If you use the ESXi web admin, it might show as orphaned in vCenter still.

If in admin-ws-config.yaml the ‘dataDiskName’ is prefixed with a folder path, then you need to manually recreate the “datastore1/<folder>” vcenter datastore folder.

# delete current ssh key from seedVM rm ~/.ssh/gke-admin-workstation* # clear known fingerprint for AdminWS ssh-keygen -f ~/.ssh/known_hosts -R 192.168.140.221 # or clear known_hosts completely truncate -s 0 ~/.ssh/known_hosts # remove file noting details on adminWS rm ~/seedvm/gke-admin-ws-*

And if you recreate the admin ws with ‘gkeadm create’, do not use the flag ‘auto-create-service-accounts’ because they already exist.

If you need to delete an admin cluster [1]

The instructions for cluster deletion are basically to clean out the objects in the cluster, then delete the vms from vcenter. That would mean you would just delete the seesaw from vcenter the same way.

# from the admin workstation, you may need to delete the bootstrap cluster sudo docker stop gkectl-control-plane sudo docker rm gkectl-control-plane

Follow the instructions in docs, then delete thefollowing vCenter files:

- “dataDisk” referenced from admin-cluster.yaml which is on the vcenter datastore. Which in our case is “datstore1/admin-disk.vmdk”

- the “admin-disk-checkpoint.yaml” from the vcenter datastore which holds admin cluster state and credentials.

If you need to delete a user cluster [1]

# unregister first from console UI https://console.cloud.google.com/kubernetes/list/overview # delete the user cluster # notice how the kubeconfig must be for the admin cluster # add '--force' if necessary $ gkectl delete cluster --kubeconfig kubeconfig --cluster user1 Deleting user cluster "user1"... DONE Cluster is running... Terminating cluster... Terminating node pools: wait for node pools (pool-1) to be deleted... Terminating cluster... Terminating master node... User cluster "user1" is deleted.

If your user cluster is only partially created, you will have to delete the hub membership either in the web console or cli

gcloud container hub memberships unregister user1

govc to find available entities

# res pools, folders, datastores govc find / -type p govc find / -type f govc find / -type s

If esxi hangs on esxi “nfs41client loaded” then goes to paused state

check for disk full on KVM host pool location

check cqow2 sparse disk size when VM is powered on

sudo qemu-img info seedvm.qcow2 --force-share

Creating resource pools directly under datacenter (if you do not have cluster)

govc find / -type p # create resource pools for admin and user clusters govc pool.create */Resources/admin govc pool.create */Resources/user

generate admin and user cluster templates from the AdminWS per version of gkectl

gkectl create-config admin --config=admin-cluster-blueprint.yaml --gke-on-prem-version=1.13.0-gke.525 gkectl create-config cluster --config=user-cluster-blueprint.yaml --gke-on-prem-version=1.13.0-gke.525

list roles being used by current user of govc

govc role.ls

cloning Ubuntu image to user clusters folder with govc

govc vm.clone -vm=/mydc1/vm/admin-ws/gke-on-prem-ubuntu-1.13.0-gke.525 -folder=/mydc1/vm/userclusters -link=true -on=false -template=true gke-on-prem-ubuntu-1.13.0-gke.525